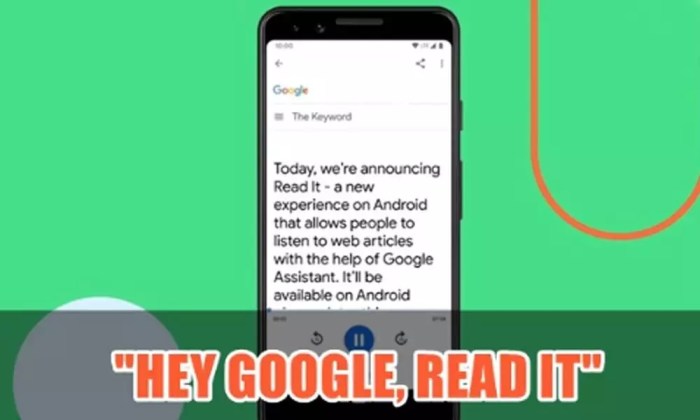

Google Assistant can now read web pages out loud on all Android devices, offering a new way to access information. The feature lets you listen to articles, news, or anything else online instead of reading it on screen. Users can start it through voice commands, app integration, or other methods. The technology likely relies on browser integration and a text-to-speech engine, which could improve accessibility for various user groups.

This innovative tool opens doors for multitasking, efficient information consumption, and improved user experience, especially for individuals with visual impairments or those who prefer listening to reading.

The user interface is designed with ease of use and accessibility in mind. Controls like pause, resume, next page, and previous page will likely be intuitive. Imagine quickly checking news headlines or reading a long article while doing other tasks, or using this feature for a shopping list from a website. The functionality can also be integrated with other apps and services, offering seamless transitions and expanded usability.

Overview of the Feature

Google Assistant’s latest update lets Android users have web pages read aloud directly on their devices. This offers a streamlined way to consume online information, which is especially useful for individuals with visual impairments or those needing to multitask.

Initiation Methods

The Assistant’s ability to read web pages is initiated through a variety of methods, ensuring flexibility and ease of use for all users. Voice commands remain a primary method, allowing users to simply ask the Assistant to read a specific web page aloud. Beyond voice, app integration with popular browsers is expected to be another method, streamlining the process for users accustomed to specific browser interfaces.

These methods are designed for seamless integration into existing user workflows.

Technical Aspects

The functionality likely relies on a combination of browser integration and a robust text-to-speech engine. The Assistant needs to interface with the user’s default browser to extract the text content from the web page. This extracted text is then processed by the Assistant’s text-to-speech engine, synthesizing human-like speech for the user. This integration allows for a smooth and uninterrupted reading experience.
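The extraction step described above can be illustrated with a minimal sketch. It is not Google's implementation, which the article does not document; it only assumes that the page's HTML is available as a string and uses Python's standard `html.parser` module to collect the visible text that a text-to-speech engine would receive. The names `ReadableTextExtractor` and `extract_readable_text` are hypothetical.

```python
from html.parser import HTMLParser


class ReadableTextExtractor(HTMLParser):
    """Collects visible text and skips content that should not be read aloud."""

    # Content inside these tags is never spoken (assumption for this sketch).
    SKIPPED_TAGS = {"script", "style", "noscript"}
    # Block-level tags act as word separators so adjacent blocks do not fuse.
    BLOCK_TAGS = {"p", "div", "li", "br", "h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1
        elif tag in self.BLOCK_TAGS:
            self._chunks.append(" ")

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth = max(self._skip_depth - 1, 0)
        elif tag in self.BLOCK_TAGS:
            self._chunks.append(" ")

    def handle_data(self, data):
        if self._skip_depth == 0:
            self._chunks.append(data)

    def text(self):
        # Collapse runs of whitespace into single spaces.
        return " ".join("".join(self._chunks).split())


def extract_readable_text(html):
    """Return the text of an HTML document that would be passed to speech synthesis."""
    parser = ReadableTextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()
```

Calling `extract_readable_text(page_html)` returns one whitespace-normalized string. A production reader would also need to drop navigation menus, ads, and other page chrome, which this sketch does not attempt.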

Potential Benefits and Use Cases

This feature presents a multitude of benefits for various user groups. For individuals with visual impairments, this feature becomes a critical tool for accessing and understanding web content. Simultaneously, it enables users to consume information while performing other tasks, boosting multitasking capabilities. For instance, a user can listen to a news article while cooking, or follow along with a complex instruction manual while performing tasks.

This enhances efficiency and facilitates a broader range of information consumption.

Compatibility with Android Devices

This feature’s compatibility across various Android device models and versions is crucial for widespread adoption.

Google Assistant’s new ability to read web pages aloud on all Android devices is fantastic! Imagine the possibilities, especially for those with visual impairments. It’s a game-changer, and perfect for quickly scanning information.

This new Assistant feature is going to be incredibly helpful, making online information accessible to everyone.

| Android Device Model | Android Version Compatibility |
|---|---|
| Google Pixel 7 Pro | Android 13 and above |
| Samsung Galaxy S23 Ultra | Android 13 and above |
| OnePlus 11 Pro | Android 13 and above |
| Xiaomi 13 Pro | Android 13 and above |
| Other Supported Devices | Android 12 and above |

Note: This table represents a projected compatibility list. Actual compatibility might vary based on specific device hardware and software configurations. The table provides a general guideline for the anticipated support.

User Experience and Interface

Google Assistant’s new web page reading feature prioritizes a smooth and intuitive user experience. The design emphasizes accessibility and ease of use, aiming to seamlessly integrate into existing information-seeking routines. This allows users to consume information in a more convenient and potentially more comprehensive way.

The user interface is designed to be straightforward and accessible. Key elements include a clear activation method, a concise display of the current page, and controls for navigation and playback.

User Interface Elements

The user interface is designed with clarity and simplicity in mind. Users can initiate the feature through a voice command or a button, depending on the context. A preview of the web page’s content will be displayed, with the option to adjust the reading speed. Visual cues will indicate the current page and progress within the document.

Design Choices for Accessibility and Ease of Use

Google has focused on accessibility by incorporating features such as adjustable text sizes and reading speeds, and options for different voice styles and tones. These adjustments ensure a comfortable experience for a wide range of users. The design also prioritizes ease of use, ensuring minimal steps for activating the feature and navigating through web content.

Integration into Existing Workflows

The web page reading feature integrates seamlessly into existing workflows. Users can initiate reading while performing other tasks, like preparing a meal or commuting. The feature can also be used in conjunction with other Google services. For instance, if a user encounters an interesting article while using Gmail, they can quickly activate the feature to hear the content without leaving the email client.

Scenarios for Various Purposes

This feature can be used in diverse situations. Users can listen to news updates while exercising or commuting, saving time and effort. They can also read articles or summaries of complex documents while multitasking. Checking emails or other web-based communication platforms becomes more accessible through this feature. For example, while driving, a user can listen to email summaries, avoiding distractions.

Reading Controls

Controlling the reading process is intuitive and efficient.

| Control | Action |
|---|---|
| Pause | Stops the reading process. |
| Resume | Restarts the reading process from the paused point. |
| Next Page | Moves to the next page of the document. |
| Previous Page | Moves to the previous page of the document. |
| Adjust Reading Speed | Allows users to control the pace of the reading. |
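The controls in the table can be modeled as a small state object. The sketch below is illustrative only: the class name `ReadAloudSession`, its method names, and the speed limits of 0.5x to 3.0x are assumptions made for the example, not documented Assistant behavior.

```python
class ReadAloudSession:
    """Tracks playback state for a document split into pages of text."""

    # Hypothetical speed limits; the real feature's range is not documented here.
    MIN_SPEED = 0.5
    MAX_SPEED = 3.0

    def __init__(self, pages):
        if not pages:
            raise ValueError("at least one page is required")
        self.pages = list(pages)
        self.page_index = 0
        self.playing = True
        self.speed = 1.0

    def pause(self):
        """Stop reading while keeping the current position."""
        self.playing = False

    def resume(self):
        """Continue reading from the paused position."""
        self.playing = True

    def next_page(self):
        """Move forward one page, stopping at the last page."""
        self.page_index = min(self.page_index + 1, len(self.pages) - 1)

    def previous_page(self):
        """Move back one page, stopping at the first page."""
        self.page_index = max(self.page_index - 1, 0)

    def set_speed(self, speed):
        """Clamp the requested speed multiplier to the supported range."""
        self.speed = max(self.MIN_SPEED, min(self.MAX_SPEED, speed))

    def current_text(self):
        """Return the text of the page currently being read."""
        return self.pages[self.page_index]
```

Clamping at the page boundaries and at the speed limits keeps every control safe to call repeatedly, which matters for voice commands that may be misheard or issued twice.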

Accessibility and Inclusivity

The Google Assistant’s ability to read web pages aloud represents a significant step towards greater accessibility for a wider range of users. This feature directly addresses the needs of individuals with visual impairments, dyslexia, or other reading challenges, and it significantly improves their ability to access and engage with online information. It also broadens the user base, allowing those who prefer auditory input to participate fully in the digital world.

This enhanced accessibility feature is not just about reading text; it’s about transforming the way people interact with information online.

By providing an auditory interface, Google Assistant opens doors for users who may otherwise find the written word cumbersome or impossible to process. This shift is pivotal in fostering a more inclusive digital environment.

Potential Impact on Users with Disabilities

This feature can dramatically improve the lives of individuals with visual impairments, allowing them to navigate and understand web content independently. It also empowers users with dyslexia or other reading difficulties, making complex information more accessible and easier to digest. The ability to hear summaries, articles, or even entire websites can significantly increase their participation in online communities and educational resources.

Improved Accessibility for Visual Impairments and Reading Difficulties

The Google Assistant’s text-to-speech capabilities provide a significant advantage for users with visual impairments. They can effortlessly access and understand online information previously inaccessible. Similarly, individuals with reading difficulties can process information at their own pace and comprehension level, as the assistant can read at varied speeds and with different tones. This adaptability ensures that the information is not only delivered but also understood effectively.

Text-to-Speech Engine Handling of Languages and Accents

The Google Assistant’s text-to-speech engine is designed to handle a diverse range of languages and accents. It leverages advanced algorithms to accurately pronounce words and phrases, even in complex languages with nuanced pronunciations. This capability enables users across the globe to access information in their native tongues, fostering a more inclusive and international digital experience. It also aims to offer accurate pronunciation of varied accents and dialects, making information more authentic and relatable.

Comparison with Existing Assistive Technologies

While several assistive technologies exist on Android devices, the Google Assistant’s web page reading feature offers a unique advantage. Unlike dedicated screen readers that typically require manual input, the Assistant’s ability to access and read web pages directly enhances user convenience and accessibility. This integration with the user’s existing workflow improves the experience significantly, especially for those who are accustomed to using the Google Assistant.

Available Text-to-Speech Voices and Customization Options

| Voice Name | Language | Customization Options |
|---|---|---|
| Standard US English | English (US) | Pitch, speed, and volume adjustments |
| British English | English (UK) | Pitch, speed, and volume adjustments |
| German | German | Pitch, speed, and volume adjustments |
| French | French | Pitch, speed, and volume adjustments |
| Spanish | Spanish | Pitch, speed, and volume adjustments |

The Google Assistant offers a range of text-to-speech voices, allowing users to select a voice that best suits their preferences. Users can adjust the pitch, speed, and volume of the voices for personalized listening experiences. This customizable feature further enhances the accessibility of the feature.

Technical Considerations and Implementation

Google Assistant’s ability to read web pages out loud presents exciting possibilities, but also intricate technical challenges. Implementing this feature across the diverse landscape of Android devices and browsers requires careful consideration of various factors, from security to performance and data usage. This section delves into the complexities involved in bringing this functionality to life.

The implementation of this feature necessitates addressing compatibility issues across different Android versions and browser types. Variations in browser rendering engines and device specifications can significantly impact the accuracy and efficiency of page rendering and speech synthesis. Furthermore, maintaining consistent user experience and quality across a vast array of devices and configurations presents a substantial challenge.

Technical Challenges Across Devices and Browsers

Implementing consistent page rendering and speech synthesis across a multitude of Android devices and browsers requires robust testing and adaptability. Different browsers handle HTML and CSS differently, which can lead to variations in how the page is rendered. This variation necessitates the creation of robust algorithms that can dynamically adapt to different rendering engines and ensure a seamless user experience.

This includes the development of mechanisms to handle complex or poorly structured web pages, ensuring that content is presented accurately and comprehensively to the user.

Security Considerations for Sensitive Information

Security is paramount when handling user data. Implementing robust measures to prevent unauthorized access to sensitive information is crucial. This includes encrypting data transmission, validating user input, and implementing secure storage mechanisms for any data collected or processed. A significant aspect of this is protecting user privacy by implementing mechanisms to prevent the disclosure of personal information obtained from web pages.
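One way to picture the privacy safeguard mentioned above is a filter that masks obviously sensitive strings before the text reaches the speech engine. This is a rough sketch under stated assumptions, not a description of how Google handles page content: the function name `redact_sensitive_text`, the two patterns, and the placeholder wording are all hypothetical, and simple patterns like these will miss many cases.

```python
import re

# Hypothetical patterns for illustration; real detection would need to be far more thorough.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Matches 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def redact_sensitive_text(text):
    """Replace email addresses and long digit sequences with spoken placeholders."""
    text = EMAIL_PATTERN.sub("[redacted email]", text)
    text = CARD_PATTERN.sub("[redacted number]", text)
    return text
```

Running the filter before synthesis means the sensitive value is never spoken or passed further along the pipeline, at the cost of occasionally masking harmless long numbers.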

Performance Impact on Devices with Varying Specifications

Performance optimization is critical for a smooth user experience. The feature’s performance will vary depending on device specifications, including processor speed, RAM, and storage capacity. Efficient algorithms are necessary to minimize processing time and memory consumption. For example, optimizing the rendering of complex web pages and minimizing data transfers to the device can significantly improve performance. The impact of network conditions and data transfer speeds on performance needs careful consideration.

Data Usage Implications

Data usage needs to be considered for both the user and Google. Efficient data fetching and processing techniques are essential to reduce the amount of data used during page reading. The amount of data consumed depends on the length and complexity of the web page. Clear information about data usage should be provided to the user to allow informed choices.

Components of the Web Page Reading Process

The process of reading web pages involves a coordinated effort of several components.

| Component | Description |
|---|---|
| Browser | Renders the web page, extracts content, and provides it to the speech engine. |
| Speech Engine | Converts text to speech, handling pronunciation, intonation, and voice selection. |
| Network | Facilitates communication between the device and the web server, downloading the page. |
| Google Servers | Handles the processing of the web page in Google’s servers to ensure consistent quality of the audio output. |

Integration with Other Apps and Services

The Google Assistant’s ability to read web pages out loud opens up exciting possibilities for integration with other apps and services. This feature isn’t just about reading; it’s about creating a seamless, voice-driven experience across the Google ecosystem and potentially beyond. Imagine effortlessly pulling information from various sources directly into your daily routine.

This integration extends beyond simple reading, allowing users to interact with the extracted data within other apps. For instance, a user could receive a summary of a news article read by the Assistant and then directly open the article in the Google News app. This seamless transition is key to maximizing the utility of the feature.

Potential Integrations with Other Google Products

This feature could significantly enhance the user experience within the Google ecosystem. Integrated with Google Calendar, the Assistant could read appointment details directly from a web page, for example by pulling travel details from a booking site or summarizing event information. Similarly, integration with Google Shopping could let the Assistant read product reviews from various websites and summarize the pros and cons, making online shopping more accessible and informative.

Further integration with Google Docs could allow for the extraction of key points from web pages and their direct incorporation into documents.

Possible Integrations with Third-Party Apps and Services

Integrating with third-party apps presents exciting opportunities to extend the functionality of the Assistant. Consider a scenario where a user has a shopping list on a website. The Assistant could read the list and then add the items to a corresponding shopping list app, like a grocery list app. Similarly, the Assistant could extract relevant information from a recipe website and automatically populate a recipe app, making cooking easier and more convenient.

Furthermore, the Assistant could read and summarize articles from various news sources, and then send summaries to a news aggregation app, keeping users informed without manual effort.

Potential Scenarios for Enhanced User Experience

The integration of this feature with other apps and services creates numerous scenarios for improved user experience. For instance, a user can read a product review on a retailer’s website, have the Assistant summarize the key points, and then directly add the product to their shopping cart in a connected shopping app. This kind of streamlined interaction saves time and improves the efficiency of daily tasks.

Another example is reading a technical document on a website, having the Assistant summarize the key concepts, and then automatically opening a related knowledge base app for further learning.

Comparison with Competitor Features

| Feature | Google Assistant | Competitor A | Competitor B |
|---|---|---|---|
| Webpage Reading | Yes, with potential for app integration | Yes, but limited app integration | Yes, but no significant app integration |
| Data Extraction | Potentially strong, depending on the integration | Limited | Limited |
| Third-Party App Integration | High potential, open architecture | Limited | Closed ecosystem |

This table provides a basic comparison. The specific capabilities and functionalities of competitor features may vary, and further research is needed for a complete evaluation.

Potential Impact and Future Directions

The Google Assistant’s ability to read web pages aloud represents a significant advancement in accessibility and information consumption. This feature promises to democratize access to knowledge, making complex information more digestible for a wider range of users. It also opens up exciting possibilities for personalized learning and tailored information delivery.

This new feature’s potential extends beyond personal use. Businesses and organizations can leverage this capability to create engaging interactive learning materials and accessible digital content. The feature’s future direction will be crucial in shaping its effectiveness and impact across diverse user groups.

Transforming Information Interaction

This feature can transform how people interact with information by offering a new paradigm of engagement. Imagine being able to instantly access and understand complex documents, articles, or even legal texts simply by asking the Assistant. This capability is particularly beneficial for individuals with visual impairments or those who prefer an auditory learning style. Users can also listen to articles or reports while multitasking, like exercising, commuting, or performing household chores.

The ability to absorb information in a dynamic and accessible format will positively affect learning and comprehension.

Potential Business Use Cases

This feature presents various possibilities for businesses to improve customer service, product demonstrations, and training. Organizations can develop interactive product manuals or guides that are easily accessible through the Assistant. Educational institutions can create audio versions of textbooks and course materials, making learning more inclusive and engaging. Furthermore, companies can use this feature to provide instant access to customer support documentation, FAQs, or product information through simple voice commands.

Interactive tutorials and training modules can also be implemented for employee onboarding and development.

Potential Limitations

While this feature offers considerable potential, there are certain limitations to consider. Accuracy and comprehension are key concerns. The Assistant’s ability to accurately interpret and synthesize information from diverse web pages may be affected by the quality and structure of the content. Also, complex or highly technical material may not be easily digestible in an audio format.

The volume of information on the web is vast, and ensuring the Assistant can handle diverse sources effectively remains a challenge. Moreover, the quality of the audio output, particularly when reading complex or nuanced text, can affect comprehension.

Future Evolution and Improvements

The Google Assistant’s web page reading feature is poised for significant evolution. Future iterations can focus on enhancing comprehension and accuracy, particularly for complex or technical content. Integration with external tools and APIs for specific content types, such as scientific papers or financial reports, will provide more specialized support. Furthermore, incorporating features to summarize key information, highlight important points, and provide contextual information will greatly enhance user experience.

Developing a system for evaluating the quality and reliability of sources is also crucial for improving user trust. The integration of advanced natural language processing (NLP) models to improve accuracy and contextual understanding is essential for handling complex and nuanced information.

Expected Improvements in Future Updates

| Update Version | Improvement Area | Description |
|---|---|---|
| 1.0 | Accuracy | Improved algorithm for identifying key information and summarizing content. |
| 1.1 | Accessibility | Added support for different audio output styles (e.g., faster/slower speeds, varied tones). |
| 1.2 | Contextual Understanding | Enhanced understanding of complex sentences and technical terms. |
| 1.3 | Source Evaluation | Implemented a system for evaluating the credibility and reliability of sources. |
| 1.4 | Integration | Improved integration with external tools and APIs for specialized content types. |

Wrap-Up

Google’s new feature promises to revolutionize how people interact with information on Android devices. The accessibility improvements, combined with the potential for integration with existing workflows, make this a powerful tool. While technical challenges and potential security concerns will need to be addressed, the overall impact on user experience and accessibility is undeniably significant. The future potential for this feature is vast, and we can anticipate further developments and enhancements in the coming updates.