Google AI Research's work on Street View panorama photo editing is changing how street-level imagery is captured, processed, and presented. The research covers techniques ranging from enhancing the visual quality of very large datasets to accurately stitching multiple images together, with the aim of improving clarity and detail in these often complex images.

The applications are vast, spanning from improved navigation to detailed urban planning, and the potential for future innovations is truly exciting.

This exploration delves into the intricate processes behind this technology, covering image enhancement, panorama stitching, data management, object recognition, and user experience considerations. It also tackles the ethical implications of manipulating such a vast dataset and examines the future of this innovative field.

Introduction to Google AI Research Street View Panorama Photo Editing

Google AI Research is actively exploring innovative ways to enhance and improve various aspects of image processing, including panorama images used in Google Street View. This involves not only refining existing techniques but also developing entirely new approaches to address the specific challenges posed by large-scale panorama datasets. This focus on panorama editing allows for greater accuracy, consistency, and user experience in Google Street View, which is critical for its continued utility and relevance.

Street View panorama images are a critical component of the platform, capturing a 360-degree view of a location.

These panoramas are typically composed of multiple overlapping photographs stitched together. The images captured vary in resolution, lighting conditions, and perspective, which presents unique challenges for consistent rendering and user experience.

Types of Panorama Images in Street View

Street View utilizes various types of panorama images, each with its own characteristics and potential issues. These include images taken under different weather conditions, at varying times of day, and with diverse camera equipment. This diversity of capture conditions requires robust algorithms to maintain a consistent and aesthetically pleasing view.

Potential Applications of Panorama Photo Editing

Panorama photo editing in Google Street View offers numerous potential benefits. Improved image quality, including enhanced color accuracy and reduced distortion, leads to a more immersive and realistic user experience. Automatic removal of unwanted elements like graffiti, construction, or temporary obstructions can maintain the visual accuracy and consistency of the views.

Existing Tools and Techniques for Editing Street View Panorama Photos

Currently, a combination of software and manual processes is used to edit street view panoramas. Specialized software handles stitching and basic corrections. However, these methods may not always be effective in addressing complex issues like dynamic lighting variations or handling large-scale image sets. This necessitates the development of more advanced AI-driven solutions. A key challenge in existing approaches is the ability to handle large datasets of street view images efficiently and effectively.

Automated techniques for stitching and correcting panoramic images are vital for maintaining consistency and quality in Street View.

Image Enhancement Techniques for Street View Panoramas

Street View panoramas, with their wide field of view and potential for capturing intricate details, often face challenges related to image quality. This can include noise, color inconsistencies, and a lack of sharpness. Effective enhancement techniques are crucial for improving the overall visual appeal and usability of these images, enabling better navigation, analysis, and visual understanding. These techniques are vital for both research and public consumption of the data.

Image enhancement techniques are applied to street view panoramas to address these challenges.

By using algorithms tailored to the specific characteristics of these large, high-resolution images, we can improve the clarity, color accuracy, and detail of the panoramas, ultimately improving their overall visual quality and usefulness.

Noise Reduction Techniques

Panorama images, particularly those captured in low-light conditions or with noisy sensors, can suffer from significant noise. Effective noise reduction is paramount for maintaining image quality and clarity. Various algorithms, such as Gaussian filtering and median filtering, are used to identify and remove noise artifacts while preserving the crucial details within the image. These algorithms work by smoothing out the image data, effectively reducing the impact of random variations in pixel values.

For example, Gaussian filtering replaces each pixel with a weighted average of its neighborhood, where the weights fall off with distance from the central pixel so that closer neighbors contribute more. Median filtering, on the other hand, replaces each pixel with the median value of its neighboring pixels, which can be more effective at removing sharp, impulsive noise.
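To make the difference concrete, here is a minimal sketch that applies both filters to a single scanline containing one impulsive spike. It uses only the Python standard library; the function names, the 3-pixel window, and the edge-replication rule are assumptions chosen for illustration, not a description of any Street View pipeline.

```python
import math
from statistics import median


def gaussian_kernel(radius, sigma):
    """Normalized 1-D Gaussian weights; pixels nearer the center weigh more."""
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def _clamped(row, i):
    """Replicate the edge pixel when the window runs past the row boundary."""
    return row[min(max(i, 0), len(row) - 1)]


def gaussian_filter(row, radius=1, sigma=1.0):
    """Weighted average of each pixel's neighborhood."""
    kernel = gaussian_kernel(radius, sigma)
    offsets = range(-radius, radius + 1)
    return [sum(k * _clamped(row, i + off) for k, off in zip(kernel, offsets))
            for i in range(len(row))]


def median_filter(row, radius=1):
    """Median of each pixel's neighborhood."""
    return [median(_clamped(row, i + off) for off in range(-radius, radius + 1))
            for i in range(len(row))]


# One scanline with a single "salt" pixel (255) in a flat region.
row = [10, 10, 10, 255, 10, 10, 10]
smoothed = gaussian_filter(row)    # spike is reduced but smeared into neighbors
despeckled = median_filter(row)    # spike is removed entirely
print(smoothed)
print(despeckled)
```

On this input the median filter restores the flat region, while the Gaussian filter only spreads the spike across adjacent pixels, which is why the two are often chosen for different kinds of noise.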

Color Correction Techniques

Color inconsistencies within street view panoramas can stem from variations in lighting conditions across the scene, causing inaccurate or unnatural color representations. Color correction techniques aim to compensate for these inconsistencies, resulting in a more accurate and visually appealing representation of the real-world scene. These techniques often involve adjusting the color balance and white balance of the image.

Color correction algorithms can be applied in various ways, such as using color histograms to identify and correct imbalances in color distribution, or using a reference color palette to establish a consistent color representation across the entire panorama. This ensures a more natural and consistent color representation throughout the entire image.
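As one simple illustration of correcting a color imbalance, the sketch below uses the gray-world assumption: it scales each channel so that its mean matches the overall mean brightness. This is a stand-in for the histogram-based and reference-palette methods described above, not a reproduction of them, and the pixel values are made up for the example.

```python
def gray_world_balance(pixels):
    """Scale R, G, B so each channel mean equals the overall mean.

    `pixels` is a list of (r, g, b) tuples with values in 0..255.
    """
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3
    # Guard against an all-zero channel to avoid dividing by zero.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [tuple(min(255, round(p[c] * gains[c])) for c in range(3))
            for p in pixels]


# A tile with a warm (reddish) cast: red is consistently the strongest channel.
warm_tile = [(200, 150, 100), (100, 75, 50), (160, 120, 80)]
balanced = gray_world_balance(warm_tile)
print(balanced)
```

Gray-world fails on scenes that are legitimately dominated by one color (a brick wall, a lawn), so in practice it would need to be combined with the reference-based approaches mentioned above.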

Sharpness Adjustments

The sharpness of street view panoramas can be enhanced using algorithms designed to amplify edges and details within the image. This often involves increasing the contrast between different parts of the image. Techniques such as high-pass filtering and unsharp masking can effectively sharpen details and increase the overall clarity of the image. These methods can be particularly helpful in bringing out fine details that might otherwise be lost in the image.

For example, applying high-pass filtering to an image enhances the contrast between edges, making fine details more pronounced and the image appear sharper.
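Unsharp masking can be sketched in a few lines: blur the signal, subtract the blur from the original to get the high-frequency detail, and add a scaled copy of that detail back. The example below works on one scanline and uses a box blur for brevity; the `amount` and `radius` parameters are illustrative assumptions.

```python
def box_blur(row, radius=1):
    """Average each pixel with its neighbors, replicating edge pixels."""
    out = []
    for i in range(len(row)):
        window = [row[min(max(j, 0), len(row) - 1)]
                  for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def unsharp_mask(row, amount=1.0, radius=1):
    """sharpened = original + amount * (original - blurred), clipped to 0..255."""
    blurred = box_blur(row, radius)
    return [min(255.0, max(0.0, p + amount * (p - b)))
            for p, b in zip(row, blurred)]


# A soft step edge from dark (50) to bright (150).
edge = [50, 50, 50, 150, 150, 150]
sharpened = unsharp_mask(edge)
print(sharpened)
```

The pixels on either side of the edge are pushed apart (the dark side gets darker, the bright side brighter), while flat regions are left unchanged. Larger `amount` values exaggerate this and can produce visible halos.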

Challenges of Applying Techniques to Large-Scale Datasets

Working with large-scale panorama datasets presents significant computational challenges. The sheer volume of data necessitates the use of efficient algorithms and parallel processing techniques to ensure timely completion of the enhancement process. The size of the data necessitates careful consideration of memory management and data storage to prevent performance bottlenecks. Furthermore, maintaining the integrity and accuracy of the panorama’s perspective and stitching during the enhancement process requires specialized algorithms to prevent distortion.

Image warping is one example: an enhancement step that shifts or resamples pixels can cause problems in the stitching process if it is applied inconsistently across overlapping images.

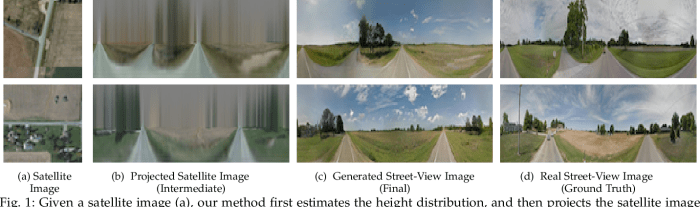

Panorama Stitching and Alignment

Panorama stitching, the process of seamlessly joining multiple overlapping images into a single, expansive view, is crucial for creating high-resolution, immersive street view panoramas. This process, while seemingly straightforward, relies heavily on precise alignment techniques to ensure a visually appealing and accurate representation of the scene. Errors in alignment can lead to noticeable distortions, seams, or even complete image rejection.

Robust alignment algorithms are therefore essential for generating compelling and useful street view panoramas.

Accurate alignment is paramount in panorama stitching. Slight misalignments, even those imperceptible to the naked eye, can manifest as visible seams or distortions in the final panorama. These imperfections detract from the immersive experience and can compromise the reliability of the data captured in the panorama.

Precise alignment algorithms are designed to minimize these errors, producing a cohesive and visually appealing result.

Image Feature Detection and Matching

Panorama stitching hinges on the ability to precisely match corresponding features across the overlapping images. This process begins with detecting prominent features, such as corners, edges, or texture patterns, within each image. Algorithms employing techniques like Scale-Invariant Feature Transform (SIFT) or Speeded Up Robust Features (SURF) are commonly used for this task. These algorithms extract features that are robust to changes in scale, rotation, and illumination, enabling accurate matching across images.
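Once descriptors have been extracted, matching is often done with a nearest-neighbor search followed by a ratio test, which rejects matches whose best candidate is not clearly better than the second best. The sketch below assumes descriptors are plain tuples of floats and uses a ratio threshold of 0.75; both are illustrative assumptions, and real SIFT or SURF descriptors are much longer vectors.

```python
import math


def match_features(desc_a, desc_b, ratio=0.75):
    """Return (index_a, index_b) pairs that pass a nearest-neighbor ratio test."""
    matches = []
    for i, da in enumerate(desc_a):
        # Rank every descriptor in image B by Euclidean distance to `da`.
        ranked = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(ranked) < 2:
            continue
        (best, j), (second, _) = ranked[0], ranked[1]
        # Keep the match only if it is clearly better than the runner-up.
        if best < ratio * second:
            matches.append((i, j))
    return matches


# Toy 3-dimensional descriptors for two overlapping images.
desc_a = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.5, 0.5, 0.0)]
desc_b = [(0.0, 0.9, 0.1), (0.9, 0.1, 0.0), (0.0, 0.0, 1.0)]
matches = match_features(desc_a, desc_b)
print(matches)
```

The third descriptor in `desc_a` lies roughly between two candidates in `desc_b`, so it is discarded as ambiguous rather than matched to the marginally closer one.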

Transformation Estimation

After identifying matching features, the next crucial step is to estimate the transformation between the images. This transformation, typically represented as a homography matrix, describes the geometric relationship between the two images, including translation, rotation, scaling, and perspective change. The accuracy of this transformation directly impacts the quality of the stitched panorama. Various techniques exist for estimating this transformation, often involving least-squares optimization or robust estimators to mitigate the influence of outliers.

These techniques consider the spatial relationship between matching features to establish a precise transformation.
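The interplay between a robust estimator and a least-squares refinement can be shown with a deliberately simplified model. The sketch below estimates only a 2-D translation, not a full homography, using a RANSAC-style loop: pick one match at random as a hypothesis, count how many other matches agree with it, and finally average the offsets of the largest consensus set (which is the least-squares solution for a pure translation). The tolerance, iteration count, and point coordinates are assumptions for illustration.

```python
import math
import random


def estimate_translation(matches, tol=3.0, iterations=50, seed=0):
    """Estimate (dx, dy) from point matches while ignoring outliers.

    `matches` is a list of ((ax, ay), (bx, by)) point pairs.
    Returns ((dx, dy), number_of_inliers).
    """
    rng = random.Random(seed)
    offsets = [(bx - ax, by - ay) for (ax, ay), (bx, by) in matches]
    best_inliers = []
    for _ in range(iterations):
        dx, dy = rng.choice(offsets)          # hypothesis from one sample
        inliers = [o for o in offsets
                   if math.hypot(o[0] - dx, o[1] - dy) <= tol]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    n = len(best_inliers)
    # Least-squares fit of a translation is the mean of the inlier offsets.
    mean_dx = sum(o[0] for o in best_inliers) / n
    mean_dy = sum(o[1] for o in best_inliers) / n
    return (mean_dx, mean_dy), n


matches = [
    ((10, 10), (110, 15)), ((50, 20), (151, 25)), ((80, 40), (179, 44)),
    ((120, 15), (220, 21)), ((30, 70), (130, 75)), ((60, 60), (160, 65)),
    ((90, 90), (130, 30)),   # outlier: wrong feature match
    ((20, 30), (0, 110)),    # outlier: wrong feature match
]
(dx, dy), inlier_count = estimate_translation(matches)
print(dx, dy, inlier_count)
```

A homography has eight degrees of freedom and needs at least four matches per hypothesis, but the structure of the loop (sample, score by consensus, refit on inliers) stays the same.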

Image Warping and Blending

Once the transformation is established, the images need to be warped to align with each other. This involves resampling pixels from the source images to their corresponding positions in the target image. This step ensures that the features from each image seamlessly overlap. The crucial aspect here is the use of interpolation techniques. High-quality interpolation methods ensure smooth transitions and minimize artifacts at the seams.

After warping, blending techniques, such as linear blending or more advanced methods like Poisson blending, are applied to smoothly combine the warped images, effectively eliminating the visible seams.
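Linear blending, sometimes called feathering, can be sketched on a single row of pixels: inside the overlap the weight of the right-hand image ramps up while the weight of the left-hand image ramps down. The function name and the exact ramp are assumptions for illustration; Poisson blending is considerably more involved and is not shown here.

```python
def feather_blend(left, right, overlap):
    """Join two rows whose last/first `overlap` pixels show the same scene."""
    if not 0 < overlap <= min(len(left), len(right)):
        raise ValueError("overlap must be between 1 and the shorter row length")
    blended = []
    for k in range(overlap):
        w = (k + 1) / (overlap + 1)            # weight of the right image
        l_val = left[len(left) - overlap + k]
        r_val = right[k]
        blended.append((1 - w) * l_val + w * r_val)
    return left[:-overlap] + blended + right[overlap:]


# Two exposures of the same wall: the right image is slightly brighter.
left_row = [100, 100, 100, 100, 100]
right_row = [140, 140, 140, 140, 140]
stitched_row = feather_blend(left_row, right_row, overlap=3)
print(stitched_row)
```

Instead of a hard jump from 100 to 140 at the seam, the brightness changes gradually across the overlap, which is what hides the boundary in the final panorama.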

Common Errors and Solutions in Panorama Stitching

- Incorrect Feature Matching: Inaccurate feature matching can lead to misalignment, resulting in visible seams and distortions. Using robust feature detectors and matching algorithms can help mitigate this. Employing more sophisticated matching techniques, such as considering the context of features, or utilizing multiple feature detectors, is crucial for accuracy.

- Insufficient Overlap: Insufficient overlap between images can make accurate alignment challenging. This can result in incomplete or distorted panoramas. Optimizing the camera’s position and orientation to capture sufficient overlap is essential.

- Noise and Distortion: Noise in the images or lens distortion can affect the accuracy of feature detection and matching, leading to inaccuracies in the stitching process. Robust image preprocessing techniques, such as noise reduction and distortion correction, can address this issue.

- Geometric Errors: Errors in the estimated geometric transformation (e.g., homography) can lead to significant distortions in the stitched panorama. Refining the alignment process by using more advanced techniques, such as utilizing multiple image pairs for triangulation or incorporating additional constraints, is crucial.

Data Handling and Management in Panorama Editing

Managing massive datasets of street view panorama images requires sophisticated strategies for storage, compression, and efficient access. This is crucial for both the computational resources needed for processing and the sheer volume of data involved. Effective data management is essential to make panorama editing and analysis practical and scalable.

Panorama editing tools often deal with petabytes of image data, making traditional file management systems inadequate.

Robust solutions are needed to store, access, and process these images without compromising performance.

Storage Methods for Large Panorama Collections

Handling vast collections of street view panoramas necessitates specialized storage solutions. Distributed file systems, such as Hadoop Distributed File System (HDFS), are well-suited for storing and managing the enormous quantities of data. These systems distribute data across multiple servers, enhancing storage capacity and enabling parallel processing. Cloud storage services also provide scalable and cost-effective solutions, offering the flexibility to adjust storage capacity based on demand.

Furthermore, storage formats designed for high-resolution images, such as JPEG 2000, can be advantageous.

Data Compression and Optimization

Minimizing storage space and processing time is vital for large-scale panorama editing. Image compression techniques play a significant role in achieving this. Lossy compression algorithms, like JPEG, are frequently used for reducing file size, although some image quality may be sacrificed. Lossless compression methods, such as PNG for images or general-purpose archive formats like ZIP, preserve the original data exactly but might not offer the same level of reduction in file size.

The choice of compression algorithm depends on the acceptable trade-off between image quality and storage space. For instance, in scenarios where minor quality degradation is acceptable, JPEG compression can yield substantial savings. Conversely, for archival purposes or when absolute image fidelity is critical, lossless compression is preferred.
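The trade-off can be illustrated without any image library. In the sketch below, `zlib` stands in for a lossless codec (it is the same DEFLATE algorithm that PNG and ZIP use), and a crude quantization step stands in for lossy coding. This is not how JPEG works internally; it only shows that discarding precision makes data more compressible at the cost of an unrecoverable error. The synthetic byte pattern is an assumption chosen for the example.

```python
import zlib


def lossless_roundtrip(raw):
    """Compress and decompress; returns (compressed_size, restored_bytes)."""
    packed = zlib.compress(raw, 9)
    return len(packed), zlib.decompress(packed)


def lossy_quantize(raw, step=16):
    """Crude lossy stand-in: snap every byte down to a multiple of `step`."""
    return bytes((v // step) * step for v in raw)


# Synthetic "scanlines": a gradient with small pseudo-random variation.
raw = bytes((3 * x + (x * 7919) % 5) % 256 for x in range(4096))

packed_size, restored = lossless_roundtrip(raw)
lossy = lossy_quantize(raw)
lossy_size, _ = lossless_roundtrip(lossy)

print("raw bytes:", len(raw))
print("lossless compressed:", packed_size)
print("quantized then compressed:", lossy_size)
print("max per-byte error after quantization:",
      max(abs(a - b) for a, b in zip(raw, lossy)))
```

The lossless path always restores the input exactly, whereas the quantized data can never be turned back into the original values, which is the property that matters for archival use.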

Efficient Access and Processing of Large Datasets

Efficient access and processing of massive panorama datasets are critical for rapid analysis and editing. Techniques like database indexing, optimized data structures, and parallel processing are instrumental in improving access times. Databases designed to handle geospatial data can be especially useful for querying and retrieving panoramas based on location or other criteria. Furthermore, using specialized image processing libraries or frameworks, which are optimized for handling large datasets, accelerates the processing of these massive panoramas.

For example, libraries like OpenCV can be employed to expedite tasks like stitching and alignment.
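Location-based retrieval can be sketched with a simple uniform grid index: each panorama is filed under the grid cell that contains its coordinates, so a bounding-box query only inspects the cells it overlaps instead of scanning every record. The class name, cell size, panorama IDs, and coordinates below are all hypothetical, and a production geospatial database would use a more sophisticated structure.

```python
import math
from collections import defaultdict


class GridIndex:
    """Minimal spatial index over (latitude, longitude) in degrees."""

    def __init__(self, cell_deg=0.01):
        self.cell_deg = cell_deg
        self.cells = defaultdict(list)

    def _key(self, lat, lon):
        return (math.floor(lat / self.cell_deg),
                math.floor(lon / self.cell_deg))

    def add(self, pano_id, lat, lon):
        self.cells[self._key(lat, lon)].append((pano_id, lat, lon))

    def query_box(self, lat_min, lat_max, lon_min, lon_max):
        """Return sorted IDs inside the box (no antimeridian handling)."""
        r0, c0 = self._key(lat_min, lon_min)
        r1, c1 = self._key(lat_max, lon_max)
        hits = []
        for r in range(r0, r1 + 1):
            for c in range(c0, c1 + 1):
                for pano_id, lat, lon in self.cells.get((r, c), []):
                    if lat_min <= lat <= lat_max and lon_min <= lon <= lon_max:
                        hits.append(pano_id)
        return sorted(hits)


index = GridIndex()
index.add("pano_a", 37.4220, -122.0841)
index.add("pano_b", 37.4225, -122.0850)
index.add("pano_c", 40.7580, -73.9855)
nearby = index.query_box(37.42, 37.43, -122.09, -122.08)
print(nearby)
```

The query touches only a handful of cells regardless of how many panoramas are stored elsewhere, which is the property that makes indexing worthwhile at scale.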

Example of a Data Management Workflow

A common workflow involves storing panorama images in a distributed file system like HDFS. These images are then processed in batches using specialized image processing libraries like OpenCV, which are optimized for handling large datasets. Results, including edited panoramas, are saved back into the same storage system. This structured workflow facilitates efficient management and retrieval of the processed data.
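The shape of such a workflow can be sketched with standard-library tools. In the example below a plain dictionary stands in for the distributed file system, a contrast stretch stands in for the real editing step, and a thread pool stands in for a cluster scheduler. The `raw/` and `edited/` key prefixes and all function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def batched(keys, size):
    """Yield consecutive slices of `keys` with at most `size` items each."""
    for start in range(0, len(keys), size):
        yield keys[start:start + size]


def enhance(pixels):
    """Placeholder edit: stretch values to the full 0..255 range."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        return list(pixels)
    return [round((p - lo) * 255 / (hi - lo)) for p in pixels]


def run_workflow(store, batch_size=2, workers=2):
    """Read raw panoramas in batches, process in parallel, write results back."""
    raw_keys = sorted(k for k in store if k.startswith("raw/"))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for batch in batched(raw_keys, batch_size):
            results = list(pool.map(lambda k: enhance(store[k]), batch))
            for key, result in zip(batch, results):
                store["edited/" + key[len("raw/"):]] = result
    return store


store = {
    "raw/p1": [50, 90, 150],
    "raw/p2": [0, 5, 20],
    "raw/p3": [7, 7, 7],
}
run_workflow(store)
print(sorted(store))
```

Writing results under a separate prefix keeps the originals intact, so an edit can be re-run or audited later without re-capturing the imagery.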

Object Detection and Recognition in Panoramic Images

Panorama images, encompassing a wide field of view, present unique challenges for object detection and recognition compared to standard images. Extracting meaningful information from the expansive datasets requires robust techniques capable of handling the inherent distortions and complexities of panoramic stitching. Machine learning algorithms play a crucial role in achieving this, enabling the identification and classification of objects within these expansive visual landscapes.

Machine Learning for Object Detection in Panoramic Images

Machine learning models, particularly deep convolutional neural networks (CNNs), excel at learning intricate patterns and features within images. Applying these models to panoramic images involves careful consideration of the image’s unique characteristics. Pre-processing steps, such as correcting for geometric distortions introduced during stitching, are often necessary before feeding the data to the model. This pre-processing ensures the model learns accurate representations of objects, regardless of their position within the panoramic view.

Identifying and Classifying Objects in Panorama Images

Identifying and classifying objects in panoramic images requires a multi-step approach. Firstly, the panorama must be segmented into smaller, manageable regions. This segmentation allows for localized analysis of object presence and characteristics within each region. Secondly, robust object detection algorithms are employed within each segmented region. The output from these algorithms provides a list of potential object locations and associated bounding boxes.

Finally, a classification process, often based on the features extracted from the bounding box regions, determines the type of each detected object. This classification can be refined by considering contextual information from neighboring regions within the panorama. For example, recognizing a “traffic light” requires analyzing the surrounding environment to ensure the object is indeed a traffic light and not a similar-looking object in a different context.
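The first step, splitting the panorama into regions, has one detail specific to 360-degree images: the left and right edges are adjacent in the real scene, so an object can straddle the seam. The sketch below generates overlapping horizontal tiles that wrap around the seam and maps a detection's tile-local x coordinate back to panorama coordinates. The tile size and overlap are illustrative assumptions, and the detector itself is out of scope.

```python
def tile_spans(width, tile, overlap):
    """Return (start, end) column spans covering a 360-degree panorama.

    `end` may exceed `width` for the last tile; those columns wrap around
    to the left edge via modulo arithmetic.
    """
    step = tile - overlap
    if step <= 0:
        raise ValueError("overlap must be smaller than the tile width")
    return [(start, start + tile) for start in range(0, width, step)]


def tile_columns(start, end, width):
    """Panorama column indices for one tile, wrapping at the seam."""
    return [c % width for c in range(start, end)]


def to_panorama_x(tile_start, local_x, width):
    """Convert an x coordinate inside a tile to panorama coordinates."""
    return (tile_start + local_x) % width


spans = tile_spans(width=1000, tile=400, overlap=100)
print(spans)

# A detection found 150 px into the last tile actually sits near the left edge.
print(to_panorama_x(tile_start=900, local_x=150, width=1000))
```

Because neighboring tiles overlap, the same object may be detected twice; a later merging step (for example, suppressing boxes that overlap heavily) would be needed before classification results are combined.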

Applying Object Recognition to Street View Data

Applying object recognition to street view data offers significant advantages. Accurate detection of traffic signs, pedestrians, vehicles, and cyclists can improve traffic management systems, urban planning, and safety monitoring. For instance, automated analysis of street view panoramas can identify areas with high pedestrian traffic, which can inform the placement of pedestrian crossings or the design of safer sidewalks.

Similarly, detecting parked vehicles can aid in traffic flow analysis and parking space management. The ability to automatically count and classify objects like bicycles or cars can support urban planning initiatives and infrastructure development.

Object Recognition Algorithms for Panorama Images

Various algorithms are employed for object recognition in panoramic images. Choosing the appropriate algorithm depends on factors like the specific objects to be recognized, the desired accuracy, and the computational resources available. The table below outlines some commonly used algorithms.

| Algorithm | Description | Strengths | Weaknesses |
|---|---|---|---|
| Region-based Convolutional Neural Networks (R-CNN) | Detects objects by proposing regions of interest and classifying them. | Effective for a wide range of objects; relatively accurate. | Computationally intensive; may struggle with complex or highly overlapping objects. |
| You Only Look Once (YOLO) | Predicts bounding boxes and class probabilities directly from the input image. | Fast and efficient; suitable for real-time applications. | Less accurate than R-CNN-based methods in some cases; may have difficulty with small objects. |
| Single Shot MultiBox Detector (SSD) | Combines aspects of R-CNN and YOLO, offering a balance between speed and accuracy. | Faster than R-CNN while maintaining reasonable accuracy; handles various object scales effectively. | Performance may vary depending on the specific implementation and dataset. |

User Experience Considerations for Panorama Editing

Designing intuitive and user-friendly panorama editing tools is crucial for widespread adoption. A well-designed interface can significantly reduce the learning curve, allowing users to quickly grasp the editing features and achieve desired results. This section explores key user experience considerations for creating effective panorama editing software.

User Interface Design for Panorama Editing Tools

A user-friendly interface is paramount for panorama editing tools. The design should prioritize clarity, simplicity, and efficiency. Users should be able to quickly locate and utilize the available editing features. Visual cues and clear labeling are essential to guide users through the process. Consider incorporating interactive elements like tooltips and contextual help, which provide users with information on how to use specific features.

The interface should also adapt to different screen sizes and devices, ensuring a consistent experience across various platforms.

Design Principles for Intuitive and User-Friendly Panorama Editing

Several design principles contribute to intuitive panorama editing. A consistent layout and color scheme across different tools and functionalities foster familiarity and ease of use. Clear visual hierarchies and intuitive navigation help users locate desired features. The layout should be adaptable to accommodate different screen sizes, providing a seamless user experience on desktops, tablets, and smartphones. The overall design should emphasize efficiency, allowing users to accomplish tasks with minimal steps.

Feedback mechanisms, such as visual indicators and clear notifications, are essential for confirming actions and providing timely updates.

Accessibility and Usability in Panorama Editing

Accessibility is vital for inclusive design. Panorama editing software should be usable by individuals with diverse abilities. This involves designing the interface with support for screen readers, keyboard navigation, and alternative input methods. Consider providing options for adjusting font sizes, colors, and contrast levels. The interface should also be optimized for users with motor impairments, offering support for assistive technologies.

Comprehensive usability testing with diverse user groups is critical to identify and address potential accessibility issues. Thorough testing ensures that the interface works effectively for all users.

User Interface Elements for Panorama Editing Software

This section outlines key interface elements for panorama editing software.

| Element | Description | Function | Example |
|---|---|---|---|
| Panorama Display | A visual representation of the entire panorama image. | Displays the stitched image for editing. | A large, scrollable view of the panorama, allowing for precise adjustments. |
| Tool Panel | A collection of tools for image manipulation. | Provides options for adjustments, such as brightness, contrast, and saturation. | A set of buttons and sliders for manipulating the image. |
| Navigation Controls | Buttons or gestures for zooming, panning, and rotating the panorama. | Allows users to navigate and select specific areas within the panorama. | Zoom in/out buttons, pan arrows, and rotate handles. |
| Adjustment Sliders | Sliders for adjusting various image parameters. | Allows precise control over image adjustments, like brightness, contrast, and saturation. | Sliders for brightness, contrast, saturation, and sharpness. |
| Object Selection Tools | Tools for selecting and manipulating objects within the panorama. | Enables targeted editing of specific parts of the panorama. | Selection tools like lasso, polygon, or magic wand. |

Ethical Implications of Panorama Photo Editing

Street view panoramas, with their comprehensive perspective, offer valuable insights into urban landscapes and everyday life. However, the ability to manipulate these images raises significant ethical concerns. Careful consideration of these implications is crucial for maintaining public trust and ensuring the responsible use of this powerful technology.

Panorama editing, while offering potential benefits like enhancing visibility or removing unwanted elements, carries the risk of distorting reality and impacting public perception.

Maintaining accuracy and transparency is paramount to ensuring the trustworthiness of these visual records.

Potential for Misrepresentation

The ease with which panoramas can be edited presents a risk of misrepresenting the truth. For example, removing a building from a historical street view panorama could alter the historical record, potentially obscuring significant architectural changes or even masking the presence of a previously documented structure. This alteration of the visual record can have significant implications for urban planning, historical research, and community understanding.

Impact on Public Perception

Panorama editing can subtly influence public perception of locations and communities. By altering the visual representation of a neighborhood, for instance, editors might inadvertently reinforce or challenge existing stereotypes. A manipulated image might downplay the presence of poverty or crime, or conversely, overemphasize them.

Importance of Transparency and Accuracy

Maintaining transparency and accuracy in panorama editing is essential for upholding the integrity of the data. Users should be clearly informed when an image has been edited, and the nature of the edits should be documented and easily accessible. This transparency allows users to critically evaluate the presented information and understand any potential biases or distortions. Furthermore, accurate representations are vital for preserving the historical and social context of the locations depicted.

Guidelines for Responsible Panorama Photo Editing Practices

Responsible panorama photo editing requires a commitment to ethical considerations. These guidelines should be implemented to ensure trustworthiness and accuracy:

- Explicit Documentation of Edits: Every alteration to a panorama should be meticulously documented, including the reason for the change and the specific areas modified. This documentation should be readily accessible to users, enabling them to critically assess the data.

- Clear Indication of Modifications: A visual or textual indication of any editing should be incorporated into the final panorama. This allows users to understand the extent and nature of alterations. This could include watermarks, annotations, or overlaid information.

- Review and Validation Processes: Independent review and validation procedures should be implemented to assess the accuracy and integrity of edited panoramas. This process should involve experts in the field and stakeholders from the affected communities.
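One way the documentation guideline above could be made concrete is a small machine-readable record stored alongside each edited panorama. The sketch below is a hypothetical format, not an existing standard: it captures the reason for the edit, the regions that were modified, and content hashes of the image before and after, so a reviewer can confirm which files the record refers to.

```python
import hashlib
import json


def make_edit_record(pano_id, original, edited, reason, regions):
    """Build a JSON-serializable record describing one edit.

    `original` and `edited` are the raw image bytes; `regions` is a list of
    dicts with pixel rectangles (x, y, w, h) that were modified.
    """
    return {
        "pano_id": pano_id,
        "reason": reason,
        "regions": regions,
        "original_sha256": hashlib.sha256(original).hexdigest(),
        "edited_sha256": hashlib.sha256(edited).hexdigest(),
    }


def matches_record(record, edited_bytes):
    """Check that a published image is the one the record describes."""
    return hashlib.sha256(edited_bytes).hexdigest() == record["edited_sha256"]


original = b"placeholder bytes for the captured panorama"
edited = b"placeholder bytes for the panorama after editing"
record = make_edit_record(
    "pano_0001", original, edited,
    reason="removed temporary obstruction",
    regions=[{"x": 120, "y": 40, "w": 32, "h": 32}],
)
print(json.dumps(record, indent=2))
```

Because the record is plain JSON, it can be exposed to users next to the panorama and consumed by the review and validation process described in the guidelines.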

Preserving Historical Context

Panoramas offer a unique historical perspective. When editing, preserving the historical context is paramount. Removing or altering elements that provide insights into past developments, societal changes, or architectural styles can diminish the value of the visual record.

Avoiding Bias in Editing

Careful consideration of potential biases is crucial. Edit decisions should be based on objective criteria and not influenced by personal opinions or preconceived notions. This includes avoiding the manipulation of panoramas to reinforce or challenge pre-existing stereotypes about specific communities or regions. Editors should strive to present an unbiased and accurate representation of the location.

Future Trends and Research Directions

Panorama photo editing is rapidly evolving, driven by advancements in AI and computational power. The increasing demand for high-quality, immersive imagery across various applications, from virtual tourism to augmented reality, necessitates innovative solutions for manipulating and enhancing panoramic views. This section explores the emerging trends and potential future research directions in this field.

Emerging Trends in Panorama Photo Editing

The field is witnessing a shift towards automated and intelligent solutions for panorama editing. Real-time processing, enhanced user interfaces, and integration with other technologies are key trends. Users increasingly desire streamlined workflows and intuitive tools for manipulating panoramic images.

Potential Future Research Directions

Several research directions hold significant potential for improving panorama photo editing. These include developing more sophisticated AI models for automatic panorama enhancement, investigating novel algorithms for seamless panorama stitching and alignment, and exploring the application of deep learning for object detection and recognition in panoramic images.

Advancements in Technology and Their Impact

The expected advancements in hardware and software will have a profound impact on panorama editing. Faster processors and more efficient algorithms will enable real-time panorama processing, allowing users to interactively manipulate images without noticeable delays. Improved image sensors and camera technologies will capture higher resolution and dynamic range panoramic images, requiring more sophisticated editing tools.

Role of AI in Shaping the Future of Panorama Photo Editing

AI will play a pivotal role in the future of panorama photo editing. AI-powered tools can automatically enhance the visual quality of panoramas by improving color balance, contrast, and sharpness. They can also identify and remove unwanted elements, such as noise or distortions, and perform complex manipulations such as object replacement and background modification. Furthermore, AI can facilitate the creation of personalized panorama experiences by tailoring edits to individual preferences.

For example, AI could automatically adjust the lighting and colors of a panorama to match the user’s desired mood or style.

Conclusion

In conclusion, Google AI Research’s approach to street view panorama photo editing combines image enhancement algorithms, panorama stitching techniques, and attention to the user experience. Ethical considerations and the research directions outlined above will continue to shape the development of this field.