This guide explains Docker, Kubernetes, and containers. Containers package an application and its dependencies into a lightweight, isolated unit that shares the host kernel rather than running a full guest operating system as a virtual machine does, which simplifies deployment and management. Kubernetes, a container orchestration platform, automates the deployment, scaling, and management of containerized applications across clusters of machines. The sections below cover the fundamental concepts of each, along with their individual roles, benefits, and integration.

We’ll start with a clear explanation of what Docker, Kubernetes, and containers are, and how they relate. This will be followed by a detailed look at Docker’s image building process, Dockerfile syntax, and networking. Then, we’ll examine Kubernetes’ core components and functionalities, including the control plane, deployment management, and scaling. We’ll explore how Docker and Kubernetes work together, covering deployment strategies and comparisons with other orchestration tools.

Practical examples, such as a web server deployment, show these technologies in use. We’ll wrap up with security considerations and real-world use cases.

Introduction to Docker, Kubernetes, and Containers

Containers are revolutionizing software development and deployment. They provide a lightweight, portable, and consistent environment for applications, enabling faster development cycles and improved scalability. Docker and Kubernetes are two key technologies driving this container revolution. Docker simplifies the creation and management of containers, while Kubernetes orchestrates and manages them at scale, providing a powerful platform for deploying and scaling applications in production environments.

Docker, Kubernetes, and containers are interconnected, each playing a distinct role in the modern software stack.

Containers encapsulate applications and their dependencies, Docker simplifies the process of creating and managing those containers, and Kubernetes orchestrates and manages large numbers of containers across a cluster of machines. This allows developers to focus on building applications without worrying about the underlying infrastructure.

Understanding Containers

Containers are lightweight, isolated environments that package an application and its dependencies. They share the host operating system’s kernel, whereas each virtual machine runs its own guest operating system on virtualized hardware. As a result, containers typically use fewer resources and start faster than virtual machines. The isolated nature of containers helps prevent conflicts between applications running on the same host.

This isolation is critical for maintaining application stability and reliability in complex, multi-application environments.

Benefits of Containerization

Containerization offers several advantages over traditional methods of deployment. Sharing the host operating system kernel reduces overhead, so more workloads fit on the same hardware. Portability is another key benefit: an application running in a container can be moved between environments without modification, as long as the target host provides a compatible kernel and CPU architecture. Because the image carries its own dependencies, the application behaves consistently across environments, which is crucial for maintaining a reliable application experience.

Moreover, the isolated nature of containers fosters a more stable and reliable environment, preventing conflicts between applications and improving security.

Comparing Docker, Kubernetes, and Containers

| Feature | Docker | Kubernetes | Containers |
|---|---|---|---|
| Definition | A platform for creating, managing, and deploying containerized applications. | An orchestration platform for managing and scaling containerized applications. | Lightweight, isolated environments encapsulating applications and their dependencies. |
| Role | Creates and manages individual containers. | Orchestrates and manages clusters of containers. | Run applications in isolated environments. |
| Focus | Containerization of applications | Scaling, deployment, and management of containerized applications across multiple servers. | Encapsulation of application dependencies |
| Analogy | A standardized shipping container for moving goods. | A logistics company managing a large fleet of shipping containers. | The goods being shipped in the container. |

The table above highlights the key distinctions between Docker, Kubernetes, and containers. Docker provides the fundamental building blocks (containers), Kubernetes manages the complex deployment and scaling of these containers, and containers themselves encapsulate the applications and their dependencies. This separation of concerns allows for efficient development and deployment of applications at scale.

Docker vs. Virtual Machines

A key difference between containers and virtual machines lies in their resource utilization. Virtual machines create a complete virtualized environment, including their own operating system, while containers share the host operating system kernel. This shared kernel results in a significant reduction in resource consumption. A simple analogy is that containers are like shipping containers stacked on a single truck (the host OS), while virtual machines are like separate trucks for each application.

This leads to significant efficiency gains and cost savings in terms of hardware requirements.

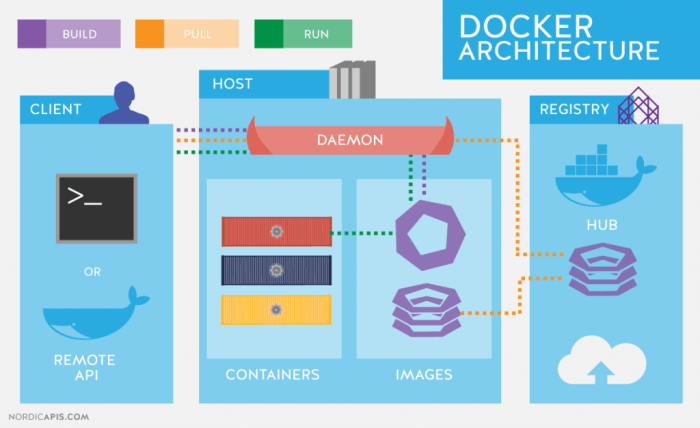

Docker Fundamentals

Docker empowers developers to package applications and their dependencies into lightweight, portable containers. This allows for consistent execution across various environments, from development to production. This section delves into the core concepts of Docker, focusing on image building, storage, and networking.

Understanding Docker images is crucial for effectively utilizing Docker. Images are read-only templates that define the application environment. They are built from layers, enabling efficient storage and update management.

Docker Image Building Process

The Docker image building process creates a layered structure, where each layer records the filesystem changes made by one Dockerfile instruction on top of the previous layer. This layering allows Docker to efficiently manage image updates and reduce storage overhead.

- Base Image: The foundation of the image, often a pre-built Linux distribution (like Alpine Linux or Ubuntu). It provides the core operating system and essential libraries.

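- Layer-by-Layer Construction: Instructions in the Dockerfile that change the filesystem, such as installing packages or copying files, each add a new layer to the image. Instructions such as setting environment variables only record metadata. This incremental process is crucial for efficient image creation and management.

- Caching and Optimization: Docker leverages caching to significantly speed up the build process. If an instruction and everything before it are unchanged since the last build, Docker reuses the cached layer and avoids redundant work. Once one step changes, that step and every step after it are rebuilt, so instructions that change often are best placed late in the Dockerfile.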

- Read-Only Layers: Docker images are immutable. Layers are read-only, ensuring data consistency and preventing accidental modification. Changes are applied on top as new layers.
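
The caching behaviour described above can be pictured with a small Python model. This is only a sketch under simplifying assumptions: the key is derived from the instruction text and the parent key, whereas a real build also takes the contents of copied files into account. The function names `layer_keys` and `build` are made up for illustration.

```python
import hashlib


def layer_keys(instructions):
    """Return one cache key per instruction; each key also depends on every earlier step."""
    keys = []
    parent = ""
    for instruction in instructions:
        digest = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(digest)
        parent = digest
    return keys


def build(instructions, cache):
    """Simulate a build and report, per step, whether the layer was cached or built."""
    results = []
    for instruction, key in zip(instructions, layer_keys(instructions)):
        if key in cache:
            results.append((instruction, "cached"))
        else:
            cache.add(key)
            results.append((instruction, "built"))
    return results


cache = set()
first = [
    "FROM ubuntu:22.04",
    "RUN apt-get update && apt-get install -y nginx",
    "COPY . /usr/share/nginx/html",
]
build(first, cache)

# Only the last instruction changes, so the first two layers are reused.
second = first[:2] + ["COPY ./site /usr/share/nginx/html"]
for instruction, status in build(second, cache):
    print(f"{status:7} {instruction}")
```

Changing the first instruction instead would change every later key, which is why a modified early step forces the rest of the image to be rebuilt.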

Dockerfile Syntax

The Dockerfile is a text document containing instructions to assemble a Docker image. It defines the steps to build an image, from the base image to the final application configuration. The most common instructions are:

- FROM: Specifies the base image. For example, `FROM ubuntu:latest`. The `latest` tag is the default tag used when none is given; it does not necessarily point to the newest release, so pinning a specific version tag gives more reproducible builds.

- RUN: Executes commands at build time and saves the result as a new image layer. For example, `RUN apt-get update && apt-get install -y nginx`.

- COPY: Copies files from the build context on the host into the image. For example, `COPY . /usr/share/nginx/html`.

- CMD: Specifies the default command to run when the container starts. For example, `CMD ["nginx", "-g", "daemon off;"]`.

Creating a Docker Image from a Simple Application

A simple web server application can demonstrate Docker image creation. Consider an `index.html` file in the current directory.

```dockerfile
FROM nginx:latest
COPY index.html /usr/share/nginx/html
CMD ["nginx", "-g", "daemon off;"]
```

This Dockerfile first pulls the `nginx:latest` image. It then copies the `index.html` file to the web server’s document root. Finally, it runs the Nginx server.

Docker Image Storage Options

Different storage options affect the speed and efficiency of Docker image management.

| Storage Option | Description | Advantages | Disadvantages |
|---|---|---|---|
| Local Storage | Stores images directly on the host machine. | Simple, fast access. | Limited capacity, potential for conflicts. |
| Docker Hub | Centralized repository for public images. | Widely accessible, large selection of images. | Requires internet access for downloads. |
| Private Registries | Custom repositories for storing and sharing images. | Enhanced security, control over image distribution. | Requires setup and management. |

Docker Networking Concepts

Docker networking enables containers to communicate with each other and with the host machine. Understanding these concepts is crucial for designing robust and scalable containerized applications.

- Container Networking Interfaces: Docker provides several networking options, including the default bridge network, host networking, and overlay networks.

- Networking Configurations: These configurations determine how containers connect and exchange data. This includes defining ports, networks, and IP addresses.

- Networking Modes: Different networking modes offer varying levels of isolation and communication capabilities. This enables tailored configurations for specific application requirements.
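
As a rough illustration of how ports are defined, the sketch below parses the publish format accepted by `docker run -p`, which maps a host port to a container port. The helper name `parse_publish` is hypothetical, and the sketch assumes single ports and IPv4 addresses only; port ranges and bracketed IPv6 addresses are not handled.

```python
def parse_publish(spec):
    """Parse '[host_ip:][host_port:]container_port[/protocol]' into its parts."""
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    if len(parts) == 1:
        host_ip, host_port, container_port = "", "", parts[0]
    elif len(parts) == 2:
        host_ip, host_port, container_port = "", parts[0], parts[1]
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported publish spec: {spec!r}")
    return {
        "host_ip": host_ip or None,                          # None: all host interfaces
        "host_port": int(host_port) if host_port else None,  # None: Docker picks a free port
        "container_port": int(container_port),
        "protocol": protocol or "tcp",                       # TCP is the default protocol
    }


for spec in ["8080:80", "127.0.0.1:8080:80", "53:53/udp", "80"]:
    print(spec, "->", parse_publish(spec))
```

Binding to `127.0.0.1` keeps a published port reachable from the host only, which is a common choice during local development.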

Kubernetes Fundamentals

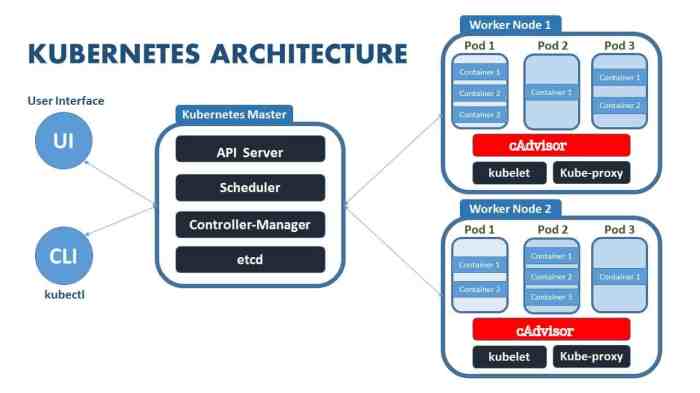

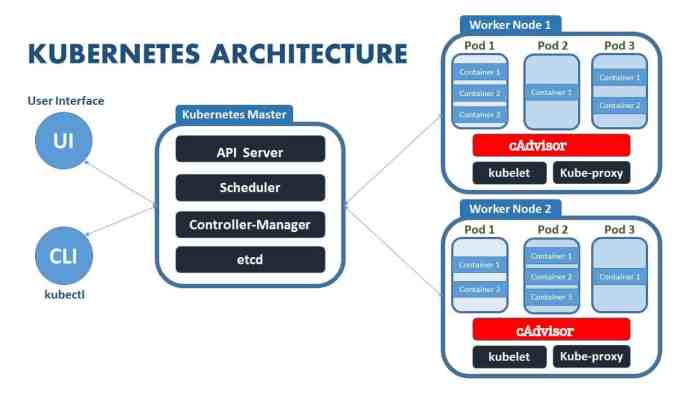

Kubernetes, often abbreviated as K8s, is a powerful platform for automating deployment, scaling, and management of containerized applications. It orchestrates containerized workloads across clusters of machines, providing a robust and efficient environment for modern application development and deployment. Understanding the core components of a Kubernetes cluster is crucial for effectively leveraging its capabilities.

The fundamental components of Kubernetes work together seamlessly to manage containerized applications. This involves handling tasks from deployment to scaling and maintenance, allowing developers to focus on application logic rather than infrastructure complexities. The system’s scalability and fault tolerance are vital features, enabling consistent performance even under increasing demand or unexpected failures.

Core Components of a Kubernetes Cluster

Kubernetes clusters are built upon several key components that work in concert to manage containerized applications. These components include Pods, Deployments, Services, and Nodes.

- Pods: Pods are the smallest deployable units in Kubernetes. A Pod groups one or more containers that share a network namespace and can share storage volumes. If a container in a Pod fails, the kubelet on that node restarts it according to the Pod’s restart policy, which keeps the workload running.

- Deployments: Deployments manage the lifecycle of Pods. They define how many replicas of a Pod should run and automatically replace any failed or unhealthy Pods. This ensures high availability and self-healing capabilities. Deployments are crucial for managing application deployments in a robust and scalable manner.

- Services: Services provide a stable network address for Pods, even when Pods are added or removed. This ensures applications can communicate with each other reliably. Kubernetes Services act as a load balancer and a means to expose the applications running inside the Pods to the outside world.

- Nodes: Nodes are physical or virtual machines that run Pods. They provide the computational resources and storage for containers to run. A Kubernetes cluster typically consists of multiple Nodes, each contributing to the overall capacity and fault tolerance of the system. Nodes form the foundation on which the cluster operates.
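
To make these objects concrete, here is a minimal sketch that builds a Deployment and a Service as Python dictionaries and prints them as JSON, a format `kubectl apply -f` accepts alongside YAML. The application name `web`, the image tag `nginx:1.25`, and the helper functions are assumptions chosen for illustration.

```python
import json


def deployment(name, image, replicas, port):
    """Build an apps/v1 Deployment that keeps `replicas` Pods of `image` running."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def service(name, port, target_port):
    """Build a ClusterIP Service that routes to Pods labelled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "ClusterIP",
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


manifest = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [deployment("web", "nginx:1.25", 3, 80), service("web", 80, 80)],
}
print(json.dumps(manifest, indent=2))
```

The Service finds its Pods through the shared `app` label rather than by Pod name or IP, which is what lets Pods come and go without breaking clients.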

Kubernetes Control Plane

The Kubernetes control plane is the central management system for the cluster. It comprises several components that work together to orchestrate the entire system.

- API Server: The API server is the primary interface for interacting with the Kubernetes cluster. It exposes a RESTful API that allows users and other tools to manage resources and interact with the cluster.

- Scheduler: The scheduler assigns Pods to available Nodes in the cluster based on resource availability and other constraints. This ensures optimal resource utilization and efficient workload distribution.

- Controller Manager: The controller manager runs the controllers that watch resources such as Deployments, ReplicaSets, and Replication Controllers. Each controller compares the observed state of the cluster with the desired state and makes changes to close the gap.
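
The scheduler’s job can be sketched as a filter step followed by a scoring step. The model below is a deliberate simplification and not the actual kube-scheduler algorithm: it considers only CPU and memory requests, and the `Node`, `PodRequest`, and `schedule` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_m: int   # unreserved CPU in millicores
    free_mem_mi: int  # unreserved memory in MiB


@dataclass
class PodRequest:
    name: str
    cpu_m: int
    mem_mi: int


def schedule(pod, nodes):
    """Pick a node for the pod, or return None if no node has room."""
    # Filter: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_m >= pod.cpu_m and n.free_mem_mi >= pod.mem_mi
    ]
    if not feasible:
        return None  # in a real cluster the Pod would stay Pending

    # Score: prefer the node left with the most CPU, then the most memory.
    best = max(
        feasible,
        key=lambda n: (n.free_cpu_m - pod.cpu_m, n.free_mem_mi - pod.mem_mi),
    )
    best.free_cpu_m -= pod.cpu_m
    best.free_mem_mi -= pod.mem_mi
    return best.name


nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096), Node("node-c", 1000, 512)]
print(schedule(PodRequest("web", 250, 600), nodes))
print(schedule(PodRequest("batch", 1800, 1000), nodes))
```

In this run the first Pod fits on two nodes and lands on the one with the most headroom, while the second Pod fits nowhere and is left unscheduled.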

Container Deployment and Scaling

Kubernetes manages container deployments through declarative configurations. Users specify the desired state of their applications, and Kubernetes continuously works to make the cluster match that state. Scaling is achieved by changing the desired number of Pod replicas, either manually or through an autoscaler such as the Horizontal Pod Autoscaler, which adjusts the replica count based on observed metrics like CPU utilization.
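
The declarative model can be pictured as a loop that compares desired and observed state. The sketch below pairs such a comparison with the scaling rule documented for the Horizontal Pod Autoscaler, desired = ceil(current replicas × current metric / target metric). It assumes a single metric and ignores the autoscaler’s tolerance and stabilization behaviour; the function names and replica bounds are illustrative.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Apply the HPA scaling rule, then clamp the result to the configured bounds."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


def reconcile(desired, running_pods):
    """Compare desired and observed replica counts and return the corrective action."""
    running = len(running_pods)
    if running < desired:
        return ("start", desired - running)
    if running > desired:
        return ("stop", running - desired)
    return ("noop", 0)


# Four Pods averaging 90% CPU against a 60% target.
pods = ["web-1", "web-2", "web-3", "web-4"]
target = desired_replicas(len(pods), current_metric=90, target_metric=60)
print(target, reconcile(target, pods))
```

With these numbers the rule asks for six replicas, so the comparison step reports that two more Pods need to be started.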

Kubernetes Networking and Service Discovery

Kubernetes networking provides a robust and flexible network infrastructure for containerized applications. It allows Pods to communicate with each other and with external services. Service discovery mechanisms, such as DNS, enable applications to locate and communicate with other services within the cluster without needing to know their specific IP addresses.
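
As a small illustration of DNS-based discovery, a Service is normally reachable inside the cluster under a name of the form `<service>.<namespace>.svc.<cluster-domain>`. The sketch below assumes the common default cluster domain `cluster.local`, which an administrator can change; the helper names are hypothetical.

```python
def service_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Return the fully qualified in-cluster DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def service_url(service, port, namespace="default", scheme="http"):
    """Build a URL a client Pod could use without knowing any Pod IP address."""
    return f"{scheme}://{service_dns_name(service, namespace)}:{port}"


print(service_dns_name("web"))
print(service_url("orders", 8080, namespace="shop"))
```

Pods in the same namespace can usually use the short name (here just `web`), because the cluster DNS search path fills in the rest.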

Docker and Kubernetes Integration

Docker and Kubernetes are powerful tools for containerization and orchestration, respectively. Their combined use unlocks significant advantages in deploying, scaling, and managing applications. This section delves into the synergy between Docker images and Kubernetes deployments, outlining the deployment process and contrasting approaches to container orchestration. We’ll also analyze the benefits and drawbacks of this integrated approach.

Kubernetes leverages Docker images as the building blocks for its containerized applications. These images contain the necessary code, dependencies, and runtime environments needed to execute an application. Kubernetes orchestrates these images, managing their lifecycle, scaling, and resource allocation.

Docker Images in Kubernetes Deployments

Container images are what Kubernetes ultimately runs. A Kubernetes Deployment defines how many copies of a containerized application should run and how to manage those instances. Pods, the smallest deployable unit in Kubernetes, run one or more containers, each created from an image such as one built with Docker. The Kubernetes scheduler places these Pods on appropriate nodes based on resource availability and constraints.

Deploying a Docker Application to Kubernetes

Deploying a Docker application to Kubernetes involves several key steps. First, you build the Docker image from your application code. Next, you push the image to a container registry, such as Docker Hub, Google Container Registry, or Amazon Elastic Container Registry. Then, you define a Kubernetes deployment manifest that specifies the desired state of your application, including the Docker image name, resources required, and replication strategy. Finally, you apply the deployment manifest to your Kubernetes cluster, and Kubernetes will automatically manage the deployment, scaling, and updates of your application.
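
The steps above can be sketched as a short script that assembles the corresponding `docker` and `kubectl` commands. The image name `registry.example.com/demo/web:1.0.0`, the manifest file `deployment.json`, and the Deployment name `web` are placeholders. The script defaults to a dry run that only prints the commands, since actually running them requires Docker, registry credentials, and access to a cluster.

```python
import subprocess


def deploy_commands(image, manifest_path, deployment_name, context_dir="."):
    """Return the build, push, apply, and verify commands as argument lists."""
    return [
        ["docker", "build", "-t", image, context_dir],
        ["docker", "push", image],
        ["kubectl", "apply", "-f", manifest_path],
        ["kubectl", "rollout", "status", f"deployment/{deployment_name}"],
    ]


def run_all(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for argv in commands:
        print("$", " ".join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)  # stop at the first failing step


run_all(deploy_commands("registry.example.com/demo/web:1.0.0", "deployment.json", "web"))
```

The final `kubectl rollout status` step waits until the new Pods are ready, which makes it a convenient success check at the end of the sequence.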

Comparing Container Orchestration Approaches

Various container orchestration tools exist, each with its own strengths and weaknesses. Docker Swarm is a simpler, more lightweight orchestration system compared to Kubernetes. It’s well-suited for smaller deployments and development environments. Kubernetes, on the other hand, provides more advanced features, including robust scaling, service discovery, and complex networking, making it suitable for larger and more complex deployments.

Kubernetes’s declarative approach also facilitates easier management and reproducibility of deployments.

Docker and Kubernetes Strengths and Weaknesses

| Feature | Docker | Kubernetes |
|---|---|---|
| Image Management | Excellent at creating and managing container images | Manages the entire lifecycle of containerized applications |
| Orchestration | Limited orchestration capabilities | Highly capable container orchestration |
| Scaling | Scaling is possible, but limited without external tools | Manual scaling, or automatic scaling when an autoscaler is configured |
| Resource Management | Per-container CPU and memory limits only | Effective resource management and allocation across the cluster |
| Complexity | Simpler to learn | Steeper learning curve |

Advantages of Docker and Kubernetes Integration

The combination of Docker and Kubernetes provides several advantages:

- Simplified Deployment: Docker images make it easier to package and deploy applications, while Kubernetes handles the complexities of scaling and managing those deployments.

- Enhanced Scalability: With an autoscaler configured, Kubernetes scales applications based on demand, helping maintain performance under varying workloads.

- Improved Resource Utilization: Kubernetes efficiently allocates resources, preventing over-utilization or under-utilization.

- Robust Management: Kubernetes provides robust mechanisms for managing the entire application lifecycle, from deployment to updates and rollbacks.

Disadvantages of Docker and Kubernetes Integration

While powerful, integrating Docker and Kubernetes has potential drawbacks:

- Steep Learning Curve: Both Docker and Kubernetes require a learning curve, which can be a barrier for new users.

- Complexity: Managing complex deployments can be challenging with a large number of interconnected services.

- Resource Requirements: A Kubernetes cluster needs its own control plane and worker nodes, which adds infrastructure and operational cost, especially for small workloads.

Container Orchestration Use Cases

Container orchestration platforms like Docker Swarm and Kubernetes empower developers to manage and deploy containerized applications at scale. This allows for efficient resource utilization, improved application reliability, and enhanced scalability. Moving beyond basic containerization, orchestration automates complex tasks like scaling applications up or down based on demand, ensuring high availability, and facilitating continuous integration and continuous delivery (CI/CD) pipelines. This dynamic approach simplifies the deployment and management of applications, especially in modern cloud-native environments.

Real-World Applications of Container Orchestration

Container orchestration is highly beneficial in various real-world applications. For example, e-commerce platforms can utilize container orchestration to handle peak traffic during shopping seasons. Microservice architectures, where applications are broken down into smaller, independent services, are ideally suited for container orchestration. This modular approach allows for efficient scaling and maintenance of individual services. Furthermore, container orchestration plays a vital role in cloud-based applications where flexibility and scalability are paramount.

Setting Up a Basic Kubernetes Cluster

Setting up a basic Kubernetes cluster for a microservice application involves several key steps. First, choose how to run Kubernetes: a local tool such as Minikube or Docker Desktop for development, or a managed service from a cloud provider. Next, install or provision the cluster and configure it for your needs, such as resource limits and network settings. Then define the application’s deployment configuration, including the Docker images, resources, and desired number of replicas. Finally, deploy the application to the cluster and verify that it functions as expected. Because the configuration is declarative, the same manifests can be reapplied to reproduce or update the deployment.

Improving Application Scalability and Reliability

Container orchestration significantly improves application scalability and reliability. Orchestration tools can scale applications up or down based on demand when autoscaling is configured, which keeps the application responsive during periods of high traffic. They also provide fault tolerance by automatically restarting containers that fail, which minimizes downtime. In addition, container orchestration manages the complex task of distributing applications across multiple nodes in a cluster, thereby enhancing overall application availability and reliability.

Container Orchestration in CI/CD Pipelines

Container orchestration is seamlessly integrated into CI/CD pipelines. Docker images are built and tested automatically within the pipeline. After successful testing, these images are pushed to a container registry, such as Docker Hub or a private registry. Finally, Kubernetes deployments are configured to pull these images and deploy them to the cluster. This automation significantly reduces the time needed to deploy and update applications.

The use of container images within CI/CD pipelines simplifies the process of managing dependencies and ensures consistent deployments across environments.
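
One way a pipeline might tie a build to a deployment is to tag each image with the commit it was built from and write that reference into the Deployment manifest. The sketch below assumes a manifest held as a Python dictionary; the registry host, repository name, and commit SHA are placeholder values, and `image_ref` and `with_image` are hypothetical helpers.

```python
import copy


def image_ref(registry, repository, commit_sha):
    """Build an image reference tagged with a shortened commit SHA."""
    return f"{registry}/{repository}:{commit_sha[:12]}"


def with_image(deployment, container_name, image):
    """Return a copy of the Deployment with one container's image replaced."""
    updated = copy.deepcopy(deployment)
    for container in updated["spec"]["template"]["spec"]["containers"]:
        if container["name"] == container_name:
            container["image"] = image
    return updated


deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {"containers": [{"name": "web", "image": "registry.example.com/demo/web:old"}]},
        },
    },
}

ref = image_ref("registry.example.com", "demo/web", "3f2c1a9b7d4e5f60718293a4b5c6d7e8f9012345")
release = with_image(deployment, "web", ref)
print(release["spec"]["template"]["spec"]["containers"][0]["image"])
```

Using a commit-based tag instead of a moving tag such as `latest` makes it clear which build is running and gives rollbacks a precise target.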

Scenarios Requiring Container Orchestration

Container orchestration is crucial in several scenarios. For instance, applications with high traffic demand require the ability to scale quickly and efficiently. Microservice architectures, with their distributed nature, benefit greatly from container orchestration to manage the interactions between services. Cloud-native applications, designed for dynamic environments, necessitate the agility provided by container orchestration. Furthermore, deployments requiring high availability and fault tolerance are ideal candidates for container orchestration.

Continuous delivery and deployment pipelines, where automation is paramount, rely on container orchestration for efficient and reliable execution. Organizations with distributed teams benefit from the centralized management of containerized applications provided by container orchestration.

Container Security Considerations

Containerization, while offering significant advantages in terms of portability and efficiency, introduces new security challenges. Proper security measures are paramount to protect applications and data running within containers, and to ensure the integrity of the entire infrastructure. A robust security posture needs to be integrated throughout the entire container lifecycle, from image creation to deployment and management.

A comprehensive security approach involves proactive identification and mitigation of vulnerabilities, rigorous validation of container images, and continuous monitoring of containerized applications. This proactive approach reduces the risk of exploits and unauthorized access to sensitive data. Effective security measures not only protect the containerized applications but also enhance the overall security posture of the system.

Common Security Risks in Container Environments

Container security faces several unique challenges compared to traditional server-based deployments. These include vulnerabilities in the container image itself, potential attacks on the container runtime, and insufficiently secured orchestration layers.

- Image-level vulnerabilities: Malicious code or compromised dependencies embedded within the container image can be exploited by attackers to gain unauthorized access or manipulate application behavior. This is a significant concern as container images are often built from a multitude of components, making comprehensive security scanning crucial.

- Container runtime attacks: Vulnerabilities in the container runtime environment can be exploited to compromise containers. Attackers may attempt to escalate privileges within the container or use it as a launchpad for further attacks.

- Orchestration layer vulnerabilities: Kubernetes, as a container orchestration platform, has its own security considerations. Misconfigurations or vulnerabilities in Kubernetes deployments can expose the entire cluster to potential threats. Inadequate network configurations or unauthorized access to the control plane can lead to widespread compromise.

- Data breaches: Sensitive data exposed within containers can be targeted by attackers. Protecting data at rest and in transit within the containerized environment is critical.

Securing Docker Images

Securing Docker images involves a multi-layered approach to ensure their integrity and freedom from vulnerabilities.

- Image scanning: Regular scanning of Docker images for known vulnerabilities is essential. Tools like Trivy, Clair, and Anchore Engine can identify and categorize vulnerabilities in the image’s base layers and dependencies. Docker Bench for Security complements these by checking the Docker host and daemon configuration against common best practices.

- Dependency management: Carefully managing dependencies in the Dockerfile is crucial. Utilizing a dedicated package manager and restricting the usage of vulnerable packages is vital to preventing vulnerabilities.

- Multi-stage builds: Employing multi-stage builds in Docker can reduce the size of the final image and improve security. This approach minimizes the number of packages and libraries included, thereby reducing the attack surface.

- Security hardening: Applying security best practices during the image build process is vital. This includes running the application as a non-root user, keeping credentials out of the image, and exposing only the ports and services the application needs.

Securing Kubernetes Deployments

Kubernetes security involves hardening the control plane, securing the container runtime, and controlling access to the cluster.

- Network policies: Implementing network policies to restrict traffic flow between containers and pods is critical to prevent unauthorized communication and data breaches. This isolation mechanism limits the impact of a compromised container.

- Role-Based Access Control (RBAC): Implementing RBAC in Kubernetes provides granular control over user permissions and access to resources. This approach limits the actions that users can perform within the cluster, mitigating the impact of unauthorized access.

- Secret management: Securely storing and managing sensitive information, such as API keys and passwords, is essential. Kubernetes Secrets keep these credentials out of configuration files and container images. By default, Secret values are only base64-encoded, so enabling encryption at rest and restricting access through RBAC is needed to protect them properly.

- Regular security audits: Conducting regular security audits of Kubernetes deployments can identify vulnerabilities and misconfigurations that could be exploited by attackers.
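
Two of the measures above, network policies and RBAC, are expressed as ordinary Kubernetes objects. The sketch below builds one of each as a Python dictionary and prints them as JSON. The namespace `shop`, the labels, and the object names are made up for the example, and a NetworkPolicy only takes effect when the cluster’s network plugin enforces it.

```python
import json


def allow_ingress_from(name, namespace, target_labels, source_labels, port):
    """NetworkPolicy: only Pods matching source_labels may reach the targets on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": source_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


def read_only_pod_role(name, namespace):
    """RBAC Role granting read-only access to Pods in a single namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [
            {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}
        ],
    }


policy = allow_ingress_from("db-from-api", "shop", {"app": "db"}, {"app": "api"}, 5432)
role = read_only_pod_role("pod-reader", "shop")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [policy, role]}, indent=2))
```

A Role grants nothing until a RoleBinding attaches it to a user, group, or service account, so the binding is where access is actually handed out.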

Container Image Scanning and Vulnerability Management

Container image scanning is a crucial step in preventing vulnerabilities.

- Automated scanning: Tools like Anchore Engine, Trivy, and Clair automatically scan container images for known vulnerabilities. These tools analyze the image’s layers, dependencies, and runtime environment to identify potential threats.

- Vulnerability reporting and remediation: Automated scanning tools generate reports detailing identified vulnerabilities. These reports provide insights into the nature and severity of the vulnerabilities. Actionable remediation steps and prioritization based on severity are crucial to address these issues.

- Continuous monitoring: Implement continuous monitoring of container images to detect new vulnerabilities as they emerge. This ensures the ongoing security of the containerized environment.
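
The reporting and prioritization step can be sketched as a small filter over scan findings. The report structure below is hypothetical and does not match the output format of any particular scanner; the finding IDs are placeholders rather than real advisories.

```python
SEVERITY_ORDER = {"CRITICAL": 0, "HIGH": 1, "MEDIUM": 2, "LOW": 3}


def prioritize(findings, fail_on=("CRITICAL", "HIGH")):
    """Sort findings by severity and pick the ones that should block a release.

    A finding blocks only if its severity is listed in fail_on and a fixed
    version exists, so the pipeline fails on issues that can be remediated now.
    """
    ordered = sorted(findings, key=lambda f: (SEVERITY_ORDER[f["severity"]], f["id"]))
    blocking = [f for f in ordered if f["severity"] in fail_on and f.get("fixed_version")]
    return ordered, blocking


findings = [
    {"id": "EXAMPLE-0003", "package": "libfoo", "severity": "LOW", "fixed_version": "1.2.4"},
    {"id": "EXAMPLE-0001", "package": "openssl", "severity": "CRITICAL", "fixed_version": "3.0.1"},
    {"id": "EXAMPLE-0002", "package": "zlib", "severity": "HIGH", "fixed_version": None},
]

ordered, blocking = prioritize(findings)
for finding in ordered:
    print(finding["severity"], finding["id"], finding["package"])
print("blocking:", [f["id"] for f in blocking])
```

Whether unfixable findings should also block a release is a policy choice; this sketch lets them through but still lists them in the ordered report.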

Security in Container Orchestration

Security is integral to the container orchestration process.

- Security hardening of the control plane: Securing the Kubernetes control plane through proper authentication and authorization mechanisms, network segmentation, and intrusion detection/prevention systems is crucial. This safeguards the core of the orchestration system.

- Regular updates and patching: Keeping Kubernetes and its components updated with the latest security patches is vital to mitigate known vulnerabilities. This proactive approach minimizes the attack surface.

Final Review

In summary, containerization and orchestration are powerful, versatile tools. From building and deploying applications with Docker to managing and scaling them with Kubernetes, this guide has covered the core concepts behind these technologies. By mastering Docker and Kubernetes, developers can achieve greater efficiency, scalability, and reliability in their application deployments, ultimately leading to more robust and maintainable systems.