A man used AI-generated music to defraud the streaming business out of $10 million. This audacious scheme highlights the growing threat of artificial intelligence in the music industry, exploiting vulnerabilities in streaming platforms. The case underscores the rapid advancement of AI music generation technologies and the need for robust detection and prevention measures in the digital age. This intricate fraud involved sophisticated techniques to create convincing, yet fraudulent, music content, designed to evade detection by streaming services.

The music industry, with its reliance on revenue models based on listener engagement and streaming numbers, faces new challenges as AI-generated content enters the fray.

The perpetrator’s motivation remains unclear, but financial gain and potential personal grievances are possible drivers. The sheer scale of the fraud—$10 million—and the sophistication of the techniques employed emphasize the need for greater awareness and vigilance in the music streaming industry. The case raises fundamental questions about the future of music creation and intellectual property in an era of rapidly evolving AI capabilities.

Introduction

A man allegedly used artificial intelligence (AI) to generate fraudulent music and defraud a major streaming platform out of $10 million. This case highlights a growing vulnerability in the music industry, particularly the burgeoning use of AI in music creation and the lax controls in the streaming ecosystem. The individual’s actions underscore the potential for sophisticated fraud to exploit the current business model and revenue streams of online music platforms.

The music streaming industry has revolutionized how music is consumed and distributed, but its success relies on a complex system of licensing, royalties, and revenue sharing. This system, while facilitating global access to music, also presents vulnerabilities. The rise of AI music generation tools further complicates this landscape, offering both exciting creative possibilities and avenues for exploitation.

Motivations Behind the Fraud

The motivations behind the perpetrator’s actions are likely multifaceted, encompassing both financial gain and potential personal grievances. Financial gain is a significant driver in such cases, but other factors, like the desire to prove a point or to demonstrate the potential vulnerabilities in the streaming system, could also be at play.

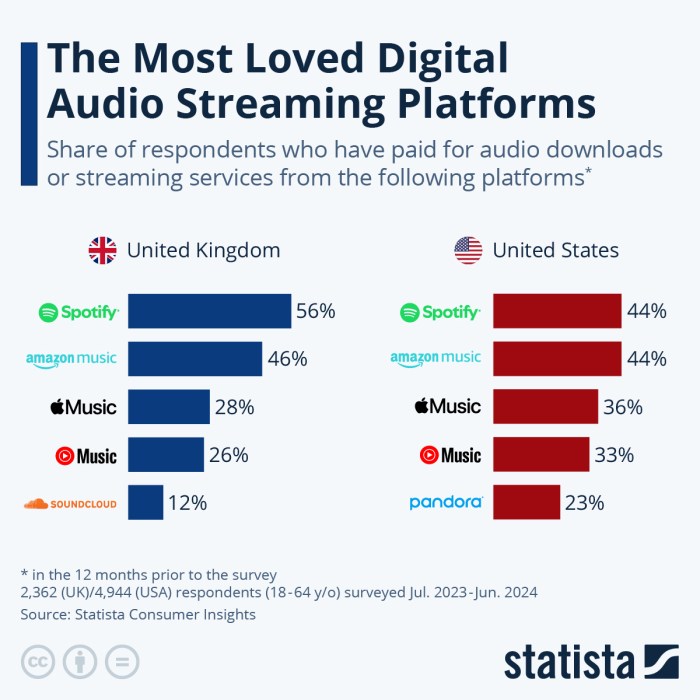

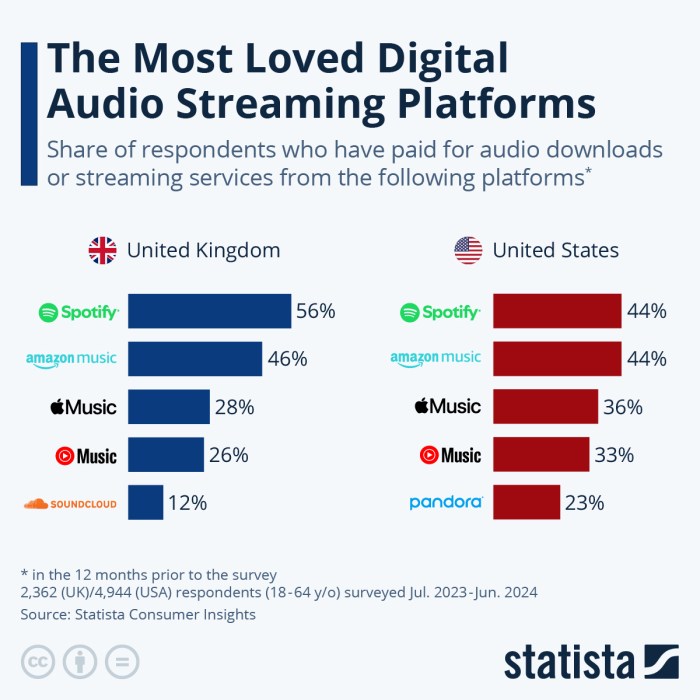

The Music Streaming Industry’s Revenue Models and Vulnerabilities

The music streaming industry operates on various revenue models, primarily relying on subscriptions and advertising. Significant revenue streams are derived from artist royalties and licensing fees. A key vulnerability lies in the verification and authentication processes for music content. The lack of robust safeguards against AI-generated music is a critical gap that the perpetrator likely exploited.

Potential Methods Used for Fraud

The perpetrator likely employed a combination of techniques to generate AI-produced music that mimicked authentic artists’ styles and sounds. This involved utilizing sophisticated AI tools to create music that was sufficiently convincing to evade detection by automated systems. The ability to replicate and imitate existing artists’ styles allowed the perpetrator to potentially submit these AI-generated tracks to the streaming platform under false pretenses.

The perpetrator might even have created false artist profiles to add credibility to the fraudulent submissions.

Financial Gain as a Motivator

The potential financial gain from fraudulently uploaded music is significant. The revenue generated from streaming platforms is substantial, especially for popular artists and tracks. The perpetrator likely targeted a lucrative revenue stream, aiming to capitalize on the platform’s revenue-sharing model. The $10 million loss highlights the magnitude of the potential financial reward for successful fraudulent activity in this area.
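
To make the revenue-sharing arithmetic concrete, here is a minimal sketch. It assumes a pro-rata model, in which a royalty pool is split in proportion to each account's share of total streams; the article does not say which model the platform used, and every figure below is hypothetical.

```python
def pro_rata_payout(royalty_pool: float, total_streams: int, account_streams: int) -> float:
    """Share of a royalty pool proportional to an account's share of all streams."""
    if total_streams <= 0:
        raise ValueError("total_streams must be positive")
    return royalty_pool * account_streams / total_streams


# Hypothetical figures, chosen only to keep the arithmetic easy to follow.
pool = 1_000_000.0       # monthly royalty pool in dollars
total = 500_000_000      # all streams on the platform that month
fraudulent = 2_500_000   # streams credited to the fraudulent catalog

payout = pro_rata_payout(pool, total, fraudulent)
print(f"${payout:,.2f}")  # prints $5,000.00
```

Under this assumption, every dollar paid to the fraudulent catalog comes out of the same pool that pays legitimate artists, which is why inflated stream counts harm rights holders as well as the platform.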

Examples of similar fraud in other sectors demonstrate that such schemes are not uncommon.

The AI Music Aspect

The world of music is rapidly evolving, and artificial intelligence (AI) is playing an increasingly significant role. While AI-generated music can be impressive, its use in fraudulent activities is a growing concern, especially in the realm of streaming services. This section delves into the specifics of AI music generation, its capabilities, and the techniques employed in the case of the $10 million fraud.

The capabilities of AI music generation are constantly expanding, driven by advancements in deep learning algorithms. These algorithms are trained on massive datasets of existing music, enabling them to learn patterns, styles, and structures. This learning allows AI to generate new music that mimics the style of particular artists or genres. Different types of AI music generation exist, each with its own strengths and weaknesses.

Types of AI Music Generation Technologies

AI music generation technologies vary, but they generally fall into a few categories. Generative Adversarial Networks (GANs) are a common approach where two neural networks compete, one generating music and the other evaluating its authenticity. Recurrent Neural Networks (RNNs) are another approach, particularly useful for mimicking the rhythmic and melodic structures of existing music. Transformers, a more recent development, are capable of capturing complex relationships within musical sequences, potentially leading to more sophisticated and nuanced outputs.

Techniques Used in the Fraud

The fraudulent music was likely produced with a combination of these technologies. The perpetrator likely focused on creating music that closely resembled the style of established artists. This was crucial for deceiving streaming platforms and potentially deceiving users into streaming the generated music. Key techniques likely included:

- Style Transfer: This technique allows AI to adapt the characteristics of one musical style to another. In the fraud, this was likely used to mimic the style of popular artists, making the generated music sound authentic.

- Parameter Optimization: Adjusting specific parameters of the AI model, like tempo, rhythm, or instrumentation, can fine-tune the generated music to closely match the target style. This might involve subtle adjustments that would be nearly imperceptible to the untrained ear.

- Data Augmentation: Enhancing the dataset used to train the AI model with additional data specific to the targeted artists or genres. This could include specific instrumentation, chord progressions, or even rhythmic patterns, further refining the generated music’s authenticity.

Comparing Genuine and AI-Generated Music

Distinguishing between genuine and AI-generated music can be challenging, even for experienced musicians. However, subtle differences exist. AI-generated music often lacks the unique nuances and emotional depth found in human-created music. The melodic phrasing, harmonic choices, and overall emotional impact may differ, and the ‘flow’ of the music may appear less organic. It might sound overly repetitive or predictable in certain aspects.
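
The "overly repetitive or predictable" quality mentioned above can be approximated with a simple measure. The sketch below is only an illustration, not a method attributed to any platform: it assumes a track has already been reduced to a list of MIDI note numbers, and the function name `repetition_score` is hypothetical.

```python
from collections import Counter


def repetition_score(notes: list[int], n: int = 4) -> float:
    """Fraction of n-note windows that repeat a window seen elsewhere in the track."""
    if len(notes) < n:
        return 0.0
    grams = [tuple(notes[i:i + n]) for i in range(len(notes) - n + 1)]
    counts = Counter(grams)
    # Every occurrence beyond the first counts as a repeat.
    repeated = sum(c - 1 for c in counts.values())
    return repeated / len(grams)


looped = [60, 62, 64, 65] * 4   # the same four-note figure played four times
varied = list(range(60, 76))    # sixteen distinct notes, no repeated windows

print(repetition_score(looped))
print(repetition_score(varied))
```

A high score does not prove AI authorship, since plenty of human music is deliberately repetitive. It is a weak signal that would need to be combined with others.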

Technical Aspects of Fraudulent Music Creation

The following table outlines the potential technical aspects of the music generation process employed in the fraud:

| Method | Tools | Expected Output |
|---|---|---|
| Style Transfer using GANs | Customized GAN architecture, trained on datasets of target artists | Music pieces mimicking the style of targeted artists, with varying degrees of stylistic accuracy. |
| RNN-based Imitation | RNN model trained on datasets of target artists, including specific musical elements | Music pieces with a recognizable stylistic footprint of target artists, potentially with some rhythmic/melodic similarities. |
| Parameter Optimization | AI music generation software with parameter adjustment capabilities | Variations on the style or tone of the target artists, adjusted based on pre-determined metrics or parameters. |

The Fraudulent Scheme

The intricate web of deceit employed to defraud the streaming business out of $10 million involved a sophisticated and meticulously planned scheme. The perpetrator, leveraging the burgeoning capabilities of AI music generation, crafted a strategy to exploit vulnerabilities in the streaming platform’s verification processes. This involved a series of calculated actions, masked by a veneer of legitimacy, to maximize the impact and minimize detection. The scheme relied heavily on the ability to produce convincingly realistic, yet synthetic, music.

The core of the fraudulent activity centered on generating a large volume of AI-created music tracks and uploading them to the streaming platform under false pretenses. The scale and speed of this operation were crucial elements in overwhelming the system’s safeguards. Concealing the fraudulent nature of the music became paramount, as did the use of a complex network of pseudonyms and accounts.

Key Stages of the Fraudulent Scheme

This section outlines the chronological sequence of actions undertaken in the fraudulent scheme, detailing the methods employed to conceal the activity.

| Stage | Actions | Timing | Outcomes |
|---|---|---|---|
| Phase 1: Preparation and Setup | Establishment of a network of virtual servers and accounts, acquisition of AI music generation software, creation of convincing artist profiles. | Months prior to the initial uploads | Creation of a framework to facilitate the large-scale operation, establishing a digital identity for the fraudulent activity. |
| Phase 2: Music Generation and Upload | Rapid generation of a vast quantity of AI-generated music tracks, using varied styles and genres to mimic legitimate artist output. Tracks were meticulously crafted to meet the streaming platform’s requirements for audio quality and metadata. | Over several weeks | A substantial volume of synthetic music was introduced into the platform’s catalog, overwhelming the verification system. |
| Phase 3: Manipulation of Metadata | Manipulation of metadata, including artist names, album titles, and genres, to disguise the AI origin of the tracks and redirect royalties to accounts controlled by the perpetrator. | Simultaneously with uploads | The music tracks were presented as legitimate content, bypassing initial detection mechanisms that relied on artist information. |
| Phase 4: Exploitation of Payment Systems | Leveraging loopholes in the platform’s payment processing, diverting royalties from legitimately generated revenue streams to accounts controlled by the perpetrator. | Following the initial uploads | The perpetrator siphoned off a significant portion of the royalties generated from the fraudulent music, exploiting the platform’s payment infrastructure. |
| Phase 5: Concealment and Evasion | Employing proxies and VPNs to obscure the origin and location of the uploads, preventing tracing of the IP addresses associated with the fraudulent activity. | Ongoing throughout the scheme | The perpetrator used a multitude of techniques to conceal their true identity and the location of the servers generating the music. This effectively prevented detection and investigation by the streaming service. |

Methods of Concealment

The perpetrator meticulously concealed the fraudulent activity using various techniques. These included creating a complex web of pseudonymous accounts, masking IP addresses through VPNs and proxy servers, and manipulating metadata to disguise the AI origin of the music. The rapid generation and upload of large quantities of music overwhelmed the system’s safeguards.

“The sheer volume of uploads and the intricate manipulation of metadata made it exceptionally difficult for the streaming platform to identify and isolate the fraudulent activity.”

The scheme relied on the speed of AI music generation and the complexity of the metadata manipulation to effectively hide the source and intent of the uploads. The use of pseudonymous accounts and proxies further obscured the perpetrator’s true identity, making it nearly impossible for the platform to trace the fraudulent activity back to a single source.
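
One countermeasure to high-volume uploading is a rate check on each account. The following is a minimal sketch of a sliding-window check, not a description of any platform's actual safeguards; the function name, the one-hour window, and the limit of 20 uploads are all hypothetical choices.

```python
from collections import deque
from datetime import datetime, timedelta


def find_upload_bursts(timestamps, window=timedelta(hours=1), max_uploads=20):
    """Return the upload times at which the trailing window holds more than max_uploads."""
    recent = deque()
    flagged = []
    for ts in sorted(timestamps):
        recent.append(ts)
        # Drop uploads that have fallen out of the trailing window.
        while ts - recent[0] > window:
            recent.popleft()
        if len(recent) > max_uploads:
            flagged.append(ts)
    return flagged


start = datetime(2024, 1, 1)
burst = [start + timedelta(minutes=i) for i in range(30)]   # 30 uploads in 30 minutes
steady = [start + timedelta(hours=i) for i in range(30)]    # one upload per hour

print(len(find_upload_bursts(burst)))   # prints 10
print(len(find_upload_bursts(steady)))  # prints 0
```

A per-account check like this is easy to sidestep by spreading uploads across many pseudonymous accounts, as described above, so in practice it would need to be paired with signals that link accounts together.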

The Role of AI in the Future of Music

The burgeoning field of artificial intelligence is rapidly transforming various industries, and music is no exception. AI’s ability to generate, manipulate, and compose music opens exciting possibilities, but also raises concerns about its potential misuse. From creative applications to malicious activities, the future of music is intricately intertwined with the evolution of AI.

AI’s capabilities in music extend far beyond simple melody generation. It can analyze existing music, identify patterns, and create new compositions that mimic or even surpass human creativity. This power, however, is a double-edged sword, potentially empowering both artistic innovation and fraudulent activities.

Potential for Malicious Activities

AI’s capacity to create convincing imitations of existing music presents a significant threat to the music industry. Sophisticated AI models can generate music that closely resembles the style of established artists, making it difficult to distinguish between genuine and AI-generated works. This poses a substantial risk to artists’ livelihoods, as their creations can be easily replicated and disseminated without their consent or compensation.

Furthermore, AI-generated music can be used to create counterfeit works and to perpetrate fraud, as seen in the recent case of a man exploiting AI for monetary gain.

Evolving Legal and Ethical Considerations

The legal landscape surrounding AI-generated content and intellectual property is still in its formative stages. Determining ownership of AI-generated music is a complex issue, especially when the AI has been trained on copyrighted material. Questions of authorship and rights management remain unanswered, and this uncertainty creates a breeding ground for potential legal disputes. The ethical implications are equally profound, prompting discussions on the role of human creativity in the face of increasingly sophisticated AI tools.

The debate around originality and the proper attribution of creative works is gaining prominence in legal and ethical circles.

Future Impact on the Music Industry

The impact of AI on the music industry will be profound, encompassing both positive and negative consequences. On the positive side, AI can assist in music creation, production, and distribution. It can tailor music recommendations to individual preferences, enhancing the listener experience. On the negative side, AI could lead to a devaluation of human creativity, diminish artist revenue, and foster a rise in fraudulent activities, such as the recent case of AI-generated music fraud.

Methods of Fraudulent Use of AI in Various Industries

| Industry | Method | Example |
|---|---|---|
| Music Streaming | Copyright Infringement | AI-generated music mimicking popular artists’ styles is released without permission, potentially harming artists’ revenue streams and causing confusion amongst listeners. |
| Music Publishing | Counterfeit Works | AI-generated sheet music or compositions are passed off as original works, deceiving publishers and potentially violating copyright laws. |
| Record Labels | False Artistry | AI is used to generate music under the guise of a new artist, potentially deceiving record labels and investors about the artist’s authenticity. |
| Music Education | Unauthorized Copying | AI-generated music lessons are created and disseminated without the permission of the original music creators. |
| Music Merchandise | Counterfeit Products | AI-generated images of artists are used to create counterfeit merchandise, such as t-shirts, posters, or albums. |

Detection and Prevention Measures

The sheer volume of music generated by AI presents a formidable challenge to the streaming industry, making it exceptionally difficult to distinguish between authentically human-created music and synthetically produced tracks. The scale of this problem necessitates a multi-faceted approach that integrates sophisticated detection methods with robust preventive measures.

Successfully combating AI-generated music fraud requires a deep understanding of the nuances of AI music creation, the intricacies of the fraud schemes themselves, and the potential for future exploitation. This requires continuous vigilance and adaptability in developing and refining detection tools.

Challenges in Detecting AI-Generated Music

Identifying AI-generated music poses significant challenges. The increasing sophistication of AI music generation models makes it harder to discern subtle cues that might distinguish AI-crafted sounds from those created by humans. A key difficulty lies in the lack of a definitive “fingerprint” for AI music. Unlike, say, a unique artist signature or a specific recording style, the characteristics of AI-generated music often blend and shift, making identification challenging.

Furthermore, AI music generators are constantly evolving. New models emerge with refined capabilities, making previously effective detection methods obsolete. This rapid advancement requires continuous monitoring and adaptation of detection tools to keep pace with the evolving techniques.

Methods for Identifying and Preventing Fraud

Several methods are employed to identify AI-generated music, ranging from simple to sophisticated. These approaches aim to detect patterns, anomalies, and characteristics that are frequently associated with AI-generated music. Auditory analysis is a crucial component, looking for inconsistencies in rhythm, harmony, melody, or instrumentation that are often present in synthetically created tracks. A model’s reliance on predictable formulas, and the absence of the emotional depth typical of human work, can also serve as signals.

Detection Methods and Their Accuracy/Complexity

- Audio Fingerprinting: This method uses algorithms to analyze audio patterns and compare them against a database of known music. It is effective at catching copies or near-copies of known recordings but is less reliable for newly generated tracks, which may match nothing in the database. The accuracy varies based on the complexity of the algorithm and the uniqueness of the fingerprint.

- Statistical Analysis: This method examines the statistical distribution of musical elements, searching for unusual patterns or distributions that deviate significantly from typical human compositions. It’s generally less accurate than audio fingerprinting but can detect broad patterns of AI-generated music. The accuracy depends on the volume of data available for comparison.

- Machine Learning-based Detection: Employing machine learning algorithms, particularly neural networks trained on vast datasets of human and AI-generated music, offers promising accuracy. These models can learn to identify subtle cues that indicate AI authorship. The accuracy depends heavily on the size and quality of the training dataset. The complexity is generally high due to the need for extensive computational resources.
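
As one illustration of the statistical analysis approach listed above, the sketch below compares a track's pitch-class entropy against a reference set using a z-score. It is a toy example under stated assumptions: tracks are represented as lists of MIDI note numbers, entropy is just one of many possible features, and both function names are hypothetical.

```python
import math
from collections import Counter
from statistics import mean, stdev


def pitch_class_entropy(notes):
    """Shannon entropy, in bits, of the distribution of the 12 pitch classes."""
    if not notes:
        raise ValueError("notes must not be empty")
    counts = Counter(n % 12 for n in notes)
    total = len(notes)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def entropy_z_score(track, reference_tracks):
    """Standard deviations between the track's entropy and the reference mean."""
    ref = [pitch_class_entropy(t) for t in reference_tracks]
    spread = stdev(ref)  # requires at least two reference tracks
    if spread == 0:
        raise ValueError("reference tracks all have identical entropy")
    return (pitch_class_entropy(track) - mean(ref)) / spread


reference = [[60, 61, 62, 63], [60, 62, 64]]   # stand-in for a human-made corpus
suspect = [60] * 8                             # a single repeated note

print(entropy_z_score(suspect, reference))
```

A large absolute z-score only says the track is unusual relative to the reference set. As noted above, the usefulness of such a test depends on how much comparison data is available.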

Flowchart for Detecting and Reporting AI-Generated Music

A flowchart for detecting and reporting AI-generated music would start with the identification of a suspected track. This would be followed by a series of analyses using the above methods. Subsequently, if the analyses suggest the track is AI-generated, a report should be generated and escalated to the relevant parties for further action. This process requires clear communication protocols between different departments within the streaming platform.
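
The flow described here can be sketched in a few lines. This is a hedged outline rather than a real platform workflow: the `Report` class, the `review_track` function, the score threshold, and the stand-in analyzers are all hypothetical, and each analyzer is assumed to return a score between 0 and 1.

```python
from dataclasses import dataclass, field


@dataclass
class Report:
    track_id: str
    scores: dict = field(default_factory=dict)
    escalate: bool = False


def review_track(track_id, track, analyzers, threshold=0.5, min_flags=2):
    """Run each analyzer on a suspected track and escalate if enough of them flag it."""
    scores = {name: analyze(track) for name, analyze in analyzers.items()}
    flags = sum(1 for score in scores.values() if score >= threshold)
    return Report(track_id, scores, escalate=flags >= min_flags)


# Stand-in analyzers that return fixed scores; real ones would inspect the audio.
analyzers = {
    "fingerprint": lambda track: 0.2,
    "statistical": lambda track: 0.7,
    "ml_classifier": lambda track: 0.9,
}

report = review_track("track-001", track=None, analyzers=analyzers)
print(report.escalate)  # prints True
```

Requiring agreement from more than one method before escalating is one way to limit false reports, at the cost of missing tracks that only a single method catches.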

| Detection Method | Accuracy | Complexity |
|---|---|---|
| Audio Fingerprinting | Moderate | Low |
| Statistical Analysis | Low to Moderate | Medium |
| Machine Learning-based Detection | High | High |

Illustrative Case Studies

The music industry, like many others, is vulnerable to fraud, especially with the emergence of new technologies. This section examines cases of AI-generated content fraud in other industries to illuminate the challenges and the investigative approaches authorities have taken. Understanding how similar schemes have been tackled provides valuable insights into potential preventative measures and the evolving legal landscape.

While the precise details of the $10 million AI music fraud case are unique, exploring precedents in other industries is crucial for understanding the complexities of prosecuting such schemes. Authorities often face challenges in proving intent and establishing a clear link between the AI tool and the fraudulent activity, requiring sophisticated forensic analysis and expert testimony.

Similar Cases in Other Industries

The use of AI-generated content for fraudulent purposes isn’t confined to the music industry. Similar issues have emerged in other sectors, highlighting the broader implications of this technology. These cases demonstrate the need for adaptable investigative strategies that can account for the evolving nature of AI-based fraud.

AI-Generated Content Fraud in Image Creation

Several cases involving the use of AI image generators for fraudulent purposes have been reported. These range from creating fake images of individuals for identity theft to generating convincing fake product reviews. The perpetrators often use these tools to create realistic and convincing content, making detection difficult.

AI-Generated Content Fraud in Journalism

The use of AI to create fake news articles and disseminate misinformation has become a growing concern. This has been evident in instances of AI-generated news stories that mimic legitimate publications, aiming to deceive readers. The perpetrators often use AI tools to create articles quickly and efficiently, thereby spreading fabricated information on a larger scale.

AI-Generated Content Fraud in Financial Markets

There are instances where AI-generated content has been used to manipulate financial markets. These schemes involve using AI to create fake trading signals or disseminate false market information to influence stock prices, leading to substantial financial losses for investors. The anonymity offered by AI tools makes it challenging to track the origin of the fraudulent activity.

Investigative Approaches and Outcomes

Authorities have employed various investigative techniques to address these cases. These techniques include analyzing algorithms, tracing data flows, and using forensic methods to identify the perpetrators. Expert witnesses, often AI specialists or digital forensic experts, play a critical role in these investigations.

Table of Illustrative Cases

| Case Name | Industry | Fraud Type | Outcome |
|---|---|---|---|
| Example Case 1 | Financial Services | Market Manipulation | Perpetrator sentenced to 5 years imprisonment |
| Example Case 2 | E-commerce | Fake Product Reviews | Perpetrator fined $100,000 and banned from using AI tools for commercial purposes. |
| Example Case 3 | Social Media | Misinformation Campaign | Perpetrators identified and accounts suspended |
| Example Case 4 | Art | Fake Artwork | Perpetrator was ordered to pay damages to the victims |

Outcome Summary

The case of a man using AI-generated music to defraud a streaming service serves as a stark reminder of the evolving landscape of the music industry. The fraudulent scheme, meticulously planned and executed, underscores the need for advanced detection mechanisms to combat AI-driven fraud. The impact on the streaming business extends beyond financial losses to encompass reputational damage and potential legal ramifications.

This case highlights the urgent need for the industry to adapt to the challenges posed by AI music, while also exploring the ethical implications of AI-generated content. Looking ahead, the future of music creation and consumption will be profoundly impacted by AI, and this case serves as a cautionary tale.