The MIT Moral Machine study offers a window into the complex choices facing the developers of autonomous vehicles. The project examines the ethical dilemmas that arise when a self-driving car must make a split-second decision in an unavoidable accident. By analyzing participant responses, the study offers a unique glimpse into the values and priorities of a diverse population, revealing potential biases and inconsistencies in our moral judgments.

The study explores accident scenarios in which a car with sudden brake failure must either stay on course or swerve, which determines whether one group of passengers or pedestrians is spared or another. Participants from around the world were shown these scenarios and asked to choose the outcome they found more morally acceptable. The results offer insights into the societal implications of programming ethical choices into autonomous vehicles and into how public perception shapes these considerations.

By examining how people weigh these trade-offs, the study provides valuable data for engineers and policymakers.

Introduction to Self-Driving Car Ethics

The future of transportation may hinge on the integration of self-driving cars. However, this technological advancement presents complex ethical dilemmas that demand careful consideration. The “Moral Machine” project, a pioneering MIT study, explores how autonomous vehicles should make life-or-death decisions in unavoidable accident scenarios, and in particular what the public perceives as acceptable in those decisions. Understanding these dilemmas is crucial for shaping responsible AI development and ensuring public trust in this transformative technology.

This initiative sought to gauge the public’s moral compass in the face of complex scenarios, identifying shared values and potential biases in ethical judgment. The project’s methodology involved presenting participants with a series of hypothetical scenarios involving unavoidable accidents, prompting them to choose between different potential outcomes. This data collection provided insights into the public’s ethical framework when faced with the challenging task of making life-altering decisions.

Ethical Dilemmas in Autonomous Vehicles

Autonomous vehicles, while promising greater safety and efficiency, introduce novel ethical considerations. The absence of a human driver necessitates the development of algorithms to make rapid and complex decisions in critical situations. These situations often involve trade-offs between different values, such as human life, property, or other factors. The critical challenge lies in defining how these algorithms should prioritize competing interests.

Methodology of the MIT Study

The MIT study used an online platform to collect data at scale. Participants were shown a series of scenarios in which a self-driving car faced an unavoidable accident and had to choose between two courses of action, typically staying on course or swerving. For each scenario, participants picked the outcome they found more acceptable according to their own ethical judgment. The scenarios were designed to isolate how different characteristics of the people, and sometimes animals, involved affect these judgments.
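
To make this setup concrete, here is a minimal sketch of how one two-outcome dilemma and a participant's response might be represented in code. The class names (`Action`, `Outcome`, `Dilemma`, `Response`) and the character labels are hypothetical illustrations, not the Moral Machine's actual data format.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    STAY = "stay on course"
    SWERVE = "swerve"


@dataclass(frozen=True)
class Outcome:
    action: Action
    victims: tuple[str, ...]  # characters killed if the car takes this action


@dataclass(frozen=True)
class Dilemma:
    stay: Outcome
    swerve: Outcome

    def spared_by(self, choice: Action) -> tuple[str, ...]:
        """Characters who survive when the car takes `choice`."""
        # Taking one action spares the victims of the other action.
        other = self.swerve if choice is Action.STAY else self.stay
        return other.victims


@dataclass(frozen=True)
class Response:
    dilemma: Dilemma
    choice: Action  # the outcome the participant judged more acceptable


# Hypothetical example: stay on course and kill two elderly pedestrians,
# or swerve and kill one child.
dilemma = Dilemma(
    stay=Outcome(Action.STAY, ("old man", "old woman")),
    swerve=Outcome(Action.SWERVE, ("girl",)),
)
response = Response(dilemma, Action.SWERVE)
print(response.dilemma.spared_by(response.choice))  # ('old man', 'old woman')
```

Modeling each dilemma as exactly two outcomes mirrors the binary stay-or-swerve choice; richer scenarios would need a list of outcomes instead.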

Scenarios Explored in the Study

The study explored a diverse range of scenarios. They were stylized rather than realistic, but were designed to probe trade-offs that could arise in real driving. The characters at risk varied in number, age, gender, species (humans or pets), fitness, social status, and lawfulness (for example, pedestrians crossing against the signal), as well as in whether they were passengers or pedestrians.

Types of Accidents or Incidents

The table below groups broad categories of accident scenarios relevant to these dilemmas; the Moral Machine itself framed each case as a choice between two groups of potential victims. Understanding these categories is essential for designing ethical algorithms for self-driving cars.

| Scenario Type | Description |
|---|---|
| Pedestrian vs. Vehicle | A pedestrian steps into the path of a self-driving vehicle. The vehicle must choose between hitting the pedestrian or swerving into another object. |
| Vehicle vs. Vehicle | A self-driving vehicle must choose between colliding with another vehicle or swerving into a barrier. |
| Vehicle vs. Cyclist | A self-driving vehicle must decide between hitting a cyclist or swerving into another object. |
| Vehicle vs. Animal | A self-driving vehicle encounters an animal in its path. The vehicle must choose between hitting the animal or another object. |
| Multiple Victims | A self-driving vehicle faces a situation where multiple individuals or objects are at risk. The vehicle must make a choice that minimizes the harm or damage. |

Analyzing Moral Machine Results

The “Moral Machine” study, a massive online experiment that gathered about 40 million decisions from participants in 233 countries and territories, yielded insights into how different groups weigh human lives in complex scenarios. Its results offer an opportunity to understand public perception of automated decision-making and its potential impact on future autonomous vehicle design. The study presented participants with accident scenarios and asked them to choose which of two groups the car should spare.

The data collected allows for a deeper understanding of the ethical intuitions guiding these decisions. Analyzing the results reveals significant trends in how people prioritize different values in unavoidable accidents. The study highlights the influence of individual perspectives and, especially, cultural factors on ethical judgment, suggesting that a universal ethical framework for autonomous vehicles might be difficult to achieve.

Ultimately, understanding these patterns is critical for developing autonomous vehicles that align with societal values and promote trust.

Demographic Comparisons in Moral Machine Results

The study examined how choices varied with respondents' age, gender, education, income, and political and religious views, as well as with their country. Individual characteristics had relatively modest effects, and none of them reversed the direction of the main preferences; differences between countries and cultural regions were more pronounced. This underscores the multifaceted nature of ethical considerations in accident scenarios.

Trends and Patterns in Participant Choices

Participants exhibited several recurring patterns in their choices. The strongest preferences were for sparing humans over animals, sparing more lives rather than fewer, and sparing the young over the elderly. Weaker but consistent preferences included sparing women over men, higher-status over lower-status characters, fit over less fit characters, pedestrians crossing legally over jaywalkers, and pedestrians over passengers.

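Cultural influences also played a role. The researchers grouped countries into three broad clusters (Western, Eastern, and Southern) whose preferences differed in strength; for example, the preference for sparing the young over the elderly was much weaker in the Eastern cluster.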
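
The published analysis estimated each preference with a conjoint-analysis technique, the average marginal component effect. As a much cruder, hypothetical illustration of the idea, the sketch below computes, from made-up responses, the share of dilemmas with unequal group sizes in which the participant spared the larger group. The `Response` fields and the `share_sparing_more` function are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Response:
    stay_victims: int    # number of characters killed if the car stays on course
    swerve_victims: int  # number of characters killed if the car swerves
    chose_stay: bool     # True if the participant chose to stay on course


def share_sparing_more(responses: list[Response]) -> float:
    """Fraction of unequal-size dilemmas in which the larger group was spared."""
    relevant = [r for r in responses if r.stay_victims != r.swerve_victims]
    if not relevant:
        raise ValueError("no dilemmas with unequal group sizes")
    spared_more = 0
    for r in relevant:
        # Staying kills the "stay" group and spares the "swerve" group, and vice versa.
        killed = r.stay_victims if r.chose_stay else r.swerve_victims
        spared = r.swerve_victims if r.chose_stay else r.stay_victims
        if spared > killed:
            spared_more += 1
    return spared_more / len(relevant)


# Made-up responses for illustration; these are not Moral Machine data.
sample = [
    Response(stay_victims=1, swerve_victims=3, chose_stay=True),   # spares 3, kills 1
    Response(stay_victims=4, swerve_victims=2, chose_stay=False),  # spares 4, kills 2
    Response(stay_victims=2, swerve_victims=5, chose_stay=False),  # spares 2, kills 5
    Response(stay_victims=2, swerve_victims=2, chose_stay=True),   # equal sizes, ignored
]
print(round(share_sparing_more(sample), 3))  # 0.667
```

Unlike a conjoint analysis, this simple proportion does not control for the other attributes that vary between the two groups, so it can confound group size with age, species, and so on.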

Ethical Frameworks Underpinning Participant Choices

The choices made by participants can be read as reflecting different ethical frameworks, although participants were never asked to name one. A preference for sparing more lives is consistent with utilitarianism, which emphasizes the maximization of overall well-being. Deontology, which focuses on adherence to moral rules and duties, may be reflected in choices that protect vulnerable groups or favor people who obey the law. A mix of these and other frameworks, including virtue ethics, likely shaped participants’ decisions, highlighting the complexity of moral judgments.

Frequent Choices in Presented Scenarios

A common choice across scenarios was to spare the larger group: when one group of characters was pitted against a larger one, participants tended to spare the larger group, consistent with a preference for maximizing overall well-being. In scenarios involving vulnerable individuals such as children or pregnant women, participants often prioritized their safety.

These trends reflect the common human instinct to mitigate harm.


Ethical Frameworks in Different Scenarios

| Scenario | Frequent Choice | Possible Underlying Ethical Framework |
|---|---|---|
| More vs. Fewer Lives | Sparing the larger group | Utilitarianism |
| Young vs. Elderly | Sparing the young | Deontology/Virtue Ethics |
| Men vs. Women | Sparing women | Deontology/Virtue Ethics |
| Passengers vs. Pedestrians | Sparing pedestrians (a weak preference) | Utilitarianism/Deontology |

Ethical Frameworks and Dilemmas

Self-driving cars promise a safer future on the road, but their development raises complex ethical questions. As these vehicles navigate increasingly intricate situations, they must make split-second decisions in the face of unavoidable accidents. These decisions aren’t just about physics; they’re about values, and the frameworks we use to define those values will fundamentally shape the future of autonomous vehicles. The challenge lies in translating human ethical principles into algorithms.

Different philosophical frameworks offer contrasting perspectives on how to weigh competing values in these situations. Understanding these frameworks is crucial for developing ethical guidelines for self-driving cars that align with societal values.


Utilitarian Framework

The utilitarian framework prioritizes maximizing overall well-being and minimizing harm. It focuses on the consequences of actions, seeking the outcome that benefits the greatest number of people. For an autonomous vehicle, this lends itself to a computational approach: the algorithm estimates the probability and severity of harm to each person affected by each possible action, then chooses the action with the lowest expected total harm.
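
A minimal sketch of this expected-harm calculation follows, assuming each option can be summarized as (probability of serious harm, number of people exposed) pairs. The `Option` class, the `utilitarian_choice` function, and all probabilities are hypothetical; estimating such probabilities reliably is the hard part in practice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    # One (probability of serious harm, number of people exposed) pair per affected group.
    risks: tuple[tuple[float, int], ...]

    def expected_harm(self) -> float:
        """Expected number of people seriously harmed if this option is taken."""
        return sum(p * n for p, n in self.risks)


def utilitarian_choice(options: list[Option]) -> Option:
    """Return the option with the lowest expected harm (ties go to the first listed)."""
    return min(options, key=lambda o: o.expected_harm())


# Hypothetical numbers: one pedestrian directly ahead, or three cyclists in the
# next lane whom the car would strike at a glancing angle.
stay = Option("stay on course", ((0.9, 1),))   # expected harm 0.9
swerve = Option("swerve", ((0.4, 3),))         # expected harm 1.2
print(utilitarian_choice([stay, swerve]).name)  # stay on course
```

Counting every person equally is itself a value judgment; weighting people differently (for example, by age) would be a different and more contested form of utilitarian calculation.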

Deontological Framework

Conversely, the deontological framework emphasizes adherence to moral rules and duties. It judges actions based on their inherent rightness or wrongness, regardless of their consequences. This framework prioritizes the moral principles behind actions, often placing restrictions on certain actions, regardless of the overall good. This is often seen as a more inflexible approach compared to the utilitarian framework, but can offer clear guidelines in situations where certain actions are deemed universally wrong.
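
By contrast, a deontological policy can be sketched as a set of rules that filter out forbidden actions before any consequences are compared. In this hypothetical sketch, the rules forbid steering into people who were not already in the car's path and breaking traffic law; the `Option` fields, the `RULES` list, and the fallback to a default action are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Option:
    name: str
    redirects_harm: bool      # would the car steer into people not already in its path?
    breaks_traffic_law: bool  # e.g. mounting the sidewalk or running a red light
    people_harmed: int        # recorded for comparison, but never consulted by the rules


# Each rule returns True when an option violates it.
Rule = Callable[[Option], bool]
RULES: list[Rule] = [
    lambda o: o.redirects_harm,
    lambda o: o.breaks_traffic_law,
]


def deontological_choice(options: list[Option], default: Option) -> Option:
    """Return the first option that breaks no rule, or `default` if every option does."""
    permitted = [o for o in options if not any(rule(o) for rule in RULES)]
    return permitted[0] if permitted else default


# Hypothetical scenario: three pedestrians ahead, one cyclist in the next lane.
stay = Option("stay on course", redirects_harm=False, breaks_traffic_law=False, people_harmed=3)
swerve = Option("swerve", redirects_harm=True, breaks_traffic_law=False, people_harmed=1)
print(deontological_choice([stay, swerve], default=stay).name)  # stay on course
```

Here the car stays on course even though that harms three people rather than one, because the rule against redirecting harm is applied without ever consulting `people_harmed`. A utilitarian count over the same two options would choose to swerve.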

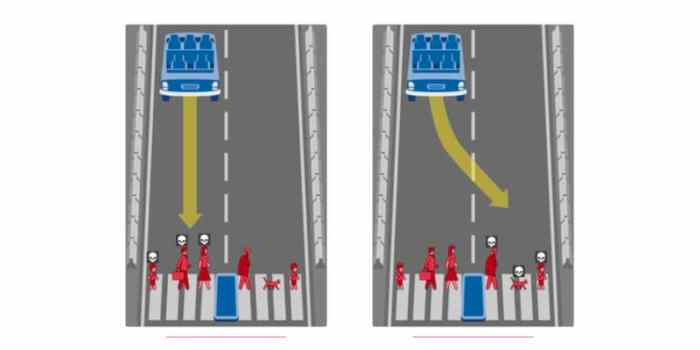

Comparing Frameworks in Accident Scenarios

Consider a scenario where a self-driving car must choose between hitting a pedestrian in its path or swerving into a group of cyclists. A utilitarian approach might estimate the harm to each group and choose the action that minimizes the overall number of casualties. Now reverse the numbers, with a group of pedestrians ahead and a single cyclist in the next lane: a utilitarian approach would swerve, while a deontological approach might keep the car on course, since deliberately steering into someone who was not in the car's path is treated as impermissible, even though more people die as a result.

Challenges in Applying Frameworks to Complex Dilemmas

Applying these frameworks to real-world scenarios presents significant challenges. Moral dilemmas involve numerous factors, including the number, age, and health of those involved and the wider context of the situation. Quantifying the value of human life is particularly difficult and raises ethical concerns.


Specific Dilemmas and Framework Applications

Imagine a scenario where a self-driving car must choose between hitting a pregnant woman and hitting another adult. A utilitarian approach might count the pregnancy as putting two lives at stake and therefore spare the pregnant woman, or it might try to weigh expected remaining years of life, making the decision depend on projected future value. A deontological framework, however, might reject such comparisons altogether, holding that actively choosing to take one life over another is wrong regardless of the numbers.

| Ethical Framework | Core Principle | Example Decision in a Dilemma |
|---|---|---|
| Utilitarian | Maximize overall well-being | Choose the action that minimizes the total harm, even if it means harming one person to save many. |
| Deontological | Adhere to moral rules and duties | Follow fixed rules, such as never actively redirecting harm onto bystanders, regardless of the consequences. |

Societal Impact and Public Perception

The “Moral Machine” project, through its compelling dilemmas and global survey results, has shaped public discussion of self-driving cars. The study highlights the ethical complexities inherent in autonomous vehicles, forcing us to confront the potential for tragic choices and the need for clear guidelines. Understanding how the public perceives these choices is crucial for shaping the future of this technology. The varying ethical preferences revealed by the Moral Machine study underscore the need for open and transparent discussion of the values embedded within autonomous systems.

Different societies and individuals may prioritize different factors in these situations, and these variations must be accounted for when developing the algorithms that will make critical decisions. Public awareness and acceptance are vital for the responsible development and deployment of self-driving technology.

Public Perception of Self-Driving Cars

The Moral Machine results have revealed a global spectrum of ethical preferences, impacting public perception of autonomous vehicles. Some are reassured by the potential for improved safety, while others express concern about the algorithmic nature of decision-making and the potential for bias. The inherent uncertainty associated with these decisions, and the potential for catastrophic outcomes, fuels public apprehension.

Societal Implications of Different Ethical Choices

The ethical choices programmed into autonomous vehicles will have profound societal implications. These implications will vary depending on the specific priorities programmed into the algorithms. The choice of a particular ethical framework will impact how resources are allocated, and how society addresses the responsibility for accidents.

Summary of Potential Societal Implications

| Ethical Choice | Potential Societal Implications |
|---|---|
| Prioritizing the safety of the passenger | May lead to a perception of prioritizing personal safety over societal well-being, potentially impacting public trust. |
| Prioritizing the safety of the pedestrian | May lead to increased pedestrian safety but potentially reduce perceived personal safety for vehicle occupants. |
| Prioritizing the preservation of property | Could result in conflicting public opinion and a need for extensive societal discussion on the value of property versus human life. |
| Prioritizing the fewest casualties | Might be seen as the most ethically neutral option but could lead to complex discussions regarding the prioritization of different groups or types of casualties. |
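
One way to see how these choices translate into software is a weighted cost function in which each policy is simply a set of weights. The sketch below is hypothetical: the `Policy` and `Outcome` classes, the weight values, and the small property weight are illustrative assumptions, not a recommended configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    name: str
    passengers_harmed: int
    pedestrians_harmed: int
    property_damage: float  # arbitrary units


@dataclass(frozen=True)
class Policy:
    passenger_weight: float
    pedestrian_weight: float
    property_weight: float

    def cost(self, o: Outcome) -> float:
        """Weighted harm of an outcome under this policy (lower is better)."""
        return (self.passenger_weight * o.passengers_harmed
                + self.pedestrian_weight * o.pedestrians_harmed
                + self.property_weight * o.property_damage)

    def choose(self, outcomes: list[Outcome]) -> Outcome:
        return min(outcomes, key=self.cost)


# Hypothetical weightings loosely corresponding to rows of the table above;
# the small property weight mainly acts as a tiebreaker.
POLICIES = {
    "passenger-first": Policy(10.0, 1.0, 0.01),
    "pedestrian-first": Policy(1.0, 10.0, 0.01),
    "fewest-casualties": Policy(1.0, 1.0, 0.01),
}

outcomes = [
    Outcome("stay on course", passengers_harmed=0, pedestrians_harmed=1, property_damage=5.0),
    Outcome("swerve into barrier", passengers_harmed=2, pedestrians_harmed=0, property_damage=50.0),
]
for label, policy in POLICIES.items():
    print(f"{label}: {policy.choose(outcomes).name}")
# passenger-first: stay on course
# pedestrian-first: swerve into barrier
# fewest-casualties: stay on course
```

The same two outcomes produce different decisions depending only on the weights, which is why choosing them is a societal question rather than a purely technical one.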

Public Discourse and Input in Self-Driving Car Ethics

Public discourse and input are crucial in shaping the ethical guidelines for self-driving cars. The diversity of perspectives and values within a society needs to be reflected in the development of these systems. Public engagement can help address concerns and promote trust in the technology.

Potential for Bias in Ethical Decision-Making Algorithms

The algorithms that will govern autonomous vehicles could reflect existing societal biases. For example, if the training data predominantly reflects certain demographics or situations, the algorithm might inadvertently prioritize certain groups over others. This bias could result in unequal outcomes for different segments of the population. Care must be taken to mitigate these biases and ensure fairness in decision-making.

Ongoing monitoring and evaluation are vital to identify and rectify any discriminatory patterns.
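
As a minimal sketch of such monitoring, the code below takes a hypothetical log of past decisions, computes how often each group was harmed relative to how often it was at risk, and flags large disparities. The log format, the group labels, and the 1.25 ratio threshold are assumptions chosen for illustration; defining groups and acceptable thresholds is itself a policy decision.

```python
from collections import Counter

# One logged decision: ((group at risk A, group at risk B), group actually harmed).
Decision = tuple[tuple[str, str], str]


def harm_rates(log: list[Decision]) -> dict[str, float]:
    """For each group, times harmed divided by times at risk."""
    exposed = Counter()
    harmed = Counter()
    for groups, victim in log:
        exposed.update(groups)
        harmed[victim] += 1
    return {g: harmed[g] / exposed[g] for g in exposed}


def disparity_flag(rates: dict[str, float], max_ratio: float = 1.25) -> bool:
    """True if the highest harm rate exceeds the lowest by more than max_ratio."""
    lowest, highest = min(rates.values()), max(rates.values())
    if lowest == 0:
        return highest > 0
    return highest / lowest > max_ratio


# Hypothetical decision log for illustration only.
log = [
    (("adult", "child"), "adult"),
    (("adult", "child"), "adult"),
    (("adult", "elderly"), "elderly"),
    (("child", "elderly"), "elderly"),
]
rates = harm_rates(log)
print({g: round(r, 2) for g, r in rates.items()})  # {'adult': 0.67, 'child': 0.0, 'elderly': 1.0}
print(disparity_flag(rates))  # True
```

A flag like this only signals that a pattern deserves review; whether a given disparity is acceptable still has to be judged against the ethical guidelines the system is meant to follow.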

Future Directions and Research

The ethical landscape of self-driving cars is rapidly evolving, demanding continuous research and adaptation. While significant progress has been made in developing the technology, the moral dilemmas inherent in these vehicles require ongoing investigation and dialogue. The “Moral Machine” study, while providing valuable insights, also highlights the complex and multifaceted nature of these choices. This necessitates a multidisciplinary approach that considers technological advancements, societal values, and legal frameworks.

Potential Research Directions

Future research on self-driving car ethics needs to expand beyond the current limitations of the “Moral Machine” methodology. This involves delving deeper into the psychological and societal factors that influence ethical decision-making, examining different ethical frameworks in diverse cultural contexts, and investigating the potential impact of various technological advancements. Analyzing real-world accident scenarios, simulated driving environments, and the long-term consequences of specific ethical guidelines is crucial.

Gaps in Current Knowledge

A significant gap in current knowledge centers around the long-term implications of autonomous vehicle deployment. How will societal values and ethical considerations evolve as self-driving cars become more prevalent? Furthermore, the study of human-machine interaction in high-stakes situations remains a crucial area of investigation. How can we design algorithms that are robust and resilient to unforeseen circumstances while still remaining ethically aligned with human values?

The dynamic nature of societal preferences also requires ongoing assessment to ensure that ethical guidelines remain relevant and adaptable.

Future Scenarios and Ethical Implications

The ethical dilemmas faced by self-driving cars are not static; they evolve with technological advancements. The following table illustrates potential future scenarios and their associated ethical implications:

| Scenario | Ethical Implications |
|---|---|
| Scenario 1: High-density urban traffic with limited infrastructure | The algorithm must prioritize the safety of pedestrians and cyclists in congested urban environments with limited infrastructure, potentially sacrificing the safety of the vehicle occupants. Ethical guidelines must account for variations in pedestrian behavior and urban design. |
| Scenario 2: Autonomous vehicles operating in extreme weather conditions | Algorithms must be programmed to prioritize safety during severe weather events, such as heavy snow or dense fog, potentially leading to trade-offs between the safety of occupants and other road users. |
| Scenario 3: Cybersecurity threats targeting autonomous vehicle systems | Ensuring the security of autonomous vehicle systems against cyberattacks is paramount. This necessitates robust security protocols to prevent malicious actors from manipulating the vehicle’s decision-making processes. Ethical implications of compromised vehicles need to be considered. |

Importance of Dialogue and Collaboration

Effective ethical guidelines for self-driving cars necessitate a collaborative effort. Researchers, engineers, ethicists, and the public must engage in ongoing dialogue to establish shared values and ethical frameworks. Public forums, workshops, and community discussions can foster a deeper understanding of the ethical implications of autonomous vehicles and promote the development of solutions that are acceptable to all stakeholders.

Technological Advancements

Technological advancements in areas like AI, machine learning, and sensor technology will significantly impact the development of self-driving car ethics. Advances in these fields may lead to more sophisticated algorithms that can better anticipate and respond to complex situations. For instance, the development of advanced sensor technology capable of detecting subtle cues or anticipating unexpected behaviors could dramatically alter the ethical considerations of autonomous vehicles.

Illustrative Scenarios and Examples

The Moral Machine project, through its diverse and compelling scenarios, forces us to confront the complex ethical choices inherent in autonomous vehicles. Although these are thought experiments, they stand in for real-world possibilities and demand careful consideration of values and priorities. By exploring various accident scenarios, the study aims to illuminate the nuanced nature of ethical decision-making in a rapidly evolving technological landscape. The project’s creators designed these scenarios to probe our moral intuitions.

Each case presents a tragic dilemma, requiring participants to choose between different harms. Understanding these scenarios and the resulting choices allows us to analyze the potential strengths and weaknesses of different ethical frameworks, and their applicability to autonomous vehicle technology.

Accident Scenarios in the Moral Machine Study

The Moral Machine study employed a variety of accident scenarios designed to provoke a range of ethical responses. Although hypothetical, they highlight the complex factors that self-driving car systems would need to consider in real situations.

Ethical Conflicts in the Scenarios

The scenarios presented in the Moral Machine study frequently pitted one group of people against another, forcing participants to weigh the value of different lives and outcomes. These dilemmas illustrated the lack of clear-cut answers in many situations.

Illustrative Examples of Scenarios

- A scenario involving a pedestrian crossing the road outside a marked crosswalk. The autonomous vehicle must choose between hitting the pedestrian and swerving into another vehicle, potentially injuring or killing the occupants inside. The choice highlights the conflict between protecting the life of a pedestrian versus protecting the occupants of a vehicle. The dilemma is further complicated by the ambiguity surrounding the pedestrian’s actions and intentions.

- A scenario where an autonomous vehicle must choose between hitting a group of children or an elderly couple. This scenario forces a direct comparison between the value of young life and older life. The participants’ responses often reflect cultural or personal biases towards the relative value of different demographics. The dilemma becomes even more complex when considering potential long-term implications of the choice.

- A scenario where an autonomous vehicle is presented with the choice of hitting a pregnant woman or an elderly woman. This scenario raises questions about the value of a potential life versus the value of an existing one. The dilemma underscores the inherent subjectivity of ethical judgments, especially when life expectancy is a variable in the equation.

Table: Ethical Conflicts, Outcomes, and Participant Choices

| Scenario | Ethical Conflict | Potential Outcomes | Most Common Participant Choices |
|---|---|---|---|
| Pedestrian vs. Vehicle | Protecting pedestrian life versus protecting vehicle occupants | Pedestrian fatality or vehicle occupant fatality/injury | Varying across cultures and participant demographics |
| Children vs. Elderly | Weighing the value of young life against the value of older life | Children fatality or elderly fatality/injury | Often, a preference for children’s safety |
| Pregnant Woman vs. Elderly Woman | Balancing the value of a potential life against the value of an existing one | Pregnant woman fatality or elderly woman fatality/injury | Diverse, reflecting varying perspectives on life stages |

Factors Influencing Ethical Choices

- Cultural Values: Cultural backgrounds significantly impact ethical choices, as demonstrated by the varying preferences in different regions regarding the value of different groups. For instance, some cultures may prioritize the safety of children over adults.

- Demographic Factors: Age, gender, and socioeconomic status may influence how individuals perceive and weigh the risks in the scenarios.

- Personal Beliefs: Individual moral philosophies and beliefs about the value of human life shape participants’ choices in the scenarios. This is further demonstrated by variations in responses between participants.

- Situational Context: Factors like the speed of the vehicle and the proximity of other parties involved influence the perceived severity of the dilemma.

Conclusive Thoughts

The MIT Moral Machine study on self-driving car ethics dilemmas highlights the significant challenge of programming ethical decision-making into autonomous vehicles. The diverse responses reveal the complexity of human morality and the need for ongoing dialogue and collaboration among researchers, engineers, ethicists, and the public. The study underscores the importance of considering the societal impact and public perception surrounding these decisions.

Ultimately, this study compels us to confront the fundamental questions about how we want autonomous vehicles to navigate ethically complex situations.