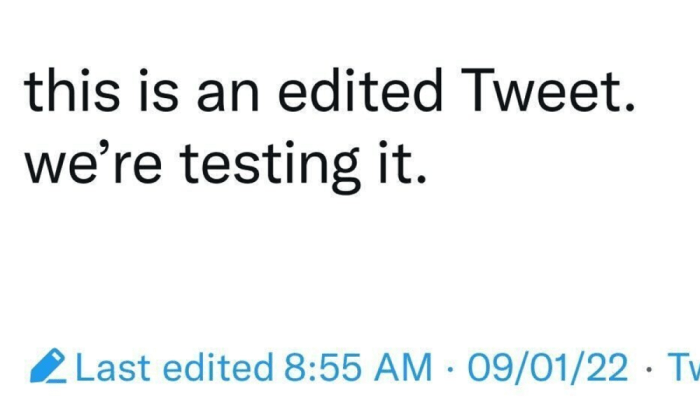

Twitter’s edit button blue test is the subject of this article. We’ll explore the history of Twitter’s attempts at implementing an edit button, the public’s reaction to this test, the technical aspects of the feature, and its potential impact on content moderation, misinformation, and user behavior.

It’s a fascinating look at how social media platforms are adapting to user feedback and the challenges of managing online content.

This experiment is more than just a tweak to a platform. It reflects broader conversations about the permanence of online communication, the responsibilities of social media companies, and the evolving expectations of users. We’ll delve into the specifics of this particular blue test, comparing it to similar features on other platforms, and examining the potential implications for the future of Twitter and social media in general.

Background of Twitter’s Edit Button Experiment

Twitter’s ongoing experiment with an edit button reflects a complex interplay of user demands, platform evolution, and internal strategies. The desire for a simple, reliable way to correct typos and unintentional errors has been a consistent user request. This desire, coupled with the increasing prevalence of social media in public discourse, has made the edit button a focal point for debate.

The experiment signals a potential shift in Twitter’s approach to content moderation and user interaction.

The history of Twitter’s attempts to implement an edit button reveals a gradual progression from initial discussions to a more concrete testing phase. This evolution demonstrates a measured response to user feedback and a cautious approach to significant platform changes. The motivations behind this particular experiment likely include improving user experience, reducing the spread of misinformation, and potentially increasing user engagement and trust.

The test phases and specific features being examined are likely designed to understand the full implications of allowing edits, considering various scenarios and user behaviors.

Historical Overview of Edit Button Discussions

Twitter has been grappling with the complexities of an edit button for several years. Early discussions centered around the challenges of content verification and the potential for abuse. The platform’s unique structure, prioritizing speed and immediacy, made implementing an edit button a complex technical and logistical undertaking. This cautious approach reflects a concern for maintaining the authenticity and immediacy that are core to Twitter’s identity.

Previous experiments and discussions surrounding the edit button, while not publicly documented in detail, likely shaped the current approach to testing.

Potential Motivations Behind the Experiment

Twitter’s motivation for testing the edit button likely stems from a confluence of factors. Improving user experience by enabling corrections and reducing the spread of misinformation are key drivers. The potential to increase user engagement, foster a sense of trust, and enhance the platform’s overall functionality are additional motivations. Furthermore, the platform may aim to adapt to changing social media trends, acknowledging user preferences and evolving communication patterns.

A successful edit button could help Twitter compete with platforms that already offer editing features.

Phases of the Test

While precise details about the phases of the test are not publicly available, it’s reasonable to assume that a phased approach is being employed. This would likely begin with a smaller, controlled group of users, allowing for observation of usage patterns and potential issues. Data collection and analysis during this phase would be critical to understanding the impact of the edit button on the platform.

Subsequent phases would likely involve expansion to a larger user base, allowing for further observation and refinement of the feature.

Features of the Edit Button Under Test

The specific features being tested within the edit button experiment are likely multifaceted. These features could include time limits for edits, restrictions on the number of edits allowed, and mechanisms for preventing abuse. Additionally, the experiment may examine different levels of editability, for example, allowing edits for a limited time after posting or providing a more permanent edit option.

The aim is to balance user convenience with the need to maintain the integrity and authenticity of the platform’s content.

Public Perception and Reactions

The Twitter edit button test, a highly anticipated experiment, sparked a diverse range of reactions from users across the platform. Initial responses ranged from cautious optimism to outright skepticism, reflecting the multifaceted nature of the public’s relationship with social media and its perceived imperfections. Understanding these reactions is crucial to evaluating the potential impact of such a feature.

Public sentiment about the edit button was heavily influenced by pre-existing anxieties and expectations regarding online interactions and the preservation of digital footprints.

The potential for misuse, abuse, and the erosion of trust were prominent concerns voiced by many users, adding layers of complexity to the overall evaluation.

Reactions to the Edit Button Test

Public reactions to the edit button test varied widely, reflecting diverse user experiences and expectations. A significant portion of users expressed concerns about the potential for manipulation and the difficulty in verifying authenticity. Conversely, others lauded the feature as a much-needed tool for correcting errors and improving communication clarity.

Common Themes and Sentiments

Several common themes emerged from the online discussions surrounding the edit button. A prevalent sentiment centered on the fear of manipulation and the potential for misuse of the feature. Concerns regarding the impact on trust and the authenticity of content were prominent. Users also discussed the practical implications of the edit button, such as its usability and potential for abuse.

Impact on User Engagement and Sentiment

The edit button test’s impact on user engagement and sentiment was mixed. Some users felt a heightened sense of responsibility, prompting a more cautious approach to posting. Others saw the edit button as a tool for increased clarity and efficiency, potentially leading to a more positive user experience.

Comparison of Reactions by User Groups

Analyzing reactions across different user groups reveals interesting patterns. Early adopters and frequent users often expressed more positive views, while less active users exhibited greater skepticism. Understanding these nuances is vital for tailoring future implementation strategies.

User Reaction Table

| Demographic | Reaction | Sentiment |
|---|---|---|
| Frequent Twitter Users (High Engagement) | Positive, eager to use the feature for clarification. | Optimistic, perceiving it as a practical tool. |
| Less Active Twitter Users (Low Engagement) | Cautious, skeptical about potential for abuse. | Neutral, waiting to see the full implementation. |
| Users Concerned About Online Safety | Skeptical, expressing distrust in the ability to verify authenticity. | Negative, worried about potential for manipulation. |
| Users Focused on Content Accuracy | Positive, recognizing the benefit of correcting errors. | Optimistic, valuing the feature’s ability to refine communication. |
| Users Primarily Focused on Networking | Mixed, some seeing value in correcting errors, others concerned about impact on interactions. | Neutral, viewing it as a potential tool for enhancing interactions. |

Technical Aspects of the Test

Twitter’s edit button experiment delves into the complexities of modifying already-published tweets. Understanding the technical implementation, potential limitations, and the impact on Twitter’s infrastructure is crucial to evaluating the feasibility and potential consequences of this feature. The user interface design and workflow will directly influence user adoption and experience.

Technical Implementation of the Edit Button

The implementation of an edit button requires significant backend and frontend modifications. Key aspects include:

- Data Storage and Versioning: The system needs to track different versions of a tweet, allowing users to revert to previous iterations. This necessitates a robust database schema to handle the historical data efficiently. A possible approach could involve creating separate timestamped versions of the tweet text and metadata within the database. This would enable a clear audit trail of edits and a way to revert to earlier versions.

For example, Twitter might use a technique similar to Git’s version control system for managing tweet revisions.

- API Modifications: The Twitter API needs modification to accommodate the edit button functionality. This will require new endpoints for creating, updating, and retrieving tweet versions. The API changes will need to be carefully designed to ensure data integrity and consistency. New methods will need to be integrated into the API to handle the addition of revision information to tweets.

- Frontend Integration: The user interface must be updated to incorporate the edit button. This includes design considerations for the edit form, display of previous versions, and visual cues for changes. The user interface must be intuitive and consistent with the existing Twitter design. An example could be a button labelled “Edit” appearing next to the tweet, enabling users to modify the tweet text and attachments.
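The versioning and API points in this list can be sketched in a few lines of Python. This is a minimal in-memory illustration under stated assumptions, not Twitter’s implementation: the `TweetStore` class, its `create`, `edit`, `history`, and `revert` methods, and the append-only list of versions are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class TweetVersion:
    """One immutable snapshot of a tweet's text (hypothetical model)."""
    text: str
    created_at: datetime


@dataclass
class Tweet:
    tweet_id: int
    versions: list[TweetVersion] = field(default_factory=list)

    @property
    def current(self) -> TweetVersion:
        # The newest version is what readers would see by default.
        return self.versions[-1]


class TweetStore:
    """Append-only store: edits add versions, nothing is overwritten."""

    def __init__(self) -> None:
        self._tweets: dict[int, Tweet] = {}
        self._next_id = 1

    def create(self, text: str) -> Tweet:
        tweet = Tweet(self._next_id)
        tweet.versions.append(TweetVersion(text, datetime.now(timezone.utc)))
        self._tweets[tweet.tweet_id] = tweet
        self._next_id += 1
        return tweet

    def edit(self, tweet_id: int, new_text: str) -> Tweet:
        tweet = self._tweets[tweet_id]
        tweet.versions.append(TweetVersion(new_text, datetime.now(timezone.utc)))
        return tweet

    def history(self, tweet_id: int) -> list[str]:
        # Oldest first, so the audit trail reads chronologically.
        return [v.text for v in self._tweets[tweet_id].versions]

    def revert(self, tweet_id: int, version_index: int) -> Tweet:
        # A revert is recorded as a new version, so the trail stays complete.
        old = self._tweets[tweet_id].versions[version_index]
        return self.edit(tweet_id, old.text)


store = TweetStore()
t = store.create("Helo world")
store.edit(t.tweet_id, "Hello world")
store.revert(t.tweet_id, 0)
print(store.history(t.tweet_id))
```

Treating a revert as one more appended version, rather than deleting later versions, keeps the audit trail intact, which is the property the versioning bullet asks for.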

Even a seemingly simple feature like an edit button needs robust security infrastructure to prevent misuse and protect user data.

Potential Limitations and Challenges

Several challenges arise in implementing an edit button, including:

- Data Integrity and Consistency: Maintaining the accuracy and consistency of the timeline of tweets, especially in the context of news or events, presents a significant challenge. The system must be designed to ensure that the edited tweet doesn’t disrupt the chronological order or misrepresent the original tweet’s intent. The system will need robust error handling and validation processes.

- Abuse Prevention: Misuse and abuse of the edit button, such as malicious edits or manipulation of public discourse, are potential concerns. Twitter needs to implement robust safeguards, including moderation and reporting mechanisms, to prevent this. The system should include controls to limit the number of edits per tweet or time period.

- Scalability: As Twitter’s user base and tweet volume grow, the infrastructure must be scalable to handle the increased load and maintain performance. Efficient database queries and caching mechanisms are crucial to manage the data volume effectively.
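The edit limits mentioned under abuse prevention could look something like the following sketch. The 30-minute window, the cap of five edits, and the `can_edit` function are illustrative assumptions, not values or names taken from the test described here.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical policy values chosen only for illustration.
EDIT_WINDOW = timedelta(minutes=30)
MAX_EDITS = 5


def can_edit(posted_at: datetime, edits_so_far: int, now: datetime) -> bool:
    """Return True if an edit is still allowed under the sketch policy."""
    within_window = now - posted_at <= EDIT_WINDOW
    under_limit = edits_so_far < MAX_EDITS
    return within_window and under_limit


posted = datetime(2022, 9, 1, 12, 0, tzinfo=timezone.utc)
print(can_edit(posted, 0, posted + timedelta(minutes=10)))  # inside the window
print(can_edit(posted, 0, posted + timedelta(minutes=45)))  # window expired
print(can_edit(posted, 5, posted + timedelta(minutes=10)))  # edit cap reached
```

Passing `now` in explicitly, instead of reading the clock inside the function, keeps the rule easy to test and to reason about.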

Impact on Platform Infrastructure and Database

Implementing an edit button will significantly impact Twitter’s infrastructure and database.

- Increased Database Complexity: The database will become more complex to accommodate the new versions of tweets and metadata. Additional storage space and potential performance degradation will need to be factored in. Storing historical versions of tweets will increase the database’s size and potential query times.

- Increased Server Load: The increased data volume and complexity will increase the server load. Twitter needs to anticipate this and design a scalable system to handle the load efficiently. The servers need to be prepared to handle requests for editing, retrieving, and displaying tweets, potentially requiring additional server resources.

User Interface Design

The user interface (UI) for the edit button should be intuitive and seamless.

- Clear Instructions: Instructions for using the edit button should be clear and easy to understand. Visual cues and prompts should guide users through the process, minimizing confusion and errors.

- Visual Feedback: Visual feedback should clearly indicate the changes being made to the tweet. This includes highlighting modified text and providing clear indication of the changes to the tweet’s metadata.
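One way to produce the visual feedback described in this list is a word-level diff between the old and new text. The sketch below uses Python’s standard `difflib` module. The `highlight_changes` function and the `[-removed-]` / `{+added+}` markers are assumptions for illustration, and a real interface would render these as styled text rather than plain markers.

```python
import difflib


def highlight_changes(old: str, new: str) -> str:
    """Mark removed words as [-word-] and added words as {+word+}."""
    old_words, new_words = old.split(), new.split()
    matcher = difflib.SequenceMatcher(a=old_words, b=new_words)
    out: list[str] = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            out.extend(old_words[i1:i2])
        else:
            # "replace" yields both sides; "delete" and "insert" yield one.
            out.extend(f"[-{w}-]" for w in old_words[i1:i2])
            out.extend(f"{{+{w}+}}" for w in new_words[j1:j2])
    return " ".join(out)


print(highlight_changes("Meeting at 5pm today", "Meeting at 6pm today"))
# prints: Meeting at [-5pm-] {+6pm+} today
```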

Workflow and Steps Involved

The workflow for using the edit button should be straightforward.

- Locate the Tweet: Identify the tweet to be edited.

- Initiate Edit: Click the edit button associated with the tweet.

- Make Changes: Modify the tweet text and metadata as needed.

- Save Changes: Click the save button to apply the changes.

Impact on Content Moderation and Misinformation

The Twitter edit button experiment, while seemingly a simple feature, presents complex challenges for content moderation and misinformation control. The ability to alter previously published tweets raises significant concerns about the platform’s capacity to maintain a trustworthy information ecosystem. A key consideration is how this feature will interact with existing content moderation policies and procedures.

An edit button creates the possibility of retroactive manipulation of information, potentially undermining the integrity of the historical record on the platform.

This could have serious repercussions for public discourse and the ability of users to trust the information they encounter. The need for robust systems to detect and address potential misuse becomes paramount.

Potential Impact on Content Moderation Efforts

The edit button’s implementation presents a significant challenge to existing content moderation strategies. The ability to edit tweets after publication necessitates a shift in the platform’s approach to content verification and fact-checking. Traditional methods of flagging and removing content based on its initial publication may become less effective, requiring a more nuanced and proactive approach to monitoring and responding to edits.

Potential Risks Associated with the Edit Button

The edit button introduces several potential risks, particularly concerning the spread of misinformation. Users could potentially edit false or misleading information to alter its original meaning or context, making it more difficult to identify and address false claims.

- Retroactive manipulation of information: A user could edit a tweet that initially contained misinformation to appear accurate, potentially deceiving readers who have already encountered the original tweet. This retroactive manipulation significantly complicates the task of verifying and evaluating content on the platform.

- Amplification of misinformation: The ease with which tweets can be edited could potentially lead to a rapid spread of misinformation. If an edited tweet is retweeted or shared, the false information could gain wider reach and credibility. The edit button’s potential for enabling this kind of retroactive amplification of misleading claims is a critical concern.

- Damage to public discourse: The ability to alter tweets after their initial publication could erode public trust in the platform’s information ecosystem. This could negatively impact public discourse and the exchange of ideas.

Potential Solutions and Mitigations

Addressing the potential risks requires a multifaceted approach. Transparency is crucial. Twitter should clearly communicate to users the implications of the edit button and the potential for abuse. A system for tracking edits, identifying patterns, and flagging potential abuse is also essential.

- Timestamping and edit history: Maintaining a detailed edit history for each tweet could help users assess the reliability of the information. This would include the original tweet, all subsequent edits, and the timestamp of each change.

- Enhanced content moderation algorithms: Algorithms need to be refined to identify and flag tweets that have been significantly edited to correct or obscure misinformation. This requires a significant investment in machine learning and natural language processing to detect these subtle changes.

- Community reporting mechanisms: Robust mechanisms for users to report suspicious edits are vital. These reporting systems should be easy to access and allow users to provide context and evidence to support their claims. A clear escalation path for reports is also needed.
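As a rough illustration of flagging “significantly edited” tweets, the sketch below compares the word sequences of two versions with `difflib` and flags an edit when similarity falls below a cutoff. The `REVIEW_THRESHOLD` value and both function names are assumptions. A production system would need far more than lexical similarity.

```python
import difflib

# Hypothetical cutoff: below this similarity an edit is treated as a rewrite.
REVIEW_THRESHOLD = 0.6


def edit_similarity(old: str, new: str) -> float:
    """Similarity in [0, 1]; 1.0 means the word sequences are identical."""
    matcher = difflib.SequenceMatcher(a=old.lower().split(), b=new.lower().split())
    return matcher.ratio()


def needs_review(old: str, new: str) -> bool:
    return edit_similarity(old, new) < REVIEW_THRESHOLD


typo_fix = ("The launch is on Tuseday at noon", "The launch is on Tuesday at noon")
rewrite = ("The vaccine is unsafe and untested", "Everyone should read the official guidance")
print(needs_review(*typo_fix))  # small correction, not flagged
print(needs_review(*rewrite))   # near-total rewrite, flagged
```

The obvious weakness is that a one-word change such as “safe” to “unsafe” scores as highly similar while reversing the meaning, which is why the list above pairs automated checks with community reporting.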

Examples of Potential Misuse

The edit button could be misused in numerous ways. For instance, a politician could edit a tweet to remove a controversial statement, or a news organization could quietly rewrite a breaking news report without signalling that it was changed.

- Political manipulation: A political candidate could edit a tweet to retract a controversial statement or change its meaning after it has gained traction. This could alter the narrative and potentially sway public opinion.

- Spreading misinformation: A user could initially post false information and then edit the tweet to appear accurate after it has been widely shared. This could effectively erase the trail of the original misinformation and create a new, misleading narrative.

- Fabricating events: A user could fabricate a tweet about a non-existent event and then edit it to reflect a more plausible scenario.

Framework for Handling Abuse and Misinformation

A robust framework is needed to address cases of abuse and misinformation after the implementation of the edit button. This framework should incorporate:

- Clear guidelines and policies: The platform must have clear guidelines regarding the use of the edit button, outlining acceptable and unacceptable uses.

- Automated detection systems: Develop algorithms to detect suspicious edits, identifying patterns and potential misuse.

- Human review process: Implement a system for human review of reported edits, allowing for context and nuance to be considered.

- Escalation procedures: Define a clear process for escalating reports to higher levels of moderation when necessary.
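The framework above, with automated detection feeding human review and escalation, can be pictured as a small routing function. Everything in this sketch is hypothetical: the `Action` names, the risk score in the range 0 to 1, and the thresholds are placeholders rather than platform policy.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    ESCALATE = "escalate"


def triage(risk_score: float, user_reports: int) -> Action:
    """Route an edit using an automated risk score and a user report count.

    The thresholds are illustrative assumptions, not platform policy.
    """
    if risk_score >= 0.9 or user_reports >= 10:
        return Action.ESCALATE
    if risk_score >= 0.5 or user_reports >= 1:
        return Action.HUMAN_REVIEW
    return Action.ALLOW


print(triage(0.1, 0))   # low risk, no reports
print(triage(0.6, 0))   # moderate risk goes to a human reviewer
print(triage(0.2, 12))  # heavily reported edits are escalated
```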

Comparison with Other Social Media Platforms

The Twitter edit button experiment is a fascinating case study, not just in terms of its potential impact on Twitter’s user base, but also in the broader context of how social media platforms approach content editing. Understanding how other platforms handle content changes provides valuable insights into the complexities of this issue, highlighting both the benefits and drawbacks of different approaches.

Comparing Twitter’s proposed edit button to existing features on other social media platforms reveals a diverse landscape of solutions and trade-offs.

Different platforms prioritize varying aspects of content moderation, user experience, and the overall user ecosystem. This comparison helps to understand the nuances of the debate around the edit button, moving beyond simple binary discussions.

Existing Edit Button Features on Other Platforms

Different social media platforms have various ways to allow users to edit their posts. Some offer limited editing capabilities, while others offer more robust options. This diversity stems from the unique approaches each platform has developed to maintain user trust and control content quality.

- Facebook allows users to edit posts after publishing and keeps an edit history that readers can view. This provides a relatively simple solution, aligning with its focus on fostering community interactions and discussions.

- Instagram, while primarily visual, allows users to edit captions and some details of their posts after publishing. Editing is centered on the text around a post rather than the image itself, reflecting Instagram’s focus on visually engaging content.

- Reddit, known for its community-driven approach, allows users to edit their comments and the body of their text posts after publishing, although post titles cannot be changed. This mechanism aims to foster collaborative discussion and information exchange.

- LinkedIn, a platform focused on professional networking, enables users to edit the text of a post after publishing, emphasizing the importance of accurate and well-considered professional communication.

Strengths and Weaknesses of Different Approaches

The approaches to content editing vary greatly across social media platforms. The strengths and weaknesses of each method depend on the platform’s goals, user base, and the types of content shared.

- Limited Editing Windows: Platforms that restrict edits to a short window after posting limit the potential for misinformation, because a post cannot be rewritten once it has circulated widely. However, this approach can also lead to a sense of finality, which might discourage users from sharing their thoughts.

- Robust Editing Tools: Platforms with more extensive editing options allow users to better clarify their intentions or correct factual errors. This flexibility can foster better communication and trust, but it also increases the risk of edits made for reasons other than correction, creating potential for manipulation.

Comparative Analysis Table

| Platform | Edit Button Feature | Strengths | Weaknesses |
|---|---|---|---|
| Twitter | Proposed edit button | Increased user flexibility, allows for corrections, fosters a more conversational environment. | Potential for manipulation, increased difficulty in content moderation, increased risk of misinformation spread. |
| Facebook | Edit posts after publishing | Allows for corrections, maintains a sense of accountability. | Limited editing capabilities, potentially disincentivizes users to carefully consider their posts initially. |
| Instagram | Limited editing capabilities | Maintains visual focus, reduces potential for misinformation. | Limited ability to correct errors, less flexibility for users. |
| Reddit | Edit comments and posts after publishing | Encourages community-driven corrections, fosters transparency. | Limited ability to modify entire posts, potential for manipulation of comments. |
| LinkedIn | Edit posts after publishing | Encourages professional communication, facilitates clarification. | Limited ability to make significant changes, potential for misrepresentation of ideas. |

Potential Future Implications

Twitter’s experiment with the edit button, while seemingly simple, holds significant implications for the platform’s future, user behavior, and the broader landscape of social media. The potential for increased accuracy and reduced damage from hasty tweets is balanced against concerns about the potential for manipulation and the difficulty of enforcing consistent standards. The implications extend beyond the immediate response to the test itself, affecting how users interact with the platform, the trust in the content shared, and the very nature of public discourse.

Long-Term Consequences of the Edit Button

The edit button’s lasting impact will depend heavily on how Twitter handles its implementation. If poorly managed, it could erode trust in the platform’s integrity. Users may become more hesitant to engage in public discourse, fearing their words will be misinterpreted or later edited to suit another narrative. Conversely, a carefully managed edit function could foster more thoughtful communication, encouraging users to refine their messages before hitting “tweet.” This could lead to a more mature and nuanced online conversation, but also potentially a greater pressure to present a perfect image online.

Impact on User Experience

The edit button has the potential to significantly alter the user experience. Users might feel more empowered to correct errors, clarifying their intentions and minimizing the spread of misinformation. However, it could also lead to a more chaotic environment, where the validity of past tweets becomes questionable. The constant possibility of editing might also create a sense of anxiety and distrust among users, particularly if the implementation isn’t transparent or consistently enforced.

Impact on Platform Policies and Guidelines

Twitter’s existing policies and guidelines will need to be adapted to accommodate the edit button. This includes clear definitions of what constitutes an acceptable edit, along with a process for handling disputes and appeals. Implementing a system of timestamps and version histories for edited tweets is crucial to maintaining transparency and accountability. Defining reasonable limits on edits, and how frequently edits are allowed, is critical for avoiding abuse.

Twitter’s Adaptation Strategies

Twitter will need to develop sophisticated strategies to address the potential issues raised by the edit button. This includes a robust system for flagging potentially malicious edits, a system for determining the appropriate scope of edits, and a clear communication strategy for informing users about the edit button’s implementation. Proactive engagement with user feedback will be vital in refining the platform’s approach.

For instance, the introduction of a “finalized” status for tweets, signifying that further edits are no longer possible, could be a potential solution to address concerns about manipulating the timeline.

Future of Content Moderation

The edit button will undoubtedly impact content moderation strategies. Social media platforms will need to re-evaluate their approach to content moderation in the context of edited content. This will require developing new tools and protocols for identifying and addressing potentially harmful or misleading information, even after it has been edited. The challenge lies in striking a balance between allowing users to correct mistakes and preventing the misuse of the edit feature to spread misinformation or manipulate public perception.

Potential Impact on User Behavior

The Twitter edit button experiment is poised to significantly reshape user interactions on the platform. Understanding how users adapt to this new capability is crucial to predicting the overall impact on content quality, engagement, and the spread of misinformation. An edit button brings a level of dynamism and potential for behavior change that deserves careful consideration.

The edit button fundamentally alters the relationship between users and their posts.

This change from a permanent, immutable record to a more fluid, editable one necessitates a re-evaluation of the platform’s established norms and how users interact with them. This potential shift in behavior has implications that extend beyond individual user actions and affect the platform’s overall health and functionality.

Influence on User Engagement

The ability to correct errors or refine statements immediately after posting could lead to a notable increase in user engagement. Users might feel more comfortable sharing initial thoughts or drafts, knowing they can amend them if needed. This could encourage more frequent posting and interaction. Conversely, the ease of editing might also lead to a decreased sense of urgency in producing high-quality, well-considered content.

Impact on User Trust and Credibility

The edit button presents a complex challenge to user trust and credibility. While it allows for corrections of factual errors, it also introduces the potential for intentional manipulation or the distortion of past statements. Users might become more cautious about accepting information at face value, especially if the context surrounding the edits is not immediately apparent. The potential for users to edit their statements to better suit their current perspective, or even to retroactively tailor their narratives, is a major concern.

Potential for Increased Misinformation

While the edit button offers a tool for correcting errors, it also provides an avenue for the spread of misinformation. Malicious actors could potentially edit their initial posts to propagate false or misleading information after those posts have been widely shared. This dynamic could create a significant challenge in distinguishing between genuine corrections and deliberate manipulation. Existing mechanisms for content moderation may need to be adjusted to address this evolving threat.

Examples of User Interaction Changes

- Increased posting frequency, as users feel more comfortable sharing initial drafts knowing they can refine them later.

- Reduced immediacy in sharing responses to events, as users might wait to see how a conversation evolves before committing to a definitive statement.

- Increased scrutiny of past statements, leading users to re-evaluate prior content and its context.

- Potential for decreased user trust in content authenticity, due to the ability to retroactively edit statements.

Concluding Thoughts

In conclusion, Twitter’s edit button blue test is a complex experiment with far-reaching implications. From the historical context to the potential impact on user behavior and content moderation, this test sparks important discussions about the evolving nature of online communication. The reactions, the technical challenges, and the potential for misuse all point towards a nuanced understanding of the complexities surrounding social media platforms.

As we move forward, it’s clear that the edit button, and the broader concept of online content editing, will continue to be a central topic of discussion.