The link between YouTube’s recommendation algorithm, online radicalization, and Mozilla’s work on platform accountability is a complex issue. The YouTube algorithm, with its powerful ability to shape content discovery, intersects with online radicalization, a growing concern. Mozilla, a prominent player in online safety, offers valuable insights and tools. This exploration delves into the workings of the YouTube algorithm, examining its role in amplifying or suppressing radical content.

We’ll also analyze how Mozilla’s initiatives address this growing problem. This discussion will examine the potential for bias in the algorithm and how it might contribute to the spread of extremist views.

The algorithm’s prioritization of trending videos and user engagement metrics can have a significant impact on the content users encounter. This, in turn, can affect the likelihood of exposure to radical ideologies. Mozilla’s efforts to combat misinformation and harmful content provide a crucial counterpoint. The intersection of these factors demands careful consideration and proactive strategies.

YouTube Algorithm Impact on Content

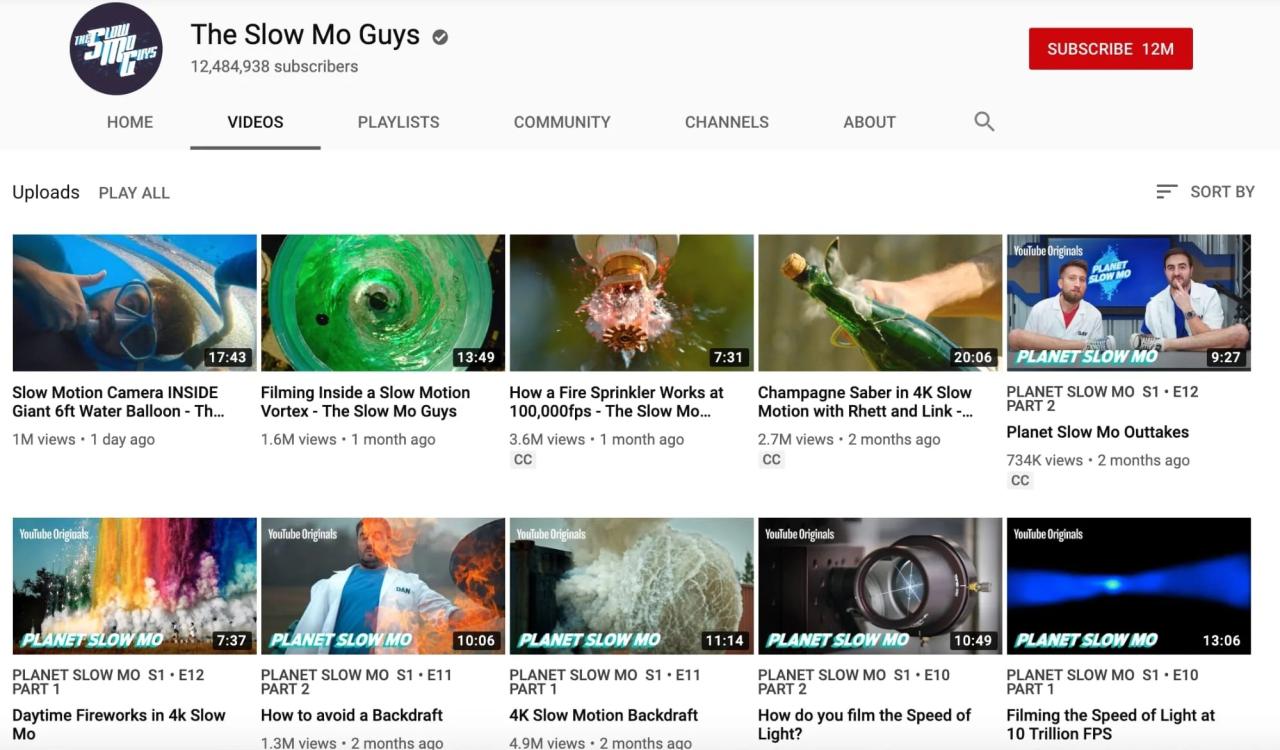

The YouTube algorithm is a complex system that determines which videos users see in their feeds and recommendations. Understanding its inner workings is crucial for creators looking to maximize visibility and engagement. This intricate process impacts everything from initial views to long-term channel growth. The algorithm is constantly evolving, responding to user behavior and content trends.

The algorithm weighs a multitude of factors, dynamically adjusting its recommendations to provide users with a tailored experience. This means content that resonates with a user’s viewing history and interests will be more prominent. The algorithm also considers the broader context of the platform, such as trending topics and emerging creators, to ensure users are exposed to diverse and engaging content. This constantly shifting landscape requires creators to adapt their strategies and understand the algorithm’s preferences.

Content Prioritization

The YouTube algorithm prioritizes content that exhibits high user engagement. This includes metrics like watch time, likes, comments, and shares. Videos that are highly engaging are more likely to be recommended to a wider audience, leading to a positive feedback loop. Furthermore, trending videos, those that gain popularity rapidly due to viral or timely content, receive significant algorithmic boosts.

This dynamic prioritization means that newer, trending content frequently appears prominently in recommendations, sometimes eclipsing content from established channels.

User Engagement Metrics

Several user engagement metrics significantly influence the YouTube algorithm’s decisions. Watch time is a critical factor, with longer watch times indicating greater user interest. Engagement metrics like likes, comments, and shares further signal content quality and relevance. These factors are combined to assess the overall value and appeal of a video. A video that garners significant watch time and positive engagement signals a strong connection with the audience, potentially leading to more recommendations.
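As a rough illustration of how such signals might be combined, the sketch below computes a weighted score from per-impression engagement rates. YouTube does not publish its ranking formula, so the `VideoStats` fields, the weights, and the sample numbers are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class VideoStats:
    """Engagement counts for one video (hypothetical schema)."""
    title: str
    watch_minutes: float
    impressions: int
    likes: int
    comments: int
    shares: int


# Illustrative weights only; these are not YouTube's real values.
WEIGHTS = {"watch": 1.0, "likes": 0.5, "comments": 0.8, "shares": 1.2}


def engagement_score(v: VideoStats) -> float:
    """Combine per-impression engagement rates into a single number."""
    if v.impressions == 0:
        return 0.0
    return (
        WEIGHTS["watch"] * v.watch_minutes / v.impressions
        + WEIGHTS["likes"] * v.likes / v.impressions
        + WEIGHTS["comments"] * v.comments / v.impressions
        + WEIGHTS["shares"] * v.shares / v.impressions
    )


def rank(videos: list[VideoStats]) -> list[VideoStats]:
    """Order videos from highest to lowest engagement score."""
    return sorted(videos, key=engagement_score, reverse=True)


videos = [
    VideoStats("calm explainer", 3000, 1000, 50, 10, 5),
    VideoStats("outrage clip", 2800, 1000, 200, 300, 150),
]
ranked_titles = [v.title for v in rank(videos)]
```

With these made-up numbers, the clip with slightly less watch time but far more likes, comments, and shares ranks first, which is the kind of outcome an engagement-weighted score tends to produce.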

Content Characteristics and Amplification/Suppression

The algorithm analyzes various content characteristics, including video length, title, description, and tags. Content that aligns with the platform’s guidelines and community standards is more likely to be amplified. Content that violates these standards, such as promoting harmful ideologies or engaging in spammy behavior, may be suppressed or even removed. Presentation matters as well: videos that are excessively long or lack engaging visuals may be less likely to receive prominent recommendations, while concise, visually appealing videos on trending topics are often prioritized.

Factors Contributing to Algorithm Decision-Making

The algorithm’s decision-making process is influenced by a multitude of interconnected factors. These include user search queries, viewing history, watch time, engagement metrics, and the characteristics of the content itself. The algorithm also considers the channel’s history, subscriber count, and past performance. These multifaceted considerations contribute to the complexity of the YouTube algorithm and its impact on content visibility.

Potential for Bias in the YouTube Algorithm

Algorithmic bias can manifest in various ways, impacting the visibility of specific content types or creators. This bias can be unintentional, stemming from the algorithm’s training data or the design of its various features. It can also be intentional, potentially leading to the suppression or promotion of specific viewpoints or demographics. For example, an algorithm trained on data skewed towards a particular geographic region might inadvertently favor content from that region over others.

The presence of bias in the algorithm can lead to uneven distribution of recommendations, limiting exposure for creators whose content may otherwise be engaging and valuable.

Radicalization Online

The digital age has created unprecedented opportunities for the spread of extremist ideologies. Online platforms, while offering connection and information, have also become fertile ground for radicalization. This process, often subtle and insidious, involves individuals or groups using the internet to promote specific ideologies, influencing perceptions and behaviors. Understanding the factors driving online radicalization, the methods employed, and the characteristics of the content involved is crucial for developing effective countermeasures.

Online radicalization is a complex phenomenon influenced by a multitude of interconnected factors. These include pre-existing vulnerabilities, perceived grievances, and the individual’s engagement with specific online communities and content. The availability of readily accessible information, often presented in a biased or distorted manner, can be particularly impactful. This is especially true for vulnerable individuals who may lack critical thinking skills or experience a sense of isolation or alienation.

Factors Contributing to Online Radicalization

Individuals’ pre-existing vulnerabilities, including psychological traits, socio-economic circumstances, and past experiences, can increase susceptibility to radical ideologies. A lack of social support, a sense of alienation, or a desire for belonging can make individuals more receptive to online groups promising acceptance and purpose. Grievances and injustices, whether real or perceived, can fuel resentment and a desire for change, which extremist groups often exploit.

The internet’s accessibility and anonymity can embolden individuals who might otherwise be hesitant to express or act on their beliefs.

Methods of Promoting Radical Ideologies Online

Extremist groups and individuals utilize various methods to spread their messages and influence potential recruits. These methods include the creation of targeted online communities, the dissemination of propaganda through social media, and the exploitation of vulnerable individuals. The use of social engineering techniques, such as manipulation and persuasion, plays a significant role in recruitment. A significant element involves crafting narratives that resonate with potential recruits’ experiences and aspirations, often presenting simplistic solutions to complex problems.

This can include the manipulation of historical events, the use of emotionally charged language, and the creation of false or misleading information.

Characteristics of Radicalizing Online Content

Certain characteristics of online content can contribute to radicalization. Content that presents a simplistic view of complex issues, emphasizes grievances and injustices, and promotes a sense of victimhood or exceptionalism can be particularly persuasive. The use of emotionally charged language, conspiracy theories, and the manipulation of information are common tactics. The repetition of messages and the reinforcement of negative perceptions can create a sense of urgency and certainty.

This often involves the deliberate avoidance of nuance and complexity, presenting a one-sided perspective.

Content Sharing and Amplification

The sharing and amplification of radical content are critical components of the online radicalization process. Social media algorithms, designed to maximize engagement, can inadvertently amplify radical messages, exposing them to a wider audience. The use of hashtags, online forums, and private messaging groups facilitates the rapid spread of information, allowing extremist groups to cultivate a network of supporters.

The creation of echo chambers and the targeted dissemination of information through these online communities contribute to the reinforcement of extremist ideologies.

Comparison of Radicalization Processes Across Platforms

Different online platforms exhibit unique characteristics that influence the radicalization process. For example, social media platforms often utilize algorithms that prioritize engagement and virality, potentially amplifying radical content. Dedicated forums and encrypted messaging platforms, on the other hand, might facilitate more direct interaction and recruitment by extremist groups. The anonymity offered by certain platforms can embolden individuals to express or act on their radical beliefs.

Understanding these platform-specific characteristics is crucial for developing targeted countermeasures.

Mozilla’s Role in Online Safety

Mozilla, a non-profit organization dedicated to the open web, plays a crucial role in fostering a safer and more trustworthy online environment. Their initiatives extend beyond simply developing web browsers, encompassing a broader commitment to digital well-being and combating online harms. This commitment includes initiatives to combat misinformation and harmful content, aiming to create a more positive and productive online experience for all.

Mozilla’s commitment to online safety stems from a deep understanding of the potential for technology to be used for harm, as well as the potential for technology to be used for good. Their actions are guided by the belief that a healthy online environment is essential for a healthy society, and that technology should be used to promote positive values and prevent negative outcomes. Their efforts are driven by a strong belief in the power of an open and accessible internet, and in the ability of individuals to use this power for positive purposes.

Mozilla’s Initiatives for Online Safety

Mozilla has developed various tools and resources aimed at increasing online safety and combating misinformation. These initiatives often target the spread of harmful content and the promotion of responsible digital citizenship. A key component of their strategy is educating users on recognizing and avoiding harmful content, fostering a sense of digital literacy, and equipping them with the tools necessary to protect themselves.

Mozilla’s Efforts to Address Misinformation

Mozilla actively addresses misinformation through research, development, and partnerships. Their focus includes developing tools to help users identify potentially false or misleading information online, thereby empowering them to make informed decisions. Mozilla also collaborates with researchers and educators to raise awareness about the dangers of misinformation. For instance, their work has explored how algorithms can amplify or suppress particular viewpoints, contributing to a better understanding of the dynamics of information dissemination.

Mozilla’s Tools and Resources for Combating Online Radicalization

Mozilla recognizes the complex relationship between online radicalization and the spread of misinformation. Their approach to combating online radicalization includes educational initiatives and the development of tools to help users critically evaluate online content. By providing tools to discern credible information from misleading content, they empower users to make informed decisions and avoid being exposed to potentially harmful influences.

Mozilla also advocates for media literacy education, helping individuals develop critical thinking skills and build resilience against manipulation.

Specific Actions Regarding the YouTube Algorithm

Mozilla has examined YouTube’s recommendation algorithm directly. Its RegretsReporter browser extension let volunteers flag videos they regretted watching, and the resulting 2021 report, “YouTube Regrets”, found that most of the flagged videos had been surfaced by YouTube’s own recommendations. Beyond this research, Mozilla advocates for transparency and accountability in online platforms’ algorithms, recognizing that the design and implementation of these algorithms can have a significant impact on the spread of harmful content. Their general stance is to encourage platforms to be more mindful of the potential consequences of their algorithms on users and society.

Mozilla’s Efforts Summarized

| Initiative | Description | Impact on Online Safety |
|---|---|---|
| Developing tools for identifying misinformation | Creating tools to help users detect false or misleading information. | Empowers users to make informed decisions and avoid harmful content. |
| Promoting media literacy | Educating users on critical thinking and evaluating online content. | Builds resilience against manipulation and fosters responsible digital citizenship. |
| Advocating for algorithm transparency | Encouraging platforms to be transparent about how their algorithms function. | Helps prevent the unintended spread of harmful content. |

Intersection of YouTube Algorithm and Radicalization

The YouTube algorithm, a powerful tool for content discovery, has the potential to both amplify and suppress radical content. Its complex workings, while intended to provide users with relevant and engaging material, can inadvertently create echo chambers and expose individuals to harmful ideologies. This intersection requires careful examination to understand the potential for radicalization and the role of platforms like YouTube in mitigating such risks.

The YouTube algorithm’s impact on the spread of radical content is multifaceted. Its prioritization of certain content types can have a significant effect on what users see and engage with, influencing their exposure to extremist views. This, in turn, has implications for individual users and society as a whole.

Categorization of Radical Content on YouTube

Understanding the various forms of radical content is crucial to evaluating the algorithm’s potential role in its dissemination. Different types of radical content often present themselves in diverse forms, making identification and categorization a complex challenge.

| Category | Description | Examples |
|---|---|---|
| Hate Speech | Content that attacks or demeans individuals or groups based on attributes like race, religion, or nationality. | Vicious online attacks targeting minorities, inflammatory rhetoric against particular groups. |
| Extremist Ideologies | Content advocating for extreme political, social, or religious views. | Content promoting violence, revolution, or intolerance towards specific ideologies. |
| Propaganda | Content designed to manipulate or persuade audiences toward a particular viewpoint, often with misleading information. | Dissemination of fabricated news, biased reporting to sway opinions. |
| Incitement to Violence | Content that encourages or glorifies acts of violence or terrorism. | Videos showcasing violent attacks, recruitment materials for extremist groups. |
| Misinformation/Disinformation | Content containing false or misleading information that is intentionally spread to harm or deceive others. | Spreading conspiracy theories, fabricated news about specific groups. |

Prioritized Content Types by YouTube Algorithm

YouTube’s algorithm prioritizes content based on various factors, including user engagement, watch time, and trending topics. This prioritization can unintentionally favor content that is visually compelling or emotionally charged, even if it contains harmful or radical elements. The algorithm’s reliance on engagement metrics can lead to a cycle of escalating content, as attention-grabbing content tends to receive more views, further pushing it to the top of recommendations.
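The cycle described here can be sketched as a small deterministic simulation. This is a toy model under stated assumptions, not a description of YouTube’s system: the function name `simulate_feedback`, the click-through rates, and the rule that each round’s impressions are split in proportion to accumulated views are all hypothetical.

```python
def simulate_feedback(click_rates, rounds=10, impressions_per_round=10_000):
    """Split each round's impressions in proportion to accumulated views.

    Returns each video's final share of total views.
    """
    views = [1.0] * len(click_rates)  # every video starts on equal footing
    for _ in range(rounds):
        total = sum(views)
        shares = [v / total for v in views]
        # Views gained = impressions allocated to the video * its click rate.
        views = [
            v + impressions_per_round * s * c
            for v, s, c in zip(views, shares, click_rates)
        ]
    total = sum(views)
    return [v / total for v in views]


# Hypothetical rates: the second video is only slightly more attention-grabbing.
final_shares = simulate_feedback([0.05, 0.06])

# Baseline: the share the second video would earn if impressions were split evenly.
even_split_share = 0.06 / (0.05 + 0.06)
```

In this model the second video ends with a larger share of views than its click-rate advantage alone would give it under an even split, because every round of extra views earns it a bigger slice of the next round’s impressions.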

Amplification and Suppression of Radical Content

The YouTube algorithm’s ability to amplify or suppress radical content depends on numerous variables. While the algorithm may aim to suppress harmful content, its capacity to do so is limited by its reliance on user signals and the inherent difficulty in identifying and classifying radical material. Automated systems do not understand content the way people do and may misinterpret it, leading to the inadvertent promotion of radical ideologies.

Spread of Extremist Views through Recommendations

YouTube’s recommendations, driven by the algorithm, can expose users to extremist content that they might not otherwise encounter. This targeted exposure can be particularly concerning for susceptible individuals, leading to a gradual adoption of extremist views or the reinforcement of existing biases. The algorithm, by prioritizing engagement metrics, can inadvertently create echo chambers where individuals are surrounded by like-minded views and exposed to increasingly radical content.
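One way to put a number on how narrow a feed has become is to measure the diversity of topics in a recommendation list. The sketch below uses Shannon entropy for that purpose. The topic labels and the idea of tagging each recommended video with a single topic are assumptions for illustration; neither YouTube nor Mozilla is known to use this exact measure.

```python
import math
from collections import Counter


def topic_entropy(topics: list[str]) -> float:
    """Shannon entropy (in bits) of the topic labels in a recommendation list.

    0.0 means every recommendation shares one topic; higher means more variety.
    """
    counts = Counter(topics)
    n = len(topics)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


mixed_feed = ["news", "music", "cooking", "sports"]
narrow_feed = ["politics", "politics", "politics", "politics"]

mixed_bits = topic_entropy(mixed_feed)
narrow_bits = topic_entropy(narrow_feed)
```

Tracking a value like this over a viewing session would show whether recommendations are converging on a single theme, though it says nothing about whether that theme is harmful.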

Relationship between Algorithm and Mozilla Initiatives

Mozilla’s initiatives, like its work on combating online hate speech, directly intersect with YouTube’s algorithm. Mozilla’s research and advocacy can inform YouTube’s understanding of radical content and its spread, potentially leading to adjustments in the algorithm to reduce the visibility of such content. These initiatives work towards the goal of creating a safer online environment, where users are not subjected to harmful content through the algorithms of online platforms.

Illustrative Examples of Radical Content

Identifying and understanding radical content online is crucial for mitigating its harmful effects. This involves recognizing various forms of expression, from subtle incitement to blatant calls for violence. A nuanced approach is needed, as the line between controversial opinion and dangerous advocacy can be blurred. This section presents illustrative examples, analyzing their potential impact and context.

Understanding the strategies employed to disseminate radical content is equally important. This involves examining the platforms used, the targeting of specific audiences, and the techniques used to manipulate and influence. Recognizing these tactics is a vital step in countering the spread of harmful ideologies.

Examples of Online Content Promoting Violence

Content that directly promotes violence, or incites hatred against specific groups, poses a significant risk. This type of content often utilizes inflammatory language, graphic imagery, and persuasive rhetoric to evoke strong emotional responses in viewers. The context and potential audience for such content often include individuals already predisposed to violence or those seeking validation for their existing biases.

| Example Type | Detailed Description | Potential Impact | Context & Audience | Promotion Strategies |
|---|---|---|---|---|
| Online Hate Speech | A social media post containing hateful rhetoric directed at a particular ethnic or religious group, using derogatory language and inciting prejudice. The post might include violent imagery, such as symbols associated with hate groups. | Can lead to real-world violence and discrimination, fueling existing biases and prejudices. It can also normalize hatred and create an environment of fear and intimidation. | Targeted at individuals who already harbor prejudice or those seeking validation for their existing biases. The content might be shared in groups or communities with pre-existing hostile sentiments. | Often shared through social media platforms, particularly those with lax moderation policies. Content creators may utilize hashtags to increase visibility and reach a broader audience. |
| Instructional Videos | Videos showcasing methods of bomb-making or other forms of violence, often presented as DIY tutorials or “how-to” guides. The content may include detailed instructions and graphic demonstrations. | Can directly equip individuals with the knowledge and skills to carry out violent acts. The potential for harm is substantial, as such videos provide clear and detailed instructions. | Attracts individuals interested in violence, often those with pre-existing tendencies or motivations. The content may be targeted towards individuals seeking a sense of power or control. | Hidden behind layers of encryption or distributed through encrypted messaging platforms, making detection and removal more challenging. Utilizing coded language or misleading titles can also conceal the true nature of the content. |
| Recruitment Propaganda | A website or online forum designed to attract individuals to a particular extremist group. The content may include narratives of victimhood, promises of belonging, and calls for action. | Can effectively radicalize individuals and recruit them to extremist groups. The content aims to exploit vulnerabilities and offer a sense of purpose. | Targeted towards individuals who feel marginalized, disenfranchised, or seeking a sense of belonging. This type of content may exploit personal struggles or grievances to attract susceptible individuals. | May utilize sophisticated social engineering techniques, such as creating believable narratives and portraying the group as a solution to perceived problems. |

Examples of Content Promoting Misinformation

Misinformation, while not always directly violent, can still have severe consequences. It can manipulate public perception, erode trust in institutions, and contribute to harmful social divisions. Such content often spreads through social media, using emotionally charged language and misleading narratives.

| Example Type | Detailed Description | Potential Impact | Context & Audience | Promotion Strategies |
|---|---|---|---|---|
| Disinformation Campaigns | A coordinated effort to spread false information about a particular political candidate or social issue, using various online platforms. The content often presents a simplified or biased perspective. | Can significantly impact public opinion and voting behavior. It can also erode trust in legitimate sources of information. | Targeted at a broad audience, seeking to sway public opinion on specific issues or candidates. The content may exploit existing biases and concerns within the community. | Leverages the power of social media, exploiting algorithms to maximize reach and engagement. Content creators may utilize bots or coordinated efforts to amplify the spread of misinformation. |
| Fabricated News Articles | Fake news articles designed to spread false narratives about a particular topic or event. The articles often contain misleading headlines and sensationalized language. | Can lead to public confusion, distrust, and potentially dangerous actions. | Targeted at a broad audience, seeking to exploit existing anxieties or interests. The content might appeal to individuals who lack critical thinking skills. | Often relies on clickbait titles and emotionally charged language to attract attention. The articles may be designed to appear credible, mimicking legitimate news outlets. |

Potential Mitigation Strategies

Combating the spread of radical content online requires a multifaceted approach that involves not only platform responsibility but also user engagement and community participation. YouTube, as a platform with significant global reach, must actively implement strategies to detect and remove harmful content while fostering a safe environment for its users. This necessitates a proactive rather than reactive approach, focusing on prevention as much as remediation.

Effective mitigation strategies need to address the underlying mechanisms driving radicalization and the methods used to disseminate such content. This includes understanding how algorithms contribute to the problem and developing countermeasures that disrupt the cycle of radicalization. It’s not simply about removing content; it’s about understanding the context and intent behind it, and how to proactively prevent its resurgence.

Content Moderation Policy Enhancements

YouTube’s current content moderation policies need significant enhancement. These improvements should encompass a more sophisticated approach to identifying and removing radical content, incorporating machine learning and human oversight in a balanced way. A crucial component is the development of a clear and publicly accessible definition of radical content. This definition must go beyond simple categorization and include nuanced analysis of the intent and potential harm of the content.

- Defining Radical Content: A clear, transparent definition of radical content is crucial. This definition should include not only explicit calls to violence or hatred but also the subtle cues, propagandistic language, and manipulative rhetoric often used to incite extremism. It should be consistently applied to all content regardless of origin or user group. Such a definition should be collaboratively developed, engaging experts in relevant fields and considering international legal frameworks to ensure its applicability and impartiality.

- Multi-Layered Content Review: Implementing a multi-layered review process can improve the effectiveness of content moderation. This involves using automated systems to identify potential radical content initially, followed by a thorough review by human moderators. This ensures a balance between efficiency and accuracy, preventing the automated systems from becoming overwhelmed or misclassifying benign content as harmful.

- Continuous Monitoring and Adaptation: Content moderation policies should not be static. They need to be continuously monitored and adapted to reflect emerging trends in radical content and the evolving tactics of extremist groups. This includes using real-time data analysis to identify emerging threats and adapting policies to counter them effectively.
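The multi-layered review described in the list above can be sketched as a two-stage pipeline. This is a minimal illustration rather than YouTube’s actual process: the `first_pass` function, the 0.6 flagging threshold, and the classifier scores are all hypothetical.

```python
def first_pass(scored_items, flag_at=0.6):
    """Automated first layer of a two-stage review.

    scored_items: iterable of (item_id, classifier_score) pairs, score in [0, 1].
    Returns (review_queue, untouched). Flagged items are only queued for a
    human moderator, most suspicious first; nothing is removed automatically.
    """
    flagged = [(item_id, score) for item_id, score in scored_items if score >= flag_at]
    untouched = [item_id for item_id, score in scored_items if score < flag_at]
    flagged.sort(key=lambda pair: pair[1], reverse=True)
    review_queue = [item_id for item_id, _ in flagged]
    return review_queue, untouched


# Made-up scores from an assumed upstream classifier.
review_queue, untouched = first_pass(
    [("vid-a", 0.72), ("vid-b", 0.10), ("vid-c", 0.99)]
)
```

Leaving the final decision with a person is the design choice that matters here: the threshold controls how much lands in the human queue, so lowering it catches more borderline content at the cost of a heavier moderation workload.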

User Reporting and Community Moderation

Empowering users to report radical content is a critical component of effective mitigation. The reporting mechanism should be intuitive, easily accessible, and provide clear guidelines for reporting different types of harmful content. Furthermore, the platform should encourage a culture of responsible reporting and discourage the abuse of the reporting system.

- Accessible Reporting Mechanisms: YouTube should ensure its reporting mechanisms are user-friendly, multilingual, and accessible to all users. This includes clear instructions and examples of different types of radical content. An important element is providing feedback to users on the outcome of their reports.

- Community Guidelines Enforcement: Community guidelines should be actively enforced to foster a safe and inclusive environment. Users who repeatedly violate these guidelines should face appropriate consequences, including account restrictions or suspensions. Transparency and clear communication are key in this process, enabling users to understand the reasoning behind any actions taken.

- Training for Moderators: YouTube moderators should undergo comprehensive training to equip them with the knowledge and skills necessary to identify and assess radical content effectively. This includes training in recognizing different forms of manipulation and propaganda, as well as understanding the psychological factors involved in radicalization.

Automated Detection and Removal Methods

Developing robust automated systems for detecting radical content is essential to scale the mitigation efforts. These systems must be continuously updated and refined to adapt to the ever-changing nature of online radicalization.

- Machine Learning Algorithms: Advanced machine learning algorithms can analyze vast amounts of data to identify patterns and anomalies indicative of radical content. These algorithms should be continuously trained and evaluated to ensure their accuracy and prevent bias. The training data should be diverse and representative of the different types of content present on the platform.

- Keyword Filtering: While simple keyword filtering can be effective for identifying obvious forms of radical content, it’s crucial to supplement it with more sophisticated methods. This is because extremists often use coded language and euphemisms, making simple keyword detection inadequate. Keyword filters should be combined with context analysis and user behavior patterns.

- Contextual Analysis: Analyzing the context surrounding content is critical to identify the intent and potential harm. This includes examining the surrounding comments, the user’s history, and the overall environment in which the content is shared. This sophisticated analysis can distinguish between genuine expressions of opinion and radical messages.
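To show why term-based filtering needs contextual signals alongside it, the sketch below blends a word-list match with two context features. Everything in it is an assumption for illustration: the placeholder `BLOCKLIST`, the feature names `flagged_comment_ratio` and `uploader_prior_strikes`, and the 0.4/0.3/0.3 weights.

```python
import re

# Placeholder terms for illustration; a real list would be curated by experts.
BLOCKLIST = {"attack", "destroy"}


def keyword_hits(text: str) -> int:
    """Count blocklisted whole words in the text, ignoring case."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(1 for word in words if word in BLOCKLIST)


def risk_score(text: str, flagged_comment_ratio: float, uploader_prior_strikes: int) -> float:
    """Blend keyword evidence with context signals into a score in [0, 1].

    flagged_comment_ratio is expected to be between 0 and 1.
    """
    keyword_part = min(keyword_hits(text), 3) / 3
    strikes_part = min(uploader_prior_strikes, 3) / 3
    return 0.4 * keyword_part + 0.3 * flagged_comment_ratio + 0.3 * strikes_part


coded = "Time for the spring cleaning we talked about"
history_lecture = "Why did the army attack and destroy the fortress in 1450?"

coded_score = risk_score(coded, flagged_comment_ratio=0.8, uploader_prior_strikes=3)
lecture_score = risk_score(history_lecture, flagged_comment_ratio=0.0, uploader_prior_strikes=0)
```

The coded message contains no listed terms yet scores higher than the history lecture once the surrounding comments and uploader history are taken into account, while the lecture’s two keyword matches alone are not enough to push it to the top.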

Challenges in Implementation

Implementing these strategies will face several challenges. The dynamic nature of radical content, the complexity of online interactions, and the ethical considerations surrounding content moderation all contribute to the difficulty.

- Defining “Radical” Content: Achieving a universally agreed-upon definition of radical content that balances freedom of expression and safety is a significant challenge. Different cultures and communities may have varying interpretations of what constitutes radical content.

- Maintaining Accuracy and Speed: The constant evolution of online radicalization requires ongoing adaptation of automated detection systems. Maintaining the accuracy and speed of these systems is crucial to keeping pace with the threat.

- Balancing Free Speech and Safety: The line between restricting freedom of expression and ensuring user safety is delicate. Strategies must ensure that legitimate speech is not stifled while effectively mitigating the spread of harmful content.

Closing Summary

In conclusion, the relationship between YouTube’s recommendation algorithm, online radicalization, and Mozilla’s work highlights a crucial intersection of technology, ideology, and safety. The algorithm’s power to shape online experiences, coupled with the challenges of online radicalization, demands a multi-faceted approach. Mozilla’s efforts, alongside improved content moderation policies and user awareness, are vital in mitigating the risks. This discussion underscores the need for ongoing vigilance and innovation in tackling online radicalization.